[WITH CODE] Optimization: Adaptive regret for regime-shifting markets

Why “looking good on average” can be useless after a regime change—and how shifting-experts fixes it.

Table of contents:

Introduction.

Quantifying failure in non-stationary environments.

Model risks and limitations.

Formalism for failure.

A framework for principled adaptation.

Shifting-experts algorithm.

Meta-optimization dilemma.


Introduction

In our preceding discourse, we talked about the features of parameter-free optimization, a methodology designed to liberate quantitative strategists from the tedious and hazardous task of parameter tuning. The allure was undeniable: escape the perilous cycle of tweaking lookback windows, volatility thresholds, and rebalancing frequencies. That process often culminates in overfitted models: brittle constructs that perform well in-sample only to disintegrate upon exposure to live market conditions. By excising these explicit parameters, the objective was to engineer systems with inherent robustness. Yet an unsettling reality persists, one that we must now confront directly:

Every model possesses an implicit parameter—the market regime in which it was conceived.

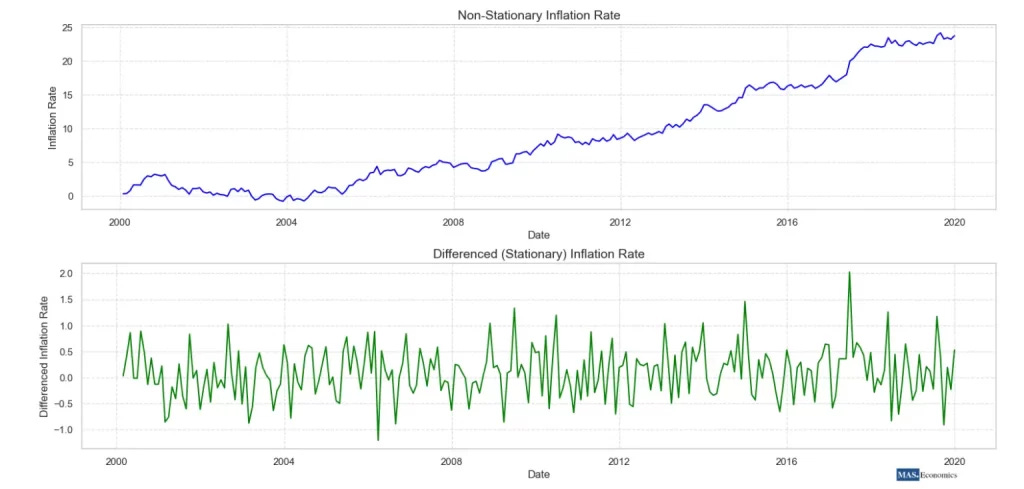

A so-called “parameter-free” model is, in truth, inextricably linked to its developmental environment. It assimilates a collection of rules, statistical relationships, and dynamic behaviors from the dataset upon which it is trained. A market regime can be conceptualized as a distinct set of statistical properties:

Its volatility structure, the covariance matrix governing inter-asset relationships, and the degree of autocorrelation present in its price series.

Its characteristic signal-to-noise ratio.

Consequently, a model trained during a placid era of low inflation and consistent economic expansion internalizes a set of assumptions—a market worldview—that may be invalidated when inflationary pressures mount and volatility becomes the dominant market force. A momentum strategy, for instance, honed in a trending, low-volatility bull market, is predisposed to systematic failure in a choppy, high-volatility, mean-reverting environment where every apparent breakout is a deceptive trap. The optimization problem has not been solved; it has merely been displaced to a higher, more abstract plane. The model is implicitly “optimized” for the statistical fingerprint of its training data.

Because of that, our focus shifts from the granular details of model parameters to the more encompassing and critical challenge of model adaptation. The primary risk we aim to dissect and mitigate is that of model-regime mismatch: the inevitable failure of a static model operating within a dynamic, non-stationary world. Conventional backtesting methodologies frequently compound this risk by fostering a hazardous form of analytical myopia. They assess performance using metrics that average across an entire historical timeline, such as the Sharpe ratio, Sortino ratio, or total compounded return. While these metrics may appear impressive, they can conceal a dangerous truth: a strategy might have been exceptionally profitable for a five-year period before systematically bleeding capital for the subsequent two years.

This averaging effect is deceptive and represents the pivotal conflict we must resolve. A trader, after all, does not experience the “average” return; they live through the path-dependent, day-to-day fluctuations of their profit and loss. A strategy that incurs persistent losses for two years is not a viable strategy; it is a liability, irrespective of how stellar its long-term average performance appears on a backtest report.

The pivotal event that propels our entire research is the regime break—that critical juncture when the market fundamentally alters its behavior, when established rules cease to apply, often with startling abruptness and little to no forewarning.

Quantifying failure in non-stationary environments

The principal obstacle confronting the quantitative strategist is not the market’s innate propensity for change, but rather the inadequacy of our conventional tools for performance measurement. Our analytical arsenal is largely predicated on an implicit, and often unstated, assumption of stationarity—the notion that the statistical properties of the financial universe are stable and time-invariant.

In the context of financial markets, this is arguably the single most perilous assumption one can make. Our task is to deconstruct this outdated paradigm and erect a new analytical framework from its foundations.

Let us ground this discussion in a concrete, quantitative examination. The standard operating procedure for evaluating a trading strategy involves computing a suite of performance metrics over a fixed historical period, for example, a decade. These metrics typically include the Sharpe Ratio, Calmar Ratio, Compound Annual Growth Rate (CAGR), and maximum drawdown. The fundamental, inescapable flaw in this approach is that these are global metrics. They perform an act of aggressive dimensionality reduction, condensing a rich, time-varying, path-dependent sequence of returns into a single, sterile numerical value.

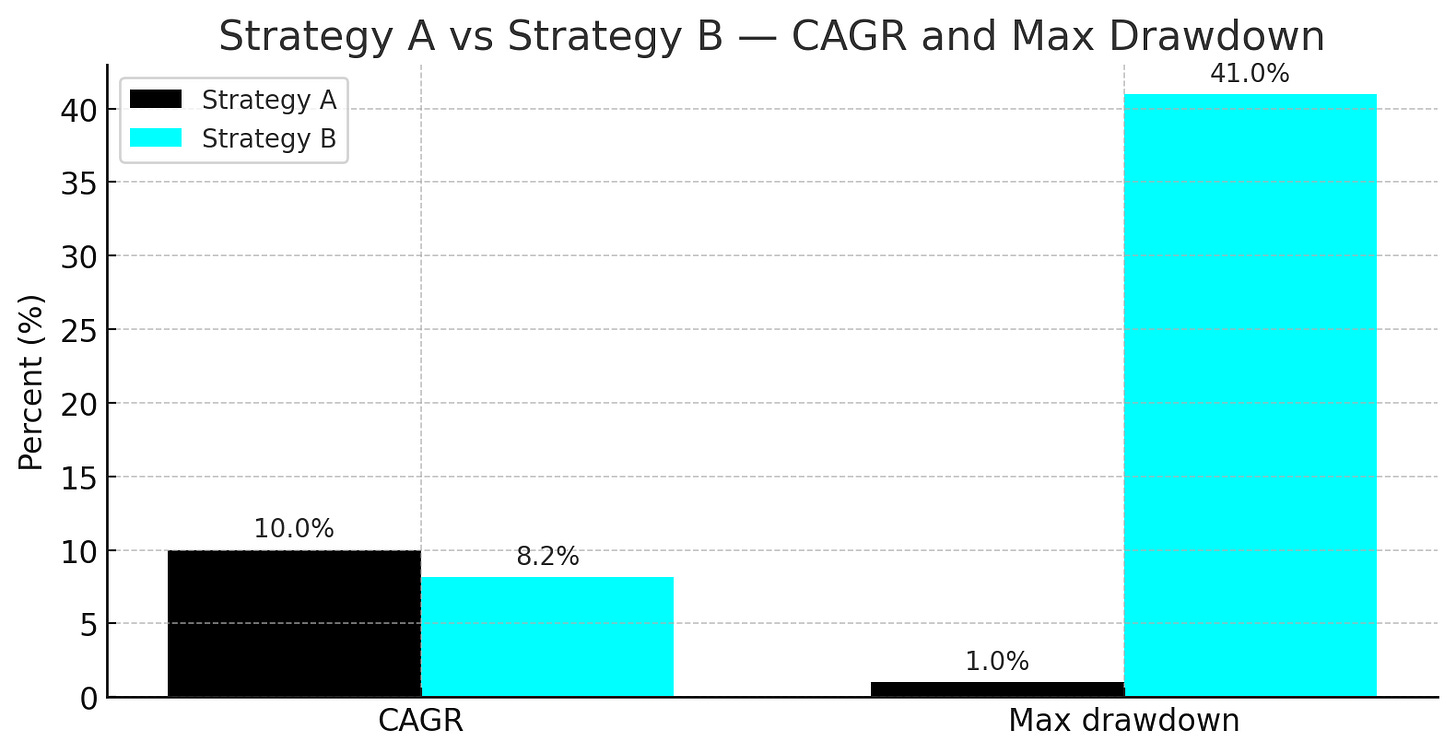

To illustrate the severity of this issue, consider two hypothetical strategies, A and B, over a 10-year horizon:

Strategy A: Generates a consistent, steady 10% return each year for the entire 10-year period. Its CAGR is precisely 10%. The maximum drawdown is negligible. The performance is smooth, predictable, and confidence-inspiring.

Strategy B: Delivers a spectacular 30% annual return for the first 5 years, followed by a painful -10% annual return for the next 5 years. The compounded total return over the decade results in a CAGR of approximately 8.2%, since \(\left(1.30^{5} \times 0.90^{5}\right)^{1/10} - 1 \approx 0.082\). Its maximum drawdown, incurred entirely in the second half of the backtest, is substantial: measured on year-end values alone it is \(1 - 0.90^{5} \approx 41\%\).
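The arithmetic above is easy to check. The following is a minimal sketch using NumPy. Drawdown is measured on year-end equity only, so intra-year dips are ignored.

```python
import numpy as np

# Annual arithmetic returns of the two hypothetical strategies
ret_a = np.full(10, 0.10)
ret_b = np.array([0.30] * 5 + [-0.10] * 5)


def cagr(annual_returns):
    """Compound annual growth rate from a sequence of annual arithmetic returns."""
    growth = np.prod(1.0 + annual_returns)
    return growth ** (1.0 / len(annual_returns)) - 1.0


def max_drawdown(annual_returns):
    """Largest peak-to-trough decline of the year-end equity curve."""
    equity = np.concatenate(([1.0], np.cumprod(1.0 + annual_returns)))
    running_peak = np.maximum.accumulate(equity)
    return float(np.max(1.0 - equity / running_peak))


cagr_a, cagr_b = cagr(ret_a), cagr(ret_b)
mdd_b = max_drawdown(ret_b)
print(f"CAGR A: {cagr_a:.4f}  CAGR B: {cagr_b:.4f}  MaxDD B: {mdd_b:.4f}")
```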

When judged solely by the global metric of CAGR, Strategy A appears only marginally superior to Strategy B. However, any rational investor or risk manager would—and should—abandon Strategy B after year 6 or 7, as it would be demonstrably failing in the current market environment. A quantitative analyst who places blind faith in the 10-year CAGR of Strategy B is courting disaster by deploying a model that is actively destroying capital. The global metric has utterly failed to signal the regime break in the strategy’s performance. It has averaged a period of exceptional success with a period of abject failure, yielding a final assessment of “mediocre,” a descriptor that accurately characterizes neither period and provides zero actionable intelligence for capital allocation decisions today.

The obstacle is one of analytical perspective. We are conditioned by the conventions of quantitative finance to place our trust in these summary statistics.

They are convenient for reporting, for comparison, and for marketing. The first, most crucial step is to actively unlearn this reliance and begin to conceptualize performance as a local, not global, phenomenon. The key question is not How did this strategy perform over the last decade? but rather: How is this strategy performing now, and in the recent past?

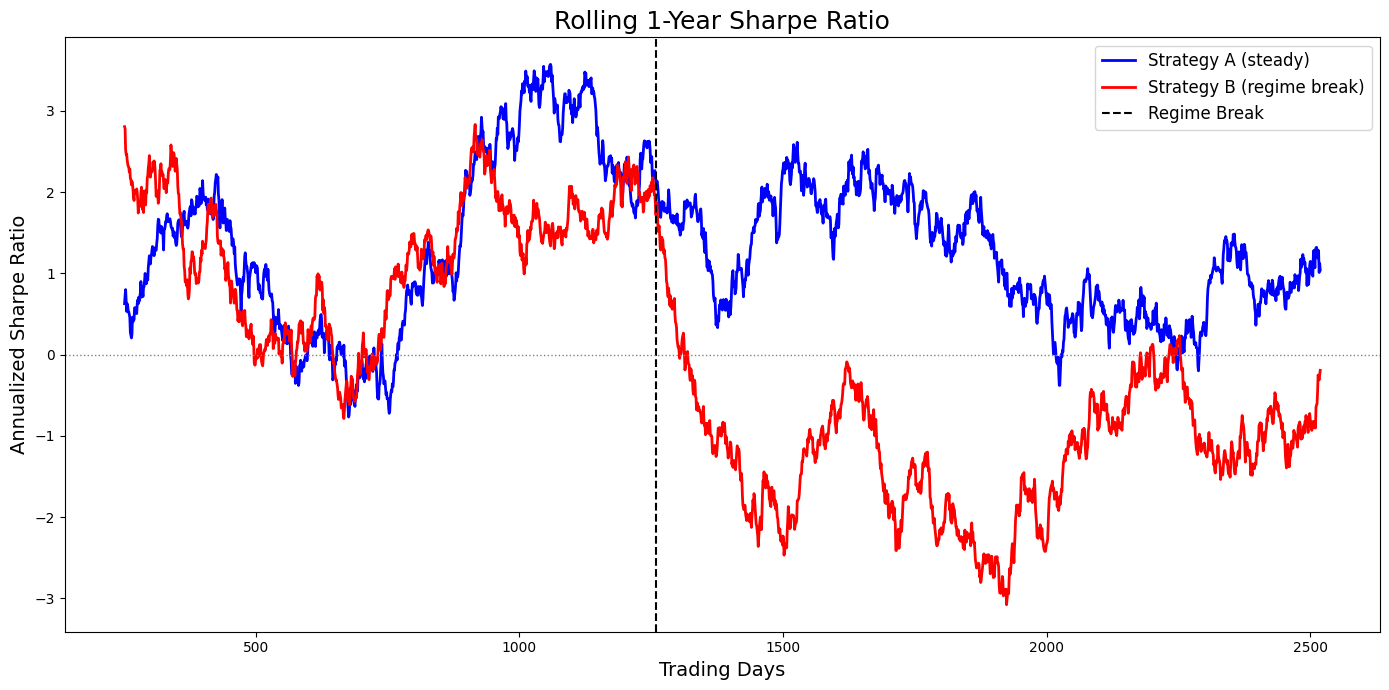

We need to care more about the derivative of the equity curve than its final value. To visualize this, let’s plot the rolling Sharpe ratio of our two strategies. We will simulate daily returns to make the analysis more realistic.
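The simulation code itself is not reproduced here. The following is a minimal sketch under our own assumptions:

- i.i.d. Gaussian daily log-returns, with drifts matched to the annual returns above.
- 252 trading days per year.
- 10% annualised volatility for both strategies.
- A zero risk-free rate.
- A one-year (252-day) rolling window.

The seed and volatility level are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(42)
DAYS, YEARS = 252, 10
daily_vol = 0.10 / np.sqrt(DAYS)  # assumed 10% annualised volatility for both strategies

# Daily log-return drifts that reproduce the annual returns of the example
mu_a = np.full(DAYS * YEARS, np.log(1.10) / DAYS)
mu_b = np.concatenate([np.full(DAYS * 5, np.log(1.30) / DAYS),
                       np.full(DAYS * 5, np.log(0.90) / DAYS)])
r_a = mu_a + daily_vol * rng.standard_normal(mu_a.size)
r_b = mu_b + daily_vol * rng.standard_normal(mu_b.size)


def sharpe(r):
    """Annualised Sharpe ratio of daily log-returns (zero risk-free rate)."""
    return float(np.mean(r) / np.std(r, ddof=1) * np.sqrt(DAYS))


def rolling_sharpe(r, window=DAYS):
    """Annualised Sharpe over trailing windows; entry k uses r[k:k + window]."""
    win = np.lib.stride_tricks.sliding_window_view(r, window)
    return win.mean(axis=1) / win.std(axis=1, ddof=1) * np.sqrt(DAYS)


for name, r in (("A", r_a), ("B", r_b)):
    cagr = np.exp(r.sum() / YEARS) - 1.0
    rs = rolling_sharpe(r)
    half = rs.size // 2
    print(f"Strategy {name}: global Sharpe {sharpe(r):.2f}, CAGR {cagr:.1%}, "
          f"mean rolling Sharpe {rs[:half].mean():.2f} (first half) / "
          f"{rs[half:].mean():.2f} (second half)")
```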

Judged by these numbers, Strategy B still looks somewhat viable, albeit inferior to A.

The plot, however, tells a different story. Strategy A’s rolling Sharpe ratio remains consistently positive and stable, oscillating around its long-term average. In stark contrast, Strategy B’s rolling Sharpe ratio is exceptionally high for the first five years and then plunges, remaining deeply and persistently negative for the second five years. The visual evidence is unequivocal: Strategy B is broken. The global metric, by averaging the good with the bad, commits a grievous error of misrepresentation. This visualization crystallizes the core problem: our tools are not designed to detect failure in real-time.

Model risks and limitations

Before continuing, it’s important to be aware that the adaptive regret framework, for all its potential, is not infallible. A proficient quantitative strategist is defined not only by their models but by their awareness of those models’ limitations. To deploy such a system without a rigorous understanding of its failure modes is to trade analytical hubris for financial ruin.

The single most critical limitation to internalize is that minimizing regret is not the same as maximizing risk-adjusted returns. The regret metric is a valuable proxy for adaptive capability, but it is not the ultimate objective function of a trading business, which is typically some function of PnL, volatility, and drawdown.

Achieving a small or even negative adaptive/dynamic regret provides a guarantee that your algorithm performed nearly as well as the best available sequence of experts. However, if all the experts in your universe lose money during a particular period (e.g., a market crash where all long-only strategies suffer), your low-regret portfolio will still lose money. The framework guarantees you’ll lose money efficiently, but it doesn’t prevent the loss itself.

The solution is not to discard regret but to supplement it with a dashboard of traditional risk and performance metrics. Never rely on regret alone. Continuously monitor rolling PnL, Sharpe/Sortino ratios, maximum drawdown, and turnover-cost drag. Implement hard-coded risk management overlays, such as a kill-switch that flattens the portfolio if a predefined maximum drawdown threshold is breached or if realized volatility spikes beyond a certain limit. Regret tells you about your relative performance; PnL tells you if you’re still in business.
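A hard-coded overlay of the kind described above could look like the sketch below. Several details are hypothetical choices, not values from the article: the function name, the 20% drawdown limit, the 20-day volatility window and the 40% annualised volatility cap. Once triggered, the overlay stays flat for the rest of the series, i.e. until someone resets it manually.

```python
import numpy as np


def apply_kill_switch(log_returns, max_drawdown=0.20, vol_window=20,
                      vol_limit=0.40, periods_per_year=252):
    """Hypothetical risk overlay. Passes returns through until either the net
    drawdown exceeds max_drawdown or the trailing realised volatility
    (annualised) exceeds vol_limit, then stays flat (zero return) for the rest
    of the series. The check at step t only uses returns before t."""
    r = np.asarray(log_returns, dtype=float)
    gated = np.zeros_like(r)
    log_equity, peak, triggered_at = 0.0, 0.0, None
    for t in range(r.size):
        drawdown = 1.0 - np.exp(log_equity - peak)
        recent = r[max(0, t - vol_window):t]
        vol = recent.std(ddof=1) * np.sqrt(periods_per_year) if recent.size > 1 else 0.0
        if drawdown > max_drawdown or vol > vol_limit:
            triggered_at = t  # flatten from step t onwards; gated stays 0
            break
        gated[t] = r[t]
        log_equity += r[t]
        peak = max(peak, log_equity)
    return gated, triggered_at


# Usage: a strategy losing 1% (log) every day
gated, day = apply_kill_switch(np.full(100, -0.01))
print(day, round(gated.sum(), 2))
```

With a constant -1% daily log-return, the net drawdown first exceeds 20% at step 23 (\(1 - e^{-0.23} \approx 0.205\)), and that is where the overlay flattens the position.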

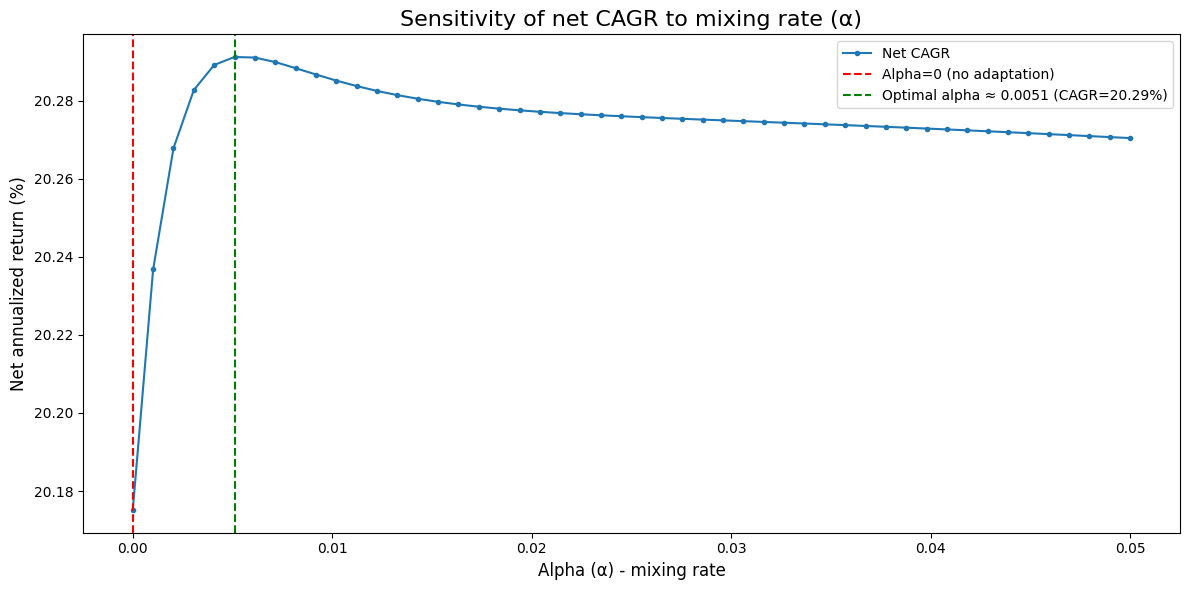

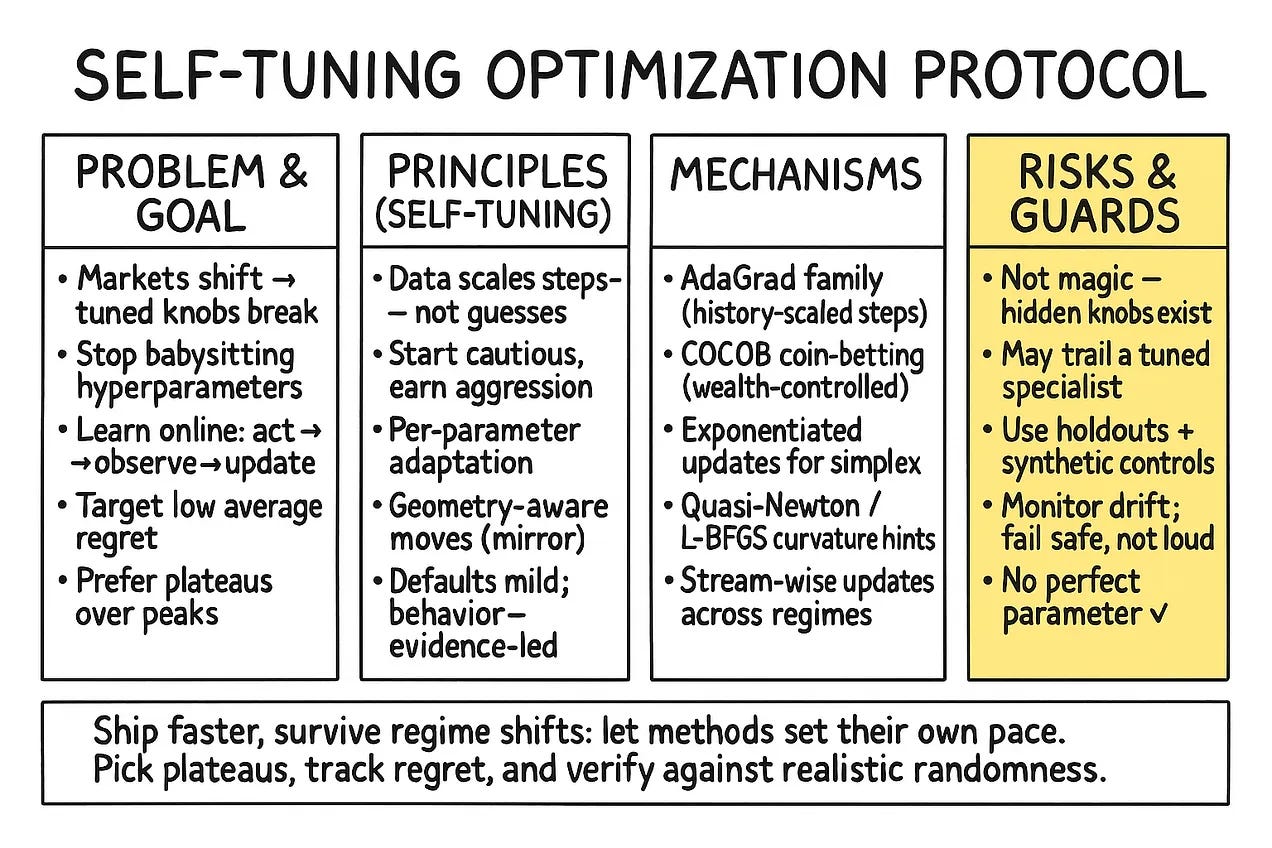

Another important point is that the choice of the mixing rate α places the strategist on a knife’s edge between two opposing risks.

A small α makes the aggregator conservative and slow to adapt. After a sharp, sudden regime break, it will continue to place weight on the old, failing paradigm for too long, leading to protracted drawdowns. Conversely, a large α makes the aggregator hyperactive and jittery. In a stable market regime, it will constantly overreact to noise, interpreting minor fluctuations as regime shifts, leading to excessive turnover and cost drag.

A way to mitigate this is to move beyond a static α. More advanced implementations can feature a time-varying mixing rate. For example, \(\alpha_t\) could be engineered to increase temporarily following a sharp spike in portfolio losses (a potential signal of a regime break) and then gradually decay back to a baseline level during periods of calm. An even more robust approach is a strongly-adaptive meta-aggregator. Here, multiple FixedShareAggregator instances run in parallel, each with a different fixed α (e.g., \(\alpha \in \{0, 1/T, 4/T, 12/T\}\)). A second-level aggregator then dynamically allocates weight to the aggregator that is performing best, effectively learning the optimal adaptation speed online.

The adaptive aggregator is a manager, not a magician. It can only allocate capital among the strategies you provide it. Its performance is fundamentally bounded by the quality and diversity of this expert universe.

This is the “Garbage In, Garbage Out” principle. If the market enters a new regime for which you have no corresponding expert strategy, no amount of intelligent re-weighting can save you. If your universe consists of ten different trend-following strategies, and the market becomes violently mean-reverting, the aggregator will simply pick the least-bad trend-following strategy as it cycles through losses.

If the expert strategies are highly correlated, the benefits of adaptation are severely diminished. The weight updates will cause the portfolio to oscillate between experts that are essentially providing the same information. This can lead to risk concentration: the aggregator collapses its weight into one corner of a highly correlated cluster of experts. The resulting portfolio is far less diversified than the number of experts would suggest.
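One way to detect this concentration problem is to track an effective number of experts. The sketch below shows two simple diagnostics, which are our choice rather than the article's:

- The inverse Herfindahl index of the weights.
- A correlation-adjusted count, \(1/(w^\top C w)\). This assumes all experts have equal volatility, so that C can be taken as their correlation matrix.

```python
import numpy as np


def effective_n_concentration(w):
    """Inverse Herfindahl index: N for uniform weights, 1 when all weight sits on one expert."""
    w = np.asarray(w, dtype=float)
    return float(1.0 / np.sum(w ** 2))


def effective_n_correlation(w, corr):
    """Correlation-adjusted count 1 / (w' C w), assuming equal expert volatilities.
    Equals N for uniform weights on uncorrelated experts, 1 if all are perfectly correlated."""
    w = np.asarray(w, dtype=float)
    return float(1.0 / (w @ np.asarray(corr, dtype=float) @ w))


N, rho = 10, 0.9
corr = (1 - rho) * np.eye(N) + rho * np.ones((N, N))  # ten highly correlated experts
w = np.full(N, 1.0 / N)
print(effective_n_concentration(w), effective_n_correlation(w, corr))
```

Consider uniform weights over ten experts with pairwise correlation 0.9. By the first measure they look like ten positions, but by the second they behave like roughly 1.1 independent bets.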

Finally, a host of risks arise from the gap between the clean mathematics of the algorithm and the finite, messy world of computer hardware and software protocols.

The core update involves exponentiation, which can be numerically unstable. With many experts or very large losses, the values can underflow to zero or overflow to infinity, causing the algorithm to fail. The calculation of dynamic regret via dynamic programming is computationally intensive, making it infeasible for real-time monitoring with a large number of experts or a long history.
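A standard remedy for the numerical issue is to keep the weights in log-space and normalise with log-sum-exp. The FixedShareAggregator below falls back to uniform weights when the normaliser becomes zero or non-finite; working in log-space avoids needing that fallback. The following is a minimal sketch, assuming α in [0, 1) and NumPy's `logaddexp`. The function name is hypothetical.

```python
import numpy as np


def fixed_share_step_log(log_w, losses, eta, alpha):
    """One exp-weights + fixed-share update carried out on log-weights.
    Log-sum-exp normalisation avoids overflow/underflow in exp(-eta * loss)."""
    log_w_tilde = log_w - eta * np.asarray(losses, dtype=float)
    log_w_tilde = log_w_tilde - np.logaddexp.reduce(log_w_tilde)  # normalise
    if alpha == 0.0:
        return log_w_tilde
    N = log_w.size
    # log((1 - alpha) * w_tilde + alpha / N), evaluated without leaving log-space
    return np.logaddexp(np.log1p(-alpha) + log_w_tilde, np.log(alpha / N))


N = 3
log_w = np.full(N, -np.log(N))
losses = np.array([-800.0, 0.0, 800.0])  # extreme losses to force overflow
with np.errstate(over="ignore", invalid="ignore"):
    naive = np.exp(log_w) * np.exp(-losses)  # exp(800) overflows to inf
    naive = naive / naive.sum()              # inf / inf produces nan
stable = np.exp(fixed_share_step_log(log_w, losses, eta=1.0, alpha=0.01))
print("naive:", naive)
print("stable:", stable)
```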

Formalism for failure

To advance beyond this qualitative critique, we must introduce a more rigorous, mathematical language to define and measure performance in this dynamic context. The field of online machine learning provides an exceptionally well-suited concept: regret. Regret quantifies the performance of our chosen algorithm relative to some benchmark, typically an oracle that possesses information unavailable to us at the time of decision-making (such as knowledge of the future).

Let us consider a universe of N distinct trading strategies, which we will refer to as experts. These experts represent our fundamental building blocks: they are our candidate sources of alpha. For example, Expert 1 could be a 50-day moving average crossover system, Expert 2 a statistical arbitrage strategy on a pair of equities, and Expert 3 a volatility-selling strategy. At each time step t (e.g., each trading day), each expert i incurs a loss \(\ell_{i,t}\). For our purposes, it is convenient to define loss as the negative of the logarithmic return: \(\ell_{i,t} = -\log(1 + R_{i,t})\). A lower loss signifies better performance. Our master algorithm, or “aggregator,” must dynamically combine the outputs of these experts to form a portfolio, which in turn incurs a loss \(\ell_t\).

The classical formulation of regret is Static Regret. It is defined as the difference between the cumulative loss of our algorithm and the cumulative loss of the single best-performing expert in hindsight over the entire period T:

\(R_T^{\text{static}} = \sum_{t=1}^{T} \ell_t - \min_{i=1,\ldots,N} \sum_{t=1}^{T} \ell_{i,t}\)

Let’s implement this, because we will use it in the main script:

```python
import numpy as np


def static_regret(loss_agg: np.ndarray, loss_experts: np.ndarray) -> float:
    """
    R_T^static = sum_t loss_agg[t] - min_i sum_t loss_experts[t, i]
    """
    L_agg = float(loss_agg.sum())
    L_i = loss_experts.sum(axis=0)  # cumulative loss of each expert, shape (N,)
    return L_agg - float(np.min(L_i))
```

The objective of many traditional online learning algorithms is to guarantee that this static regret grows sub-linearly with time. This is a strong theoretical guarantee, as it implies that on a per-period basis, the algorithm’s performance converges to that of the hindsight-optimal expert (\(R_T^{\text{static}}/T \to 0\) as \(T \to \infty\)). This sounds highly desirable. Over a sufficiently long horizon, our algorithm will perform nearly as well as if we had possessed a crystal ball on day one, allowing us to select the single best strategy and commit to it for all time.

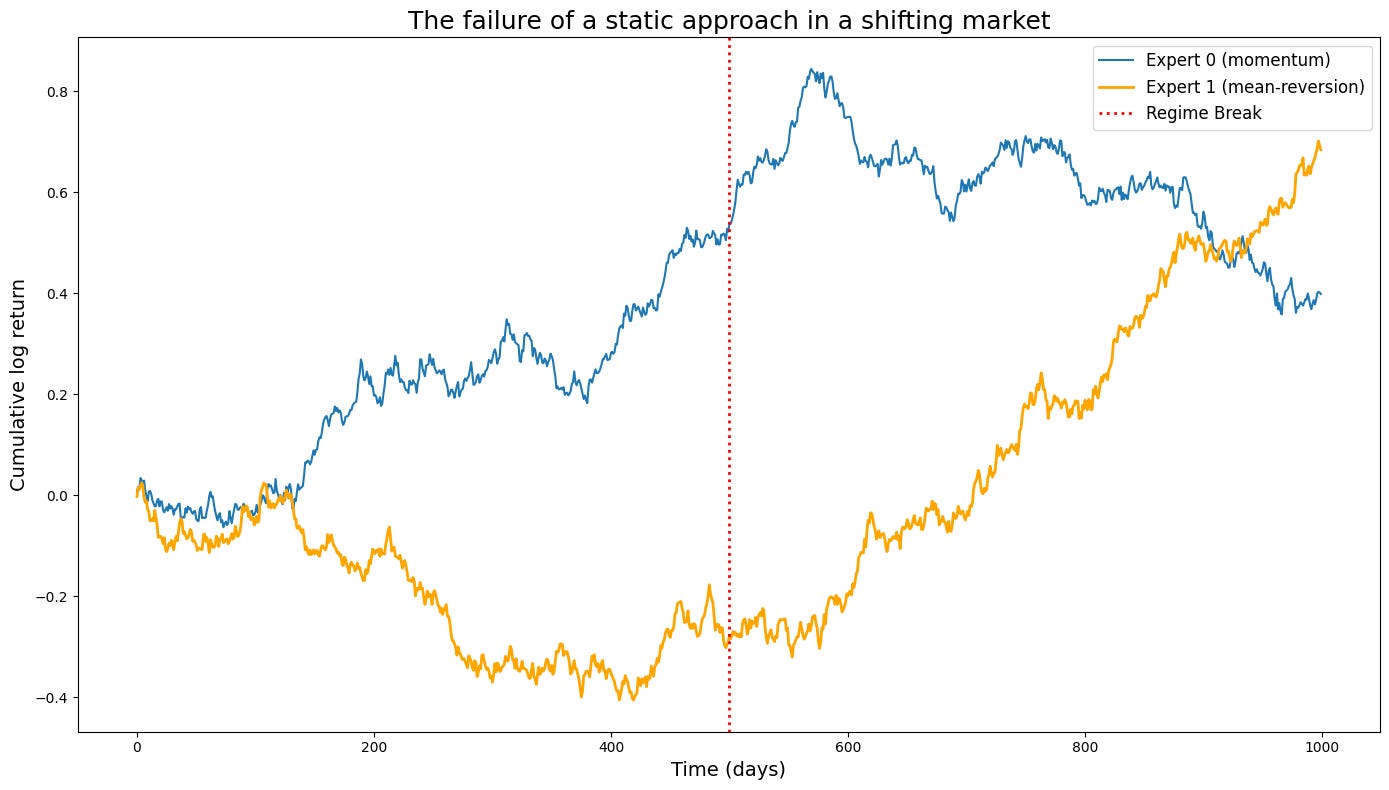

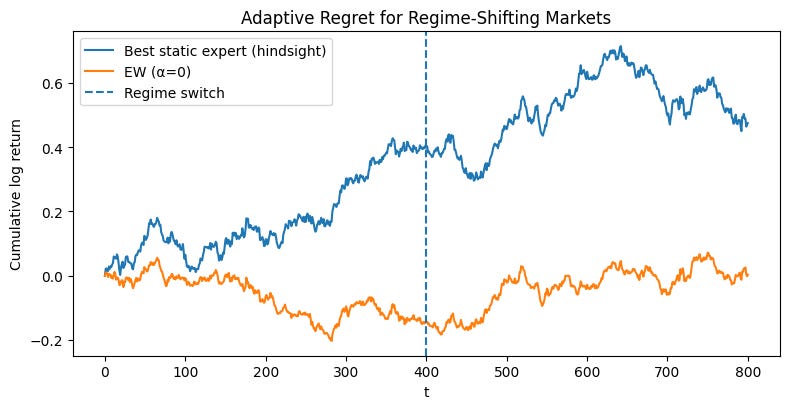

However, in the non-stationary world of financial data, this guarantee offers little practical comfort. The single best expert is a static concept imposed upon a changing market. Let’s construct a simple simulation to demonstrate this catastrophic failure mode in action. We will model two experts: a momentum expert and a mean-reversion expert. The market will operate in a momentum-favoring regime for the first half of the simulation and then abruptly switch to a mean-reversion-favoring regime.
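The article's simulation code is not shown here. The sketch below builds a comparable two-regime market under our own assumptions:

- The market follows an AR(1) return process.
- Its coefficient is +0.4 (trending) for 500 days and -0.3 (mean-reverting) afterwards.
- A momentum expert holds the sign of yesterday's return.
- A mean-reversion expert holds the opposite position.

```python
import numpy as np

rng = np.random.default_rng(7)
T, half, sigma = 1000, 500, 0.01
phi = np.where(np.arange(T) < half, 0.4, -0.3)  # hypothetical AR(1) coefficient per regime

# Market returns: trending (phi > 0) for 500 days, then mean-reverting (phi < 0)
r = np.zeros(T)
for t in range(1, T):
    r[t] = phi[t] * r[t - 1] + sigma * rng.standard_normal()

# Momentum holds sign(previous return); mean-reversion holds the opposite
position = np.sign(np.concatenate(([0.0], r[:-1])))
R = np.column_stack([position * r, -position * r])  # columns: momentum, mean-reversion
losses = -np.log1p(R)                               # loss = -log(1 + R)

best = int(np.argmin(losses.sum(axis=0)))          # single best expert in hindsight
names = ["momentum", "mean-reversion"]
first, second = -losses[:half, best].sum(), -losses[half:, best].sum()
print(f"best in hindsight: {names[best]}")
print(f"log-growth days 1-500: {first:+.3f}, days 501-1000: {second:+.3f}")
```

Because the two experts hold opposite positions, the winner of one regime is the loser of the other. The best expert in hindsight therefore cannot be good in both halves.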

The resulting plot is a stark illustration of the problem. An algorithm optimized to minimize static regret would be judged against the performance of the orange line. Let’s say it successfully tracks this benchmark. What does that mean for the investor? It means the algorithm would have performed brilliantly for 500 days and then proceeded to systematically destroy capital for the next 500 days. The fact that its total static regret is low is cold comfort. The very benchmark we are using—the single best expert in hindsight—is an inappropriate and misleading target in a world that changes. Minimizing static regret forces our algorithm to find a compromise strategy, one that is mediocre on average but potentially catastrophic locally. This is the core analytical obstacle that must be surmounted. We need a better definition of success.

A framework for principled adaptation

The resolution to this dilemma lies not in building a better model, but in fundamentally changing the question we ask of it. Instead of asking, How can my algorithm perform as well as the single best strategy over the entire historical period? we must pivot to a more relevant and actionable question: How can my algorithm perform, at all times, nearly as well as whatever strategy is currently the best? This paradigm shift necessitates a radical overhaul of our mathematical framework and our algorithmic toolkit, moving from a static, global perspective to a dynamic, local one.

To construct a solution, we must first define the problem with precision. This requires the introduction of new performance metrics that explicitly capture the essence of adaptation and penalize failure within any time frame.

Adaptive (or interval) regret: This metric radically reframes the evaluation process. Instead of assessing performance over the entire history from t=1 to T, it examines every possible contiguous sub-interval [r,s] within that history. It measures the maximum regret our algorithm incurs over any such interval. Mathematically, it is defined as:

\(R_{\text{interval}}^{\text{adapt}} = \max_{1 \le r \le s \le T} \left\{ \sum_{t=r}^{s} \ell_t - \min_{i=1,\ldots,N} \sum_{t=r}^{s} \ell_{i,t} \right\}\)

An algorithm that achieves low adaptive regret provides an exceptionally strong performance guarantee. It promises that no matter which window of time an analyst chooses to examine, the algorithm’s performance was nearly as good as that of the best single expert for that specific period. The window might be the last week, the last month, the last quarter, or the tumultuous period surrounding a specific economic event. This is a profound improvement. It means the algorithm cannot conceal a period of disastrous performance behind a long history of prior success. It is held accountable for its performance locally in time, which is precisely what any rational risk manager or investor truly cares about. Consider the previous example, where the benchmark expert bled capital for 500 days. An algorithm with low adaptive regret would not have been permitted to do the same, because its regret over that specific 500-day interval would have been enormous.
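For short histories, the exact maximum over every interval can be computed directly. The sketch below uses prefix sums and runs in O(T²N) time; the function name is ours. The windowed function below approximates this same quantity by checking only a few window lengths.

```python
import numpy as np


def adaptive_regret_exact(loss_agg, loss_experts):
    """Max regret over all contiguous intervals [r, s] with 1 <= r <= s <= T.
    Returns (regret, (r, s)) with 1-based inclusive interval bounds."""
    loss_agg = np.asarray(loss_agg, dtype=float)
    loss_experts = np.asarray(loss_experts, dtype=float)
    T, N = loss_experts.shape
    P_agg = np.concatenate(([0.0], np.cumsum(loss_agg)))                   # (T+1,)
    P_exp = np.vstack((np.zeros((1, N)), np.cumsum(loss_experts, axis=0)))  # (T+1, N)
    worst, worst_interval = -np.inf, None
    for start in range(T):  # intervals covering rows start .. end-1 (0-based)
        agg = P_agg[start + 1:] - P_agg[start]
        best_exp = (P_exp[start + 1:] - P_exp[start]).min(axis=1)
        regret = agg - best_exp
        k = int(np.argmax(regret))
        if regret[k] > worst:
            worst, worst_interval = float(regret[k]), (start + 1, start + k + 1)
    return worst, worst_interval


La = np.zeros(4)
Lx = np.array([[0.0, -1.0], [0.0, -1.0], [0.0, 0.0], [0.0, 0.0]])
print(adaptive_regret_exact(La, Lx))  # → (2.0, (1, 2))
```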

To implement this part we will use:

```python
from typing import Dict, Sequence

import numpy as np


def interval_regret_windows(
    loss_agg: np.ndarray,
    loss_experts: np.ndarray,
    windows: Sequence[int] = (20, 60, 120),
) -> Dict[int, float]:
    """
    Adaptive (interval) regret restricted to fixed window lengths.
    Returns, for each window W, the max over all starting t of:
        sum_{s=t}^{t+W-1} loss_agg[s] - min_i sum_{s=t}^{t+W-1} loss_experts[s, i]
    """
    T, N = loss_experts.shape
    # Prefix sums with a leading zero, so that P[b] - P[a] sums rows a .. b-1
    P_agg = np.concatenate(([0.0], np.cumsum(loss_agg)))
    P_exp = np.vstack((np.zeros((1, N)), np.cumsum(loss_experts, axis=0)))
    out = {}
    for W in windows:
        if W > T:
            out[W] = np.nan  # window longer than the available history
            continue
        worst = -np.inf
        for t in range(0, T - W + 1):
            L_a = P_agg[t + W] - P_agg[t]
            seg = P_exp[t + W] - P_exp[t]  # (N,)
            best_expert_seg = float(np.min(seg))
            regret = L_a - best_expert_seg
            if regret > worst:
                worst = regret
        out[W] = worst
    return out
```

Dynamic (or shifting) regret: This metric elevates the concept one step further. It compares the algorithm’s performance not to a single static expert, nor even to a sequence of interval-best experts, but to a more demanding benchmark. That benchmark is permitted to switch between the available experts up to a fixed number of times. Our benchmark is no longer a single entity but an optimal sequence of experts. If we allow for S switches over the total period T, the dynamic regret is defined as:

\(R_{S,T}^{\text{dyn}} = \sum_{t=1}^{T} \ell_t - \min_{(u_1, \ldots, u_T) \in \{1, \ldots, N\}^T \text{ with } \le S \text{ switches}} \sum_{t=1}^{T} \ell_{u_t, t}\)

Here, the sequence \(u_1, \ldots, u_T\) represents the choice of expert at each time step. The minimization is over all possible sequences that contain at most S changes, i.e., time steps where \(u_t \neq u_{t-1}\). Dynamic regret is perhaps the most intellectually honest metric for measuring adaptive performance. It explicitly acknowledges that the optimal strategy is not fixed. It asks: How did my algorithm perform compared to a clairvoyant competitor who knew the perfect sequence of momentum → mean-reversion and was allowed to make that one switch at the perfect time?

Crucially, as we will see in our implementation, this hypothetical switching benchmark can be (and should be) charged a transaction cost for each switch it makes, creating a truly robust and fair comparison. If our algorithm can achieve low dynamic regret against this formidable, cost-adjusted benchmark, we can possess a high degree of confidence in its genuine adaptive capabilities.

```python
import numpy as np


# Written as a method (first argument `self`): it reads the switching cost from
# self.lam, e.g. on an aggregator object such as the FixedShareAggregator defined later.
def dynamic_regret(
    self,
    loss_agg: np.ndarray,
    loss_experts: np.ndarray,
    S: int,
    include_costs: bool = True,
) -> float:
    """
    Dynamic (shifting) regret vs. the best comparator sequence with up to S switches.

    Returns:
        R_T^dyn(S) = sum_t loss_agg[t]
                     - min_{u sequence, <= S switches} sum_t loss_{t, u_t} [+ switching costs]

    If include_costs=True, the comparator pays 2*lam per switch
    (the L1 distance between two expert one-hot vectors, times lam).
    """
    T, N = loss_experts.shape
    lam = self.lam if include_costs else 0.0
    INF = 1e100
    # DP[t, i, s]: best cumulative comparator loss up to time t, holding expert i,
    # having used exactly s switches
    DP = np.full((T, N, S + 1), INF, dtype=float)
    # Initialise at t = 0 (no switches yet)
    for i in range(N):
        DP[0, i, 0] = loss_experts[0, i]
    # Transitions
    for t in range(1, T):
        for i in range(N):
            li = loss_experts[t, i]
            # Stay with expert i (no new switch)
            for s in range(S + 1):
                DP[t, i, s] = min(DP[t, i, s], DP[t - 1, i, s] + li)
            # Switch from some j != i (pay 2*lam, consume one switch)
            for j in range(N):
                if j == i:
                    continue
                for s in range(1, S + 1):
                    DP[t, i, s] = min(DP[t, i, s], DP[t - 1, j, s - 1] + li + 2.0 * lam)
    best_comp = float(np.min(DP[T - 1]))
    return float(loss_agg.sum()) - best_comp
```

Shifting-experts algorithm

How, then, do we construct an algorithm capable of optimizing these more demanding, more relevant metrics? The solution lies in an algorithm from the online learning literature, a variant of the prediction with expert advice framework known as the Fixed-Share Update. We will encapsulate its logic within a practical Python class, FixedShareAggregator, designed for real-world trading applications.

For more about “prediction with expert advice” check:

The core intuition is to maintain and update a probability distribution over our N experts at each time step t. The distribution is represented by a vector of weights \(w_t \in \mathbb{R}^N\). These weights must be non-negative and sum to one (\(w_t \ge 0\), \(\sum_i w_{i,t} = 1\)). They represent our algorithm’s confidence in each expert’s ability to perform well in the next time step. The portfolio return at time t is then the weighted average of the expert returns: \(R_t = \sum_i w_{i,t} R_{i,t}\). The update from \(w_t\) to \(w_{t+1}\) after observing the losses \(\ell_{i,t}\) occurs in a two-step process.

Step A - Multiplicative loss update: We first adjust the weights based on performance. Experts that incurred high losses see their weights decrease, while those with low losses see their weights increase (or decrease less). This is accomplished via an exponential update rule:

\(w'_{i, t+1} = \frac{ w_{i, t} \exp(-\eta_t \ell_{i, t}) }{ \sum_{j=1}^{N} w_{j, t} \exp(-\eta_t \ell_{j, t}) }\)

This update has several desirable properties. The exponential form heavily penalizes large losses while being relatively forgiving of small ones, making it robust. The term \(\eta_t > 0\) is the learning rate. A high \(\eta_t\) makes the algorithm highly reactive to recent performance, while a low \(\eta_t\) makes it more conservative. A common and theoretically sound choice, which we will use, is a time-varying scale-free rate that automatically anneals over time: \(\eta_t = \sqrt{2 \log N / (t+1)}\). This allows the algorithm to learn quickly at the beginning and become more stable as it accumulates more evidence.

Step B - Mixing step: This is the crucial innovation of the Fixed-Share algorithm. After the loss update, we perform a mixing step. We posit that at any given moment, there is a small probability, α, that the market regime has just changed, rendering all our past observations less relevant. In this event, the best course of action is to reset our beliefs towards a state of maximum uncertainty. We achieve this by mixing our performance-updated weights \(w'_{t+1}\) with a uniform distribution over the experts.

\(w_{i, t+1} = (1 - \alpha) w'_{i, t+1} + \alpha \frac{1}{N}\)

This mixing step acts as a form of regularization or, more intuitively, as an insurance policy against regime shifts. The term \((1-\alpha) w'_{i,t+1}\) represents our belief if the world hasn’t changed. The \(\alpha / N\) term represents our hedge against the possibility that it has. This simple addition guarantees that no expert’s weight ever falls below \(\alpha / N\). Even a strategy that has performed poorly for a very long time retains a small sliver of weight, allowing it to rapidly regain influence if the market regime shifts in its favor.
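The two steps can be sketched in a few lines. This is an illustrative helper, not the class defined below. It applies Step A with the scale-free rate and Step B with a fixed α. It then runs 200 steps in which expert 1 always loses 1.0 more than expert 0.

```python
import numpy as np


def fixed_share_step(w, losses, t, alpha):
    """One Step A + Step B update (illustrative sketch); t is the 0-based step index."""
    N = w.size
    eta_t = np.sqrt(2.0 * np.log(N) / (t + 1))  # scale-free learning rate
    w_prime = w * np.exp(-eta_t * losses)       # Step A: multiplicative loss update
    w_prime /= w_prime.sum()
    return (1.0 - alpha) * w_prime + alpha / N  # Step B: mix toward uniform


w = np.array([0.5, 0.5])
for t in range(200):
    w = fixed_share_step(w, np.array([0.0, 1.0]), t, alpha=0.01)
print(w.round(3))
```

Expert 1's weight shrinks but never drops below α/N = 0.005. That floor is what lets it regain influence quickly if the losses reverse.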

The parameter α is the mixing rate. It directly controls the assumed frequency of regime changes. A higher α makes the algorithm more agile and forgetful. At the other extreme, α = 0 reduces the algorithm to a standard exponentially weighted average portfolio, which is slow to adapt. A sensible setting, which we implement as the default, is one_switch, which resolves \(\alpha = 1/(T-1)\) at fit time. This encodes a prior belief that we expect approximately one regime change over the entire backtest period T, a reasonable and non-aggressive starting point for many financial applications.

Let’s build this part:

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional, Tuple, Union

import numpy as np
from numpy.typing import ArrayLike


# NOTE: FitResult and _clip are not shown in the original code; these are
# minimal hypothetical definitions so that the class below runs as written.
@dataclass
class FitResult:
    equity: np.ndarray           # cumulative net log-wealth (after costs), (T+1,)
    eq_gross: np.ndarray         # cumulative gross log-wealth (before costs), (T+1,)
    weights: np.ndarray          # weight history, (T+1, N)
    costs: np.ndarray            # per-step transaction costs, (T+1,)
    turnover: np.ndarray         # per-step L1 turnover, (T+1,)
    loss_agg: np.ndarray         # aggregator losses, (T,)
    loss_experts: np.ndarray     # (possibly clipped) expert losses, (T, N)
    returns_agg: np.ndarray      # aggregator log-returns, (T,)
    returns_experts: np.ndarray  # expert log-returns, (T, N)
    params: Dict[str, Any] = field(default_factory=dict)


def _clip(x: np.ndarray, lo: Optional[float], hi: Optional[float]) -> np.ndarray:
    """Clip x to [lo, hi]; a None bound is left open."""
    if lo is not None:
        x = np.maximum(x, lo)
    if hi is not None:
        x = np.minimum(x, hi)
    return x


class FixedShareAggregator:
    """
    Exponential Weights with Fixed-Share mixing on the simplex (weights >= 0, sum = 1).

    Online protocol at step t:
      1) EARN with current portfolio w_t on return r_t.
      2) UPDATE using loss_t to get w~_{t+1} (exp-weights), then mix to w_{t+1}.
      3) PAY transaction cost lam * ||w_{t+1} - w_t||_1.

    Update equations:
      w~_{t+1,i} ∝ w_{t,i} * exp(-eta_t * loss_{t,i})
      w_{t+1}    = (1 - alpha) * w~_{t+1} + alpha * (1/N) * 1
    """

    def __init__(
        self,
        eta: Union[str, float] = "scale_free",
        alpha: Union[str, float] = "one_switch",
        lam: float = 0.0,
        trust_band: float = 0.0,
        clip_loss: Optional[Tuple[Optional[float], Optional[float]]] = None,
        init_weights: Optional[np.ndarray] = None,
        random_state: Optional[int] = None,
    ):
        """
        Parameters
        ----------
        eta : "scale_free" or float
            If "scale_free": eta_t = sqrt(2 log N / (t + 1)), t 0-based. If float: constant eta.
        alpha : "one_switch" or float in [0, 1]
            If "one_switch": alpha = 1/(T-1), resolved at fit time. Else fixed float.
        lam : float
            Transaction cost per unit of L1 turnover (both sides aggregated).
        trust_band : float
            If ||w_new - w_old||_1 <= trust_band, skip the rebalance to avoid tiny trades.
        clip_loss : (lo, hi) or None
            Clip losses before updating (robustness to heavy tails).
        init_weights : np.ndarray or None
            Initial weights on the simplex; if None, use uniform.
        """
        self.eta = eta
        self.alpha = alpha
        self.lam = float(lam)
        self.trust_band = float(trust_band)
        self.clip_loss = clip_loss
        self.init_weights = init_weights
        self.random_state = random_state

    def fit(self, returns_experts: ArrayLike, returns_type: str = "log") -> FitResult:
        """
        Online aggregation over T steps.

        Parameters
        ----------
        returns_experts : (T, N) array
            Expert returns, log by default. If arithmetic, set returns_type="arith".
        returns_type : {"log", "arith"}

        Returns
        -------
        FitResult
        """
        R = np.asarray(returns_experts, dtype=float)
        if returns_type not in {"log", "arith"}:
            raise ValueError("returns_type must be 'log' or 'arith'")
        if returns_type == "arith":
            R = np.log1p(R)  # convert arithmetic returns to log-returns
        T, N = R.shape

        losses = -R.copy()  # loss = -log-return
        if self.clip_loss is not None:
            lo, hi = self.clip_loss
            losses = _clip(losses, lo, hi)

        # Resolve alpha
        if isinstance(self.alpha, str):
            if self.alpha.lower() == "one_switch":
                alpha_val = 1.0 / max(1, T - 1)
            else:
                raise ValueError("Unknown alpha specifier")
        else:
            alpha_val = float(self.alpha)
        if not (0.0 <= alpha_val <= 1.0):
            raise ValueError("alpha must be in [0, 1]")

        # Initial weights
        if self.init_weights is None:
            w = np.ones(N) / N
        else:
            w = np.asarray(self.init_weights, dtype=float)
            if w.shape != (N,) or np.any(w < 0) or not np.isclose(w.sum(), 1.0):
                raise ValueError("init_weights must be shape (N,) and on the simplex")

        weights_hist = np.zeros((T + 1, N))
        weights_hist[0] = w
        eq = np.zeros(T + 1)        # net (after costs)
        eq_gross = np.zeros(T + 1)  # gross (before costs)
        costs = np.zeros(T + 1)
        turnover = np.zeros(T + 1)
        loss_agg = np.zeros(T)
        ret_agg = np.zeros(T)

        for t in range(T):
            # --- (1) earn with current portfolio w_t ---
            ret = float(np.dot(w, R[t]))
            ret_agg[t] = ret
            loss_agg[t] = -ret
            eq_gross[t + 1] = eq_gross[t] + ret

            # --- (2) multiplicative update on the observed loss_t ---
            if isinstance(self.eta, str):
                if self.eta.lower() == "scale_free":
                    eta_t = np.sqrt(2.0 * np.log(N) / max(1, t + 1))
                else:
                    raise ValueError("Unknown eta specifier")
            else:
                eta_t = float(self.eta)

            w_tilde = w * np.exp(-eta_t * losses[t])
            s = w_tilde.sum()
            if s <= 0 or not np.isfinite(s):
                w_tilde = np.ones(N) / N  # numerical fallback: reset to uniform
            else:
                w_tilde /= s

            # Fixed-share mix toward uniform
            w_new = (1.0 - alpha_val) * w_tilde + alpha_val * (np.ones(N) / N)

            # Trust band: skip tiny rebalances
            turn = np.abs(w_new - w).sum()
            if turn <= self.trust_band:
                w_new = w.copy()
                cost = 0.0
                turn = 0.0
            else:
                cost = self.lam * turn

            # --- (3) pay cost and move to t+1 ---
            eq[t + 1] = eq[t] + ret - cost
            costs[t + 1] = cost
            turnover[t + 1] = turn
            w = w_new
            weights_hist[t + 1] = w

        return FitResult(
            equity=eq, eq_gross=eq_gross, weights=weights_hist, costs=costs,
            turnover=turnover, loss_agg=loss_agg, loss_experts=losses,
            returns_agg=ret_agg, returns_experts=R,
            params={
                "eta": self.eta, "alpha": self.alpha, "alpha_resolved": alpha_val,
                "lam": self.lam, "trust_band": self.trust_band,
                "clip_loss": self.clip_loss,
            },
        )
```

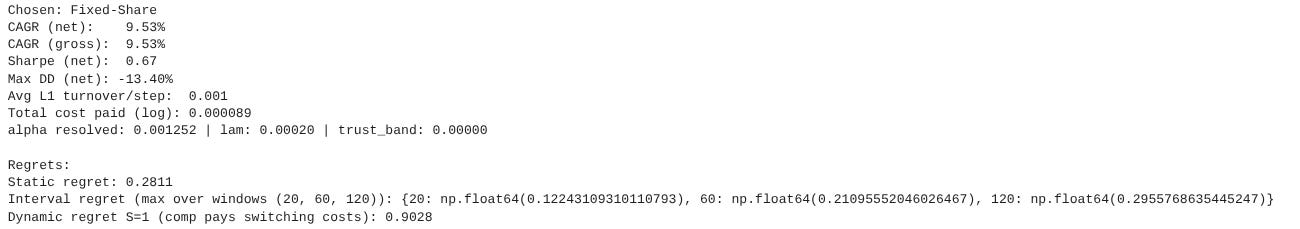

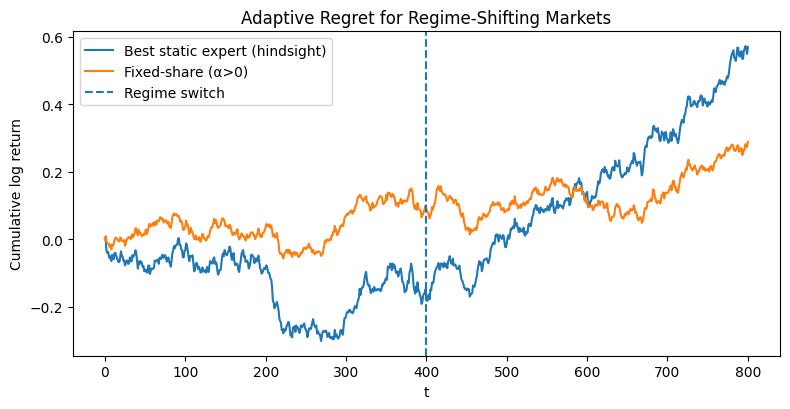

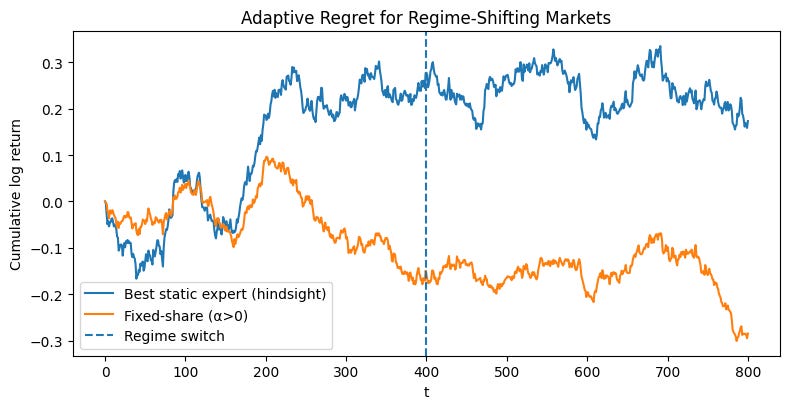

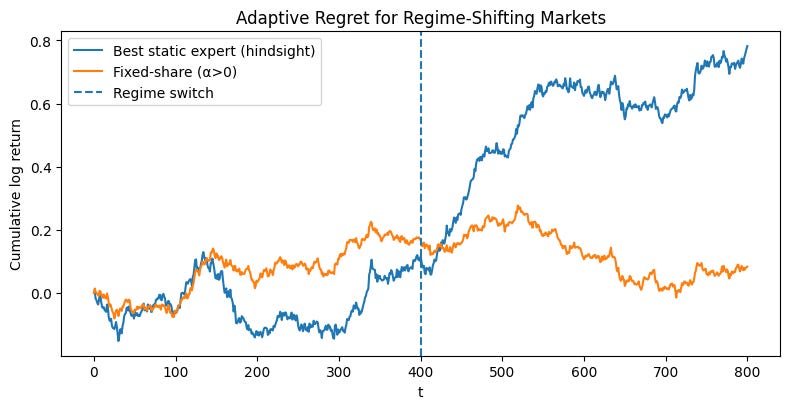

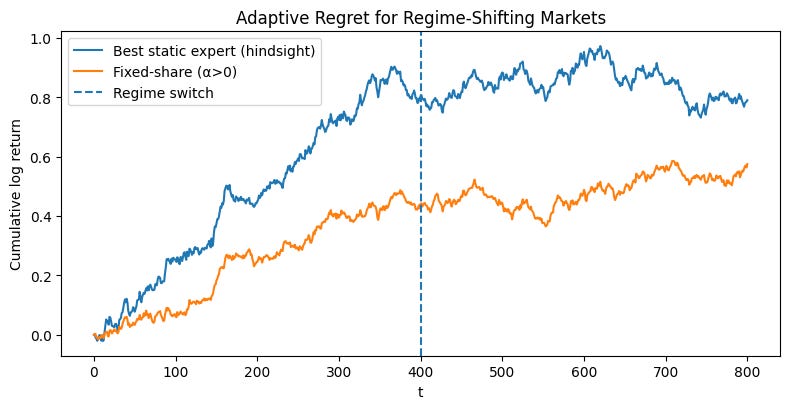

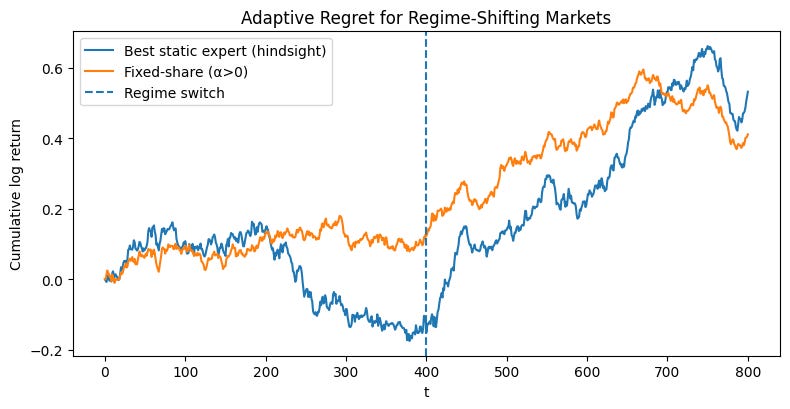

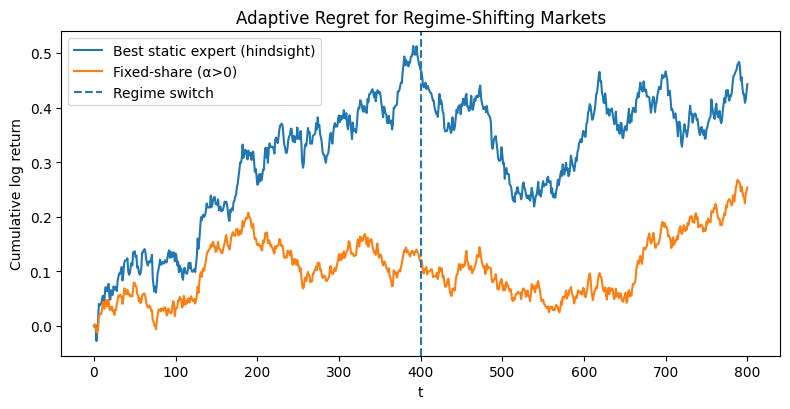

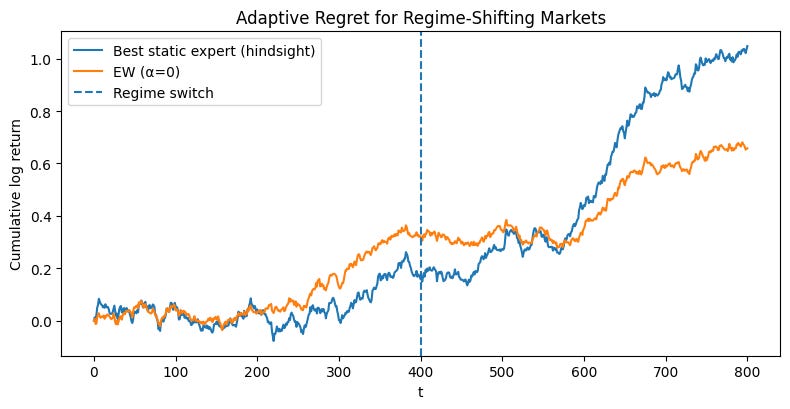

We will now deploy the fully-featured FixedShareAggregator on our two-regime synthetic market. A demo function orchestrates a direct comparison between three approaches:

The adaptive aggregator: a FixedShareAggregator instance with a positive mixing rate (alpha="one_switch") and transaction costs (lam > 0).

The non-adaptive aggregator: an identical aggregator but with alpha=0. This is equivalent to a standard exponentially weighted portfolio and serves as our baseline for slow adaptation.

The static benchmark: the single best-performing expert in hindsight.
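The demo function itself is not shown. The following is a compact sketch of the same three-way comparison under our assumptions:

- A simplified stand-in for FixedShareAggregator.fit: scale-free η, fixed-share mixing, and a cost of lam times L1 turnover, with no trust band or loss clipping.
- Synthetic expert log-returns with a regime break at T/2.
- lam = 0.0005.

The static benchmark pays no costs.

```python
import numpy as np


def fixed_share_net_returns(R, alpha, lam):
    """Hypothetical compact stand-in for FixedShareAggregator.fit.
    R: expert log-returns (T, N). Returns per-step net log-returns (T,)."""
    T, N = R.shape
    w = np.full(N, 1.0 / N)
    net = np.empty(T)
    for t in range(T):
        gross = float(w @ R[t])                         # (1) earn with w_t
        loss = -R[t]                                    # loss = -log-return
        eta = np.sqrt(2.0 * np.log(N) / (t + 1))
        w_new = w * np.exp(-eta * (loss - loss.min()))  # (2) exp-weights update
        w_new = (1.0 - alpha) * w_new / w_new.sum() + alpha / N
        net[t] = gross - lam * np.abs(w_new - w).sum()  # (3) pay turnover cost
        w = w_new
    return net


def demo(R, lam=0.0005):
    """Compare adaptive, non-adaptive and best-in-hindsight on the same returns."""
    T = R.shape[0]
    best = int(np.argmax(R.sum(axis=0)))  # single best expert in hindsight
    return {
        "adaptive (alpha=1/(T-1))": fixed_share_net_returns(R, 1.0 / (T - 1), lam),
        "non-adaptive (alpha=0)": fixed_share_net_returns(R, 0.0, lam),
        f"best expert in hindsight (#{best})": R[:, best],
    }


rng = np.random.default_rng(11)
T = 1000
drift = np.where(np.arange(T) < T // 2, 0.001, -0.001)  # hypothetical break at T/2
R = np.column_stack([drift, -drift]) + 0.01 * rng.standard_normal((T, 2))
for name, r in demo(R).items():
    print(f"{name:35s} total {r.sum():+.3f}  after break {r[T // 2:].sum():+.3f}")
```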

Okay, looks good. Maybe too good. Let’s play with different seeds and see what happens.

As you can see, the results vary considerably with the random seed, that is, with the particular regime path that gets simulated. Remember, this algorithm isn’t designed to maximize performance, but rather to minimize regret. So these results are what you’d expect.

Meta-optimization dilemma

As mentioned in the previous article, parameter-free optimization is not zero-parameter optimization, nor merely fewer-parameter optimization. Remember to check it here:

The FixedShareAggregator itself possesses parameters: the learning rate η, the mixing rate α, the transaction cost λ, and the trust band. Have we simply traded one optimization problem for another?

The short answer is no. The parameters of the underlying experts (e.g., the lookback window of a moving average) are parameters of signal generation. They are tied to the specific statistical properties of a market regime. In contrast, the parameters of the aggregator are meta-parameters of adaptation. They do not define what the signal is, but rather how quickly the system should react to changes in signal quality. They govern the behavior of the risk management and capital allocation overlay, not the alpha models themselves.

Nevertheless, understanding the sensitivity of the system to these meta-parameters is a vital part of practical implementation. The most critical of these is α, the mixing rate, which controls the assumed frequency of regime change. Let’s conduct a sensitivity analysis to see how the final net CAGR of our portfolio changes as we vary α. We will run our simulation multiple times, sweeping α from 0 (no adaptation) to a high value (assuming frequent changes).
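Below is a minimal sketch of such a sweep, as a standalone illustration with a few simplifications. The hypothetical `net_cagr` helper reimplements the fixed-share loop with a constant `eta=10` and `lam=0.0002`. It runs on a single noisy two-regime path seeded with `default_rng(11)`, which is not identical to the Appendix's path. α is swept over 0 plus a log-spaced grid from 1e-4 to 0.3. Because of these choices, it will not reproduce the plot discussed below exactly.

```python
import numpy as np


def net_cagr(R, alpha, eta=10.0, lam=0.0002, periods_per_year=252):
    """Hypothetical helper: annualized net growth of fixed-share on (T, N) log-returns R."""
    T, N = R.shape
    w = np.full(N, 1.0 / N)
    net_log = 0.0
    for t in range(T):
        net_log += float(w @ R[t])                        # earn with current weights
        w_tilde = w * np.exp(eta * R[t])                  # exp(-eta * loss), loss = -R[t]
        w_tilde /= w_tilde.sum()
        w_new = (1.0 - alpha) * w_tilde + alpha / N       # fixed-share mixing
        net_log -= lam * float(np.abs(w_new - w).sum())   # transaction costs
        w = w_new
    return np.exp(net_log * periods_per_year / T) - 1.0   # log growth -> annualized rate


rng = np.random.default_rng(11)
T, switch, sigma = 800, 400, 0.012
R = np.vstack((np.array([0.0010, -0.0005]) + sigma * rng.standard_normal((switch, 2)),
               np.array([-0.0005, 0.0010]) + sigma * rng.standard_normal((T - switch, 2))))

alphas = np.concatenate(([0.0], np.geomspace(1e-4, 0.3, 15)))  # 0 = no adaptation
curve = np.array([net_cagr(R, a) for a in alphas])
for a, c in zip(alphas, curve):
    print(f"alpha={a:.5f}  net CAGR={c:+.2%}")
```

Plotting `curve` against `alphas` on a log x-axis gives the sensitivity curve. The grid is log-spaced because the plausible range of α spans several orders of magnitude.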

The sensitivity plot reveals several critical insights.

The performance at α=0 is significantly suboptimal—this is the cost of doing nothing. This reinforces our primary thesis: in a changing world, a failure to incorporate a mechanism for adaptation is costly.

The curve rises sharply from α=0 and then plateaus, forming a broad peak before slowly declining. This is a highly desirable property. It suggests that while there is an optimal value of α for this specific historical path, the performance is robust across a wide range of reasonable α values. We do not need to pinpoint the exact optimal value to reap most of the benefits of adaptation. Choosing an α that corresponds to a plausible regime-change frequency (e.g., once every few years) is likely to yield near-optimal results.
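Translating a regime-change frequency into α is simple arithmetic under the usual fixed-share reading, which treats α as the per-step probability of a switch. The sketch below uses hypothetical helper names and assumes daily steps with 252 trading days per year.

```python
def alpha_from_regime_frequency(years_between_changes: float, periods_per_year: int = 252) -> float:
    """Hypothetical helper: per-step switch probability for one change every `years_between_changes`."""
    return 1.0 / (years_between_changes * periods_per_year)


def implied_years_between_changes(alpha: float, periods_per_year: int = 252) -> float:
    """Inverse mapping: expected years between regime changes implied by a mixing rate."""
    return 1.0 / (alpha * periods_per_year)


alpha_3y = alpha_from_regime_frequency(3.0)   # one change every ~3 years of daily data
alpha_one_switch = 1.0 / (800 - 1)            # the demo's "one_switch" rule with T = 800
print(f"alpha for one change every 3 years: {alpha_3y:.6f}")
print(f"'one_switch' on T=800 implies one change every "
      f"{implied_years_between_changes(alpha_one_switch):.2f} years")
```

Under this reading, the demo's `"one_switch"` default on T = 800 steps (α = 1/799) corresponds to roughly one regime change every 3.2 trading years.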

As α becomes excessively large, performance begins to degrade. This is because a high mixing rate leads to constant, unnecessary shuffling of the portfolio weights, causing the aggregator to be too forgetful and to pay excessive transaction costs. It starts to overfit to noise, believing every small fluctuation is a new regime.
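The forgetting effect can be isolated in a stationary setting. The sketch below uses a hypothetical helper with a constant `eta=10` and two experts separated by a persistent per-step loss gap of 0.0015, the drift gap of the synthetic market. It iterates the fixed-share update until it settles and reports how much weight the persistently losing expert keeps. The mixing step guarantees that expert a floor of α/N, and its long-run allocation rises with α. A high-α aggregator therefore keeps shuffling capital back to an expert the data keeps rejecting.

```python
import numpy as np


def steady_state_loser_weight(alpha, gap=0.0015, eta=10.0, steps=5_000):
    """Hypothetical helper: iterate the two-expert fixed-share update in a stationary
    regime where expert 1 loses `gap` more per step than expert 0; return expert 1's weight."""
    w = np.array([0.5, 0.5])
    losses = np.array([0.0, gap])  # only the loss gap matters after normalization
    for _ in range(steps):
        w_tilde = w * np.exp(-eta * losses)      # exponential-weights update
        w_tilde /= w_tilde.sum()
        w = (1.0 - alpha) * w_tilde + alpha / 2  # fixed-share mix toward uniform (N = 2)
    return float(w[1])


alphas = [0.001, 0.01, 0.05, 0.2]
loser = [steady_state_loser_weight(a) for a in alphas]
for a, wl in zip(alphas, loser):
    print(f"alpha={a:<5}  long-run weight on losing expert={wl:.3f}  floor alpha/N={a / 2:.4f}")
```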

This analysis demonstrates that while meta-parameters exist, they operate on a different conceptual level. The goal is not to find the single correct α via exhaustive backtesting (which would be a form of overfitting), but to select a sensible value based on a qualitative understanding of market dynamics and to verify that the system’s performance is robust to small variations around that choice.

We’ll continue with more interesting algorithms later. Until then, take care! You’ve done a great job today, team! 🦾

PS: Do you like the topic of these articles? Would you like to explore online ML?


Appendix

Full script:

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Dict, List, Optional, Sequence, Tuple, Union

import matplotlib.pyplot as plt
import numpy as np

ArrayLike = Union[np.ndarray, Sequence[Sequence[float]]]  # anything np.asarray can turn into (T, N)


# Data structures

@dataclass
class FitResult:
    equity: np.ndarray           # (T+1,) cumulative log equity of aggregator (after costs)
    eq_gross: np.ndarray         # (T+1,) cumulative log equity before costs
    weights: np.ndarray          # (T+1, N) weights history (including t=0)
    costs: np.ndarray            # (T+1,) per-step costs paid (0 at t=0)
    turnover: np.ndarray         # (T+1,) L1 turnover (0 at t=0)
    loss_agg: np.ndarray         # (T,) losses of aggregator (excludes costs; costs tracked separately)
    loss_experts: np.ndarray     # (T, N) losses per expert
    returns_agg: np.ndarray      # (T,) aggregator log returns before costs
    returns_experts: np.ndarray  # (T, N) expert log returns
    params: Dict                 # parameters snapshot used


# Helper functions

def _clip(x: np.ndarray, lo: Optional[float], hi: Optional[float]) -> np.ndarray:
    if lo is None and hi is None:
        return x
    if lo is None:
        return np.minimum(x, hi)
    if hi is None:
        return np.maximum(x, lo)
    return np.clip(x, lo, hi)


# Fixed-Share Aggregator class

class FixedShareAggregator:
    """
    Exponential Weights with Fixed-Share mixing on the simplex (weights >= 0, sum = 1).

    Correct online protocol at step t:
      1) EARN with current portfolio w_t on return r_t.
      2) UPDATE using loss_t to get w~_{t+1} (exp-weights) and then mix to w_{t+1}.
      3) PAY transaction cost lam * ||w_{t+1} - w_t||_1.

    Update equations:
      w~_{t+1,i} ∝ w_{t,i} * exp(-eta_t * loss_{t,i})
      w_{t+1}    = (1 - alpha) * w~_{t+1} + alpha * (1/N) * 1
    """

    def __init__(
        self,
        eta: Union[str, float] = "scale_free",
        alpha: Union[str, float] = "one_switch",
        lam: float = 0.0,
        trust_band: float = 0.0,
        clip_loss: Optional[Tuple[Optional[float], Optional[float]]] = None,
        init_weights: Optional[np.ndarray] = None,
        random_state: Optional[int] = None,
    ):
        """
        Parameters
        ----------
        eta : "scale_free" or float
            If "scale_free": eta_t = sqrt(2 log N / t). If float: constant eta.
        alpha : "one_switch" or float in [0, 1]
            If "one_switch": alpha = 1/(T-1), resolved at fit time. Else fixed float.
        lam : float
            Transaction cost per unit L1 turnover (both sides aggregated).
        trust_band : float
            If ||w_new - w_old||_1 <= trust_band, skip the rebalance to avoid tiny trades.
        clip_loss : (lo, hi) or None
            Clip losses before updating (robustness for heavy tails).
        init_weights : np.ndarray or None
            Initial weights on the simplex; if None, use uniform.
        random_state : int or None
            Stored only; the update itself is deterministic.
        """
        self.eta = eta
        self.alpha = alpha
        self.lam = float(lam)
        self.trust_band = float(trust_band)
        self.clip_loss = clip_loss
        self.init_weights = init_weights
        self.random_state = random_state

    def fit(self, returns_experts: ArrayLike, returns_type: str = "log") -> FitResult:
        """
        Online aggregation over T steps.

        Parameters
        ----------
        returns_experts : (T, N) array
            Expert returns, log by default. If arithmetic, set returns_type="arith".
        returns_type : {"log", "arith"}

        Returns
        -------
        FitResult
        """
        R = np.asarray(returns_experts, dtype=float)
        if returns_type not in {"log", "arith"}:
            raise ValueError("returns_type must be 'log' or 'arith'")
        if returns_type == "arith":
            R = np.log1p(R)  # convert safely to log-returns
        T, N = R.shape

        losses = -R.copy()  # loss = -log-return
        if self.clip_loss is not None:
            lo, hi = self.clip_loss
            losses = _clip(losses, lo, hi)

        # resolve alpha
        if isinstance(self.alpha, str):
            if self.alpha.lower() == "one_switch":
                alpha_val = 1.0 / max(1, T - 1)
            else:
                raise ValueError("Unknown alpha specifier")
        else:
            alpha_val = float(self.alpha)
        if not (0.0 <= alpha_val <= 1.0):
            raise ValueError("alpha must be in [0,1]")

        # init weights
        if self.init_weights is None:
            w = np.ones(N) / N
        else:
            w = np.asarray(self.init_weights, dtype=float)
            if w.shape != (N,) or np.any(w < 0) or not np.isclose(w.sum(), 1.0):
                raise ValueError("init_weights must be shape (N,), on simplex")

        weights_hist = np.zeros((T + 1, N))
        weights_hist[0] = w
        eq = np.zeros(T + 1)        # net (after costs)
        eq_gross = np.zeros(T + 1)  # gross (before costs)
        costs = np.zeros(T + 1)
        turnover = np.zeros(T + 1)
        loss_agg = np.zeros(T)
        ret_agg = np.zeros(T)

        for t in range(T):
            # --- (1) earn with current portfolio w_t ---
            # Weighted sum of expert log-returns: approximates the portfolio log-return
            # (a lower bound by Jensen's inequality), consistent with the loss definition.
            ret = float(np.dot(w, R[t]))
            ret_agg[t] = ret
            loss_agg[t] = -ret
            eq_gross[t + 1] = eq_gross[t] + ret

            # --- (2) compute multiplicative update on observed loss_t ---
            if isinstance(self.eta, str):
                if self.eta.lower() == "scale_free":
                    eta_t = np.sqrt(2.0 * np.log(N) / max(1, t + 1))
                else:
                    raise ValueError("Unknown eta specifier")
            else:
                eta_t = float(self.eta)

            w_tilde = w * np.exp(-eta_t * losses[t])
            s = w_tilde.sum()
            if s <= 0 or not np.isfinite(s):
                w_tilde = np.ones(N) / N  # numerical fallback: reset to uniform
            else:
                w_tilde /= s

            # fixed-share mix toward the uniform portfolio
            w_new = (1.0 - alpha_val) * w_tilde + alpha_val * (np.ones(N) / N)

            # trust band (skip tiny turnover)
            turn = np.abs(w_new - w).sum()
            if turn <= self.trust_band:
                w_new = w.copy()
                cost = 0.0
                turn = 0.0
            else:
                cost = self.lam * turn

            # --- (3) pay cost and move to t+1 ---
            eq[t + 1] = eq[t] + ret - cost
            costs[t + 1] = cost
            turnover[t + 1] = turn
            w = w_new
            weights_hist[t + 1] = w

        return FitResult(
            equity=eq,
            eq_gross=eq_gross,
            weights=weights_hist,
            costs=costs,
            turnover=turnover,
            loss_agg=loss_agg,
            loss_experts=losses,
            returns_agg=ret_agg,
            returns_experts=R,
            params={
                "eta": self.eta,
                "alpha": self.alpha,
                "alpha_resolved": alpha_val,
                "lam": self.lam,
                "trust_band": self.trust_band,
                "clip_loss": self.clip_loss,
            },
        )

    # Regret metrics

    @staticmethod
    def static_regret(loss_agg: np.ndarray, loss_experts: np.ndarray) -> float:
        """
        R_T^static = sum_t loss_agg[t] - min_i sum_t loss_experts[t, i]
        """
        L_agg = float(loss_agg.sum())
        L_i = loss_experts.sum(axis=0)  # (N,)
        return L_agg - float(np.min(L_i))

    @staticmethod
    def interval_regret_windows(
        loss_agg: np.ndarray,
        loss_experts: np.ndarray,
        windows: Sequence[int] = (20, 60, 120),
    ) -> Dict[int, float]:
        """
        Adaptive (interval) regret restricted to fixed window lengths.
        Returns, for each window length W, the max over all starting t of:
            sum_{s=t}^{t+W-1} loss_agg[s] - min_i sum_{s=t}^{t+W-1} loss_experts[s, i]
        """
        T, N = loss_experts.shape
        P_agg = np.concatenate(([0.0], np.cumsum(loss_agg)))
        P_exp = np.vstack((np.zeros((1, N)), np.cumsum(loss_experts, axis=0)))
        out = {}
        for W in windows:
            if W > T:
                out[W] = np.nan
                continue
            worst = -np.inf
            for t in range(0, T - W + 1):
                L_a = P_agg[t + W] - P_agg[t]
                seg = P_exp[t + W] - P_exp[t]  # (N,)
                best_expert_seg = float(np.min(seg))
                regret = L_a - best_expert_seg
                if regret > worst:
                    worst = regret
            out[W] = worst
        return out

    @staticmethod
    def full_interval_regret(loss_agg: np.ndarray, loss_experts: np.ndarray) -> float:
        """
        Full adaptive regret: max over all intervals [r, s].
        O(T^2 * N); use with care when T is large.
        """
        T, N = loss_experts.shape
        P_agg = np.concatenate(([0.0], np.cumsum(loss_agg)))
        P_exp = np.vstack((np.zeros((1, N)), np.cumsum(loss_experts, axis=0)))
        worst = -np.inf
        for r in range(T):
            for s in range(r, T):
                L_a = P_agg[s + 1] - P_agg[r]
                seg = P_exp[s + 1] - P_exp[r]
                regret = L_a - float(seg.min())
                if regret > worst:
                    worst = regret
        return worst

    def dynamic_regret(
        self,
        loss_agg: np.ndarray,
        loss_experts: np.ndarray,
        S: int,
        include_costs: bool = True,
    ) -> float:
        """
        Dynamic (shifting) regret vs the best comparator sequence with up to S switches.

        Returns:
            R_T^dyn(S) = sum_t loss_agg[t] - min_{u sequence, <= S switches} sum_t loss_{t, u_t} [+ switching_costs]

        If include_costs=True, the comparator pays 2*lam per switch (L1 distance between
        expert one-hots); pass a loss_agg that includes the aggregator's own costs for a fair comparison.
        """
        T, N = loss_experts.shape
        lam = self.lam if include_costs else 0.0
        INF = 1e100
        DP = np.full((T, N, S + 1), INF, dtype=float)  # DP[t, i, s]: best loss ending on i with s switches

        # init at t=0
        for i in range(N):
            DP[0, i, 0] = loss_experts[0, i]

        # transitions
        for t in range(1, T):
            for i in range(N):
                li = loss_experts[t, i]
                # stay with i (no new switch)
                for s in range(S + 1):
                    DP[t, i, s] = min(DP[t, i, s], DP[t - 1, i, s] + li)
                # switch from j != i (pay 2*lam)
                for j in range(N):
                    if j == i:
                        continue
                    for s in range(1, S + 1):
                        DP[t, i, s] = min(DP[t, i, s], DP[t - 1, j, s - 1] + li + 2.0 * lam)

        best_comp = float(np.min(DP[T - 1]))
        return float(loss_agg.sum()) - best_comp


# Utilities

def metrics_summary(res: FitResult) -> str:
    """Quick textual summary of performance metrics."""
    eq = res.equity
    eq_gross = res.eq_gross
    rets = np.diff(eq)  # net (after costs)
    rets_gross = np.diff(eq_gross)

    def annualize(x):
        T = len(x)
        if T == 0:
            return np.nan
        return np.exp(np.sum(x) * 252 / T) - 1.0

    def sharpe(x):
        sd = x.std()
        if sd == 0:
            return np.nan
        return x.mean() / sd * np.sqrt(252)

    # drawdown on net equity
    cum = np.exp(eq)  # convert log-equity to level
    peak = np.maximum.accumulate(cum)
    dd = (cum / peak) - 1.0
    max_dd = dd.min()
    avg_turnover = res.turnover[1:].mean()  # exclude the t=0 placeholder

    return (
        f"CAGR (net): {annualize(rets):6.2%}\n"
        f"CAGR (gross): {annualize(rets_gross):6.2%}\n"
        f"Sharpe (net): {sharpe(rets):5.2f}\n"
        f"Max DD (net): {max_dd:6.2%}\n"
        f"Avg L1 turnover/step: {avg_turnover:6.3f}\n"
        f"Total cost paid (log): {res.costs.sum():.6f}\n"
        f"alpha resolved: {res.params['alpha_resolved']:.6f} | lam: {res.params['lam']:.5f} | "
        f"trust_band: {res.params['trust_band']:.5f}"
    )


# Demo

def demo(seed: int = 11, plot: bool = True, choose: str = "auto",
         ir_windows=(20, 60, 120), eq_tol=1e-4, ir_tol=1e-3):
    """
    Synthetic two-regime demo.

    choose:
      - "auto": plot only the better of fixed-share vs EW
      - "fs": force fixed-share
      - "ew": force EW
    The auto rule prefers EW if differences are below tolerances.
    """
    np.random.seed(seed)
    T = 800
    N = 2
    sigma = 0.012
    switch = T // 2
    mu1 = np.array([0.0010, -0.0005])  # expert 0 wins early
    mu2 = np.array([-0.0005, 0.0010])  # expert 1 wins late
    r = np.zeros((T, N))
    r[:switch] = mu1 + sigma * np.random.randn(switch, N)
    r[switch:] = mu2 + sigma * np.random.randn(T - switch, N)

    # --- train both variants ---
    fs = FixedShareAggregator(eta="scale_free", alpha="one_switch", lam=0.0002, trust_band=0.0)
    ew = FixedShareAggregator(eta="scale_free", alpha=0.0, lam=0.0002, trust_band=0.0)
    res_fs = fs.fit(r)
    res_ew = ew.fit(r)

    # --- pick which to display ---
    if choose == "auto":
        # interval-regret improvement (lower regret is better)
        ir_fs = FixedShareAggregator.interval_regret_windows(res_fs.loss_agg, res_fs.loss_experts, list(ir_windows))
        ir_ew = FixedShareAggregator.interval_regret_windows(res_ew.loss_agg, res_ew.loss_experts, list(ir_windows))
        ir_improvement = max(ir_ew[w] - ir_fs[w] for w in ir_windows)  # positive => FS better
        eq_diff = res_fs.equity[-1] - res_ew.equity[-1]                # positive => FS better
        if (eq_diff > eq_tol) or (ir_improvement > ir_tol):
            choice, res = "fs", res_fs
        else:
            choice, res = "ew", res_ew
    elif choose == "fs":
        choice, res = "fs", res_fs
    else:
        choice, res = "ew", res_ew

    # --- best static expert (hindsight) for reference ---
    cum_exp = r.cumsum(axis=0)
    best = int(np.argmax(cum_exp[-1]))
    eq_best = np.concatenate(([0.0], r[:, best].cumsum()))

    # --- report ---
    print(f"Chosen: {('Fixed-Share' if choice == 'fs' else 'EW (alpha=0)')}")
    print(metrics_summary(res))

    # also show regret diagnostics for transparency
    R_static = FixedShareAggregator.static_regret(res.loss_agg, res.loss_experts)
    IR = FixedShareAggregator.interval_regret_windows(res.loss_agg, res.loss_experts, list(ir_windows))
    # The comparator pays switching costs, so charge the aggregator its own costs too
    # (costs[t+1] is the cost paid at step t).
    loss_net = res.loss_agg + res.costs[1:]
    DR1 = (fs if choice == "fs" else ew).dynamic_regret(loss_net, res.loss_experts, S=1, include_costs=True)
    print("\nRegrets:")
    print(f"Static regret: {R_static:.4f}")
    print(f"Interval regret (max over windows {ir_windows}): {IR}")
    print(f"Dynamic regret S=1 (both sides pay switching costs): {DR1:.4f}")

    # --- plot (only one aggregator) ---
    if plot and plt is not None:
        plt.figure(figsize=(8, 4.2))
        plt.plot(eq_best, label="Best static expert (hindsight)")
        lbl = "Fixed-share (α>0)" if choice == "fs" else "EW (α=0)"
        plt.plot(res.equity, label=lbl)
        plt.axvline(switch, linestyle="--", label="Regime switch")
        plt.title("Adaptive Regret for Regime-Shifting Markets")
        plt.xlabel("t")
        plt.ylabel("Cumulative log return")
        plt.legend()
        plt.tight_layout()
        plt.show()

    return {
        "chosen": choice,
        "equity_final": res.equity[-1],
        "interval_regret": IR,
        "dynamic_regret_S1_costs": DR1,
    }


if __name__ == "__main__":
    demo(plot=True)
```
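The script's regret metrics make the article's opening point easy to check on a toy case. The sketch below uses standalone copies of `static_regret` and `full_interval_regret`, written as free functions for brevity. It applies them to a hand-built loss matrix with two regimes of four steps each, where the experts swap roles at the switch. The aggregator simply holds 50/50 throughout.

```python
import numpy as np


def static_regret(loss_agg, loss_experts):
    """Aggregator loss minus the loss of the best single expert over the whole horizon."""
    return float(loss_agg.sum() - loss_experts.sum(axis=0).min())


def full_interval_regret(loss_agg, loss_experts):
    """Max over all intervals [r, s] of aggregator loss minus best single expert loss on it."""
    T, N = loss_experts.shape
    P_agg = np.concatenate(([0.0], np.cumsum(loss_agg)))
    P_exp = np.vstack((np.zeros((1, N)), np.cumsum(loss_experts, axis=0)))
    worst = -np.inf
    for r in range(T):
        for s in range(r, T):
            seg_best = float((P_exp[s + 1] - P_exp[r]).min())
            worst = max(worst, float(P_agg[s + 1] - P_agg[r]) - seg_best)
    return float(worst)


# Two regimes of 4 steps: expert 0 wins first, expert 1 wins after the switch.
loss_experts = np.array([[-1.0, 1.0]] * 4 + [[1.0, -1.0]] * 4)
loss_agg = loss_experts.mean(axis=1)  # a "do-nothing" 50/50 allocation: loss 0 every step

print("static regret:  ", static_regret(loss_agg, loss_experts))
print("interval regret:", full_interval_regret(loss_agg, loss_experts))
```

Static regret is zero, because over the whole horizon the 50/50 allocation matches the best single expert. Interval regret, however, is 4: inside either regime, the allocation trails the locally best expert by one unit per step. That gap is exactly what "looking good on average" hides.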

![[WITH CODE] Optimization: Parameter-free opt.](https://substackcdn.com/image/fetch/$s_!AJt2!,w_140,h_140,c_fill,f_auto,q_auto:good,fl_progressive:steep,g_auto/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F5cedd76e-1949-481c-a904-be1a249336c5_1280x1280.png)