Table of contents:

Introduction.

The conflict between research and production.

Micro-machines architecture.

Design point 1: Cache-line.

Design point 2: Coherence engineering.

Design point 3: Topology-aware state.

Design point 4: Page-level engineering.

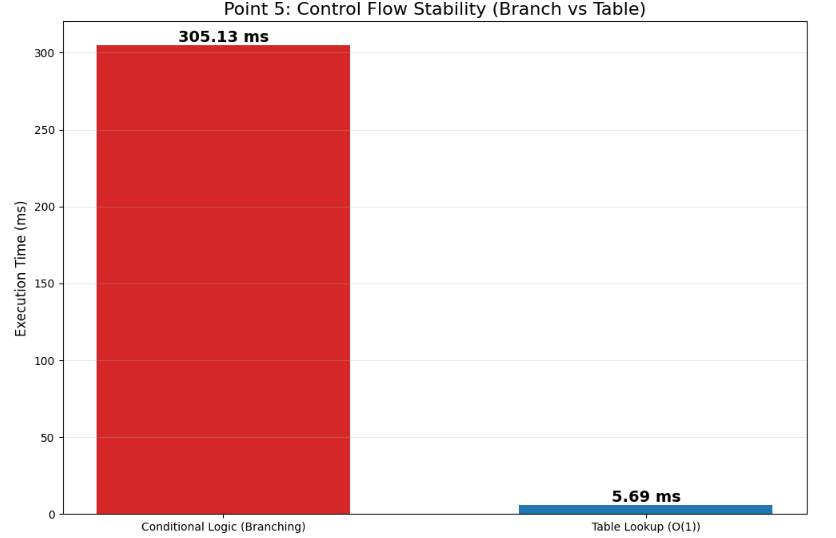

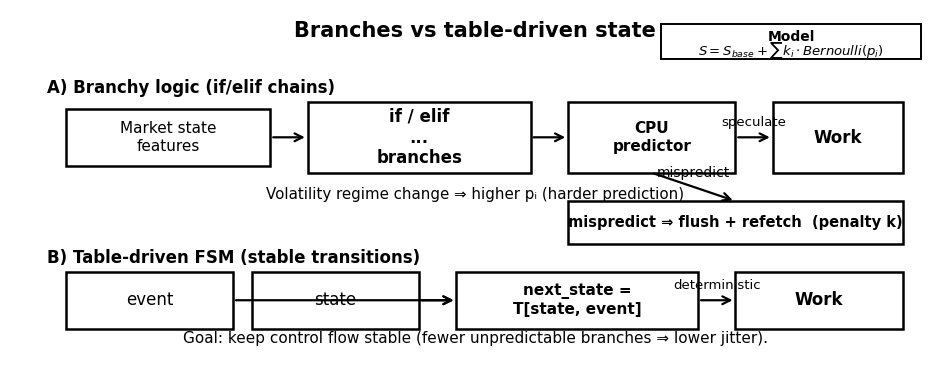

Design point 5: Control-flow predictability.

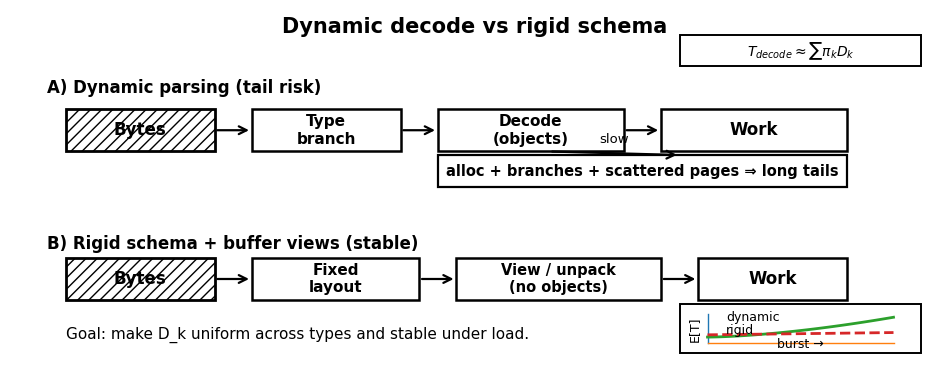

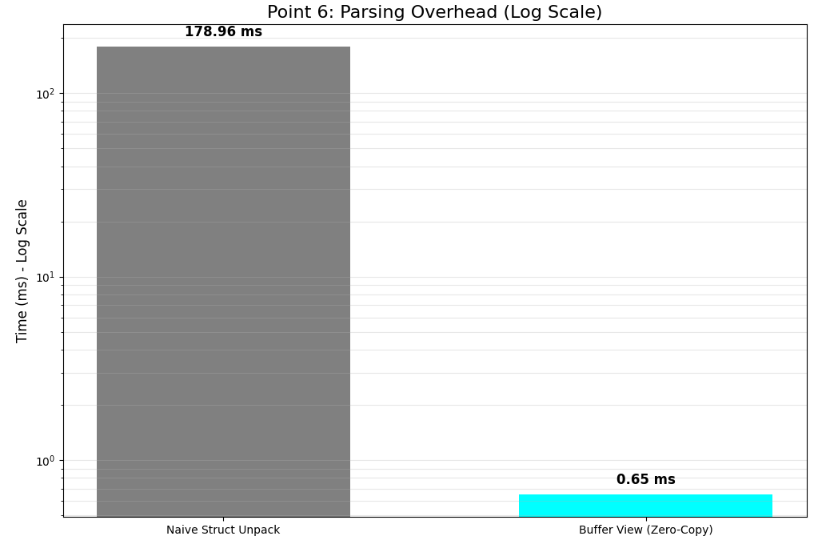

Design point 6: Wire-format and parsing structures.

Design point 7: Time and numeric representation.


Introduction

Most performance debates in trading start at the wrong layer. Quants argue about languages, compilers, and fast code, as if latency were a property of instructions. In production, latency is a property of structure: how state is laid out, how it is shared, how often the machine is forced to translate addresses, and how predictable the control flow remains. The micro-pauses that ruin fills are concrete events.

The real conflict is that research optimizes for average throughput, while production lives under the question: did the system make the decision before the market boundary moved?

That boundary compresses exactly when volatility rises—precisely when your edge is largest and the penalty for being late is most asymmetric. This is why mean latency is a comforting metric and a dangerous one: the tail determines whether cancels land, hedges actually hedge, and risk gates engage on time.

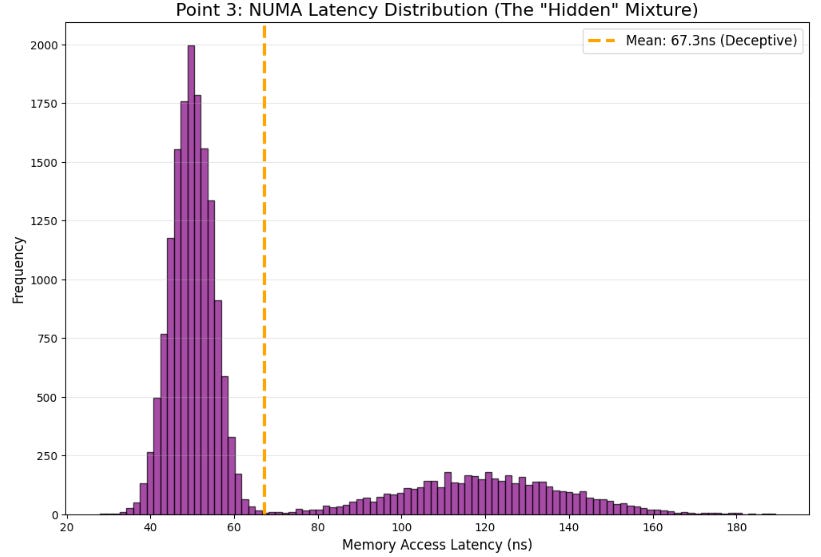

Most systems fail here because their data structures are designed for convenience, not for invariants. Pointer-heavy graphs, heterogeneous containers, dynamic dispatch, and ad-hoc parsing become regime-dependent under load. The result is a bimodal latency distribution: stable in calm sessions, unstable when message rates spike.

The solution is to design micro-machines architectures: treat the CPU as a collection of finite budgets rather than a generic processor. There is a cache-line budget (useful bytes per fetch), a coherence budget (how often lines can bounce between cores), a translation budget (distinct pages touched per unit time), and a prediction budget (branch stability). When you exceed these budgets, the penalties arrive as discontinuities that dominate p99 behavior, not as smooth slowdowns that averages can summarize.


The design points that follow are stability constraints: engineer cache-line layouts to keep hot fields hot, engineer coherence to prevent false sharing and line bouncing, make state topology-aware to avoid NUMA randomness, engineer at the page level to keep translation predictable, stabilize control flow with table-driven state transitions, parse with rigid wire formats that don’t allocate or branch unpredictably, and use deterministic time and numeric representations to eliminate threshold drift. The target is a tighter distribution so the engine you deploy is the engine you validated.

The conflict between research and production

In the research phase, a pipeline is evaluated primarily by mathematical correctness and average throughput. We treat the compute substrate as deterministic. But in live production, the objective function collapses to a binary state: did you express the decision before the market boundary shifted?

This boundary is not static. It is a dynamic variable that compresses aggressively during volatility bursts—precisely the regime where your edge is highest. The dilemma is effectively a race against a shrinking liquidity window.

Most traders accept this premise theoretically, yet define their systems with convenient structures—pointer-heavy graphs, dynamic dispatch, and heterogeneous containers—that practically guarantee failure. The result is a system with a bimodal latency distribution.

In quiet regimes such as the Asian session, the workflow fits within the window. During the London session, or when it overlaps with the American session, state consistency degrades, branch predictors mispredict, the CPU stalls on page walks, and cache misses pile up. Sometimes you even experience micro-pauses that disappear in the average statistics but show up as missed fills.

This is not due to a problem with the code or the interpreter, but rather a structural mismatch between the data design and the hardware you are using.

As you already know, a live trading model is a composition of a signal and a timing apparatus. If the timing apparatus behaves stochastically under load, the realized model differs from the validated one. This divergence manifests in four specific failure modes:

State incoherence under concurrency means risk flags or inventory states may be consistent within one thread but stale in another at the moment of decision.

Service time variance explodes as the compute work per message becomes regime-dependent due to cache misses or coherence traffic.

Latency amplification occurs where small increases in mean service time produce non-linear increases in p99 latency as utilization approaches saturation.

Numerical representation drift in float-heavy state machines creates rounding artifacts that lead to inconsistent thresholds around cancel/replace boundaries.
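The latency amplification effect can be made concrete with the simplest queueing model. The sketch below assumes an M/M/1 queue (Poisson arrivals, exponential service, first-come first-served), which is an idealization and not a description of any real feed handler. Under that assumption the time in system is exponentially distributed with rate μ − λ, so the p99 has a closed form. The function name and the 10 µs mean service time are illustrative choices.

```python
import math

def mm1_p99_sojourn_us(mean_service_us: float, utilization: float) -> float:
    """p99 of time in system for an M/M/1 queue, in microseconds."""
    if not 0.0 <= utilization < 1.0:
        raise ValueError("utilization must be in [0, 1)")
    mu = 1.0 / mean_service_us            # service rate (messages per us)
    lam = utilization * mu                # arrival rate (messages per us)
    # Time in system is Exp(mu - lam); its 99th percentile is ln(100) / rate.
    return math.log(100.0) / (mu - lam)

for rho in (0.50, 0.80, 0.90, 0.95, 0.99):
    print(f"rho={rho:.2f}  p99={mm1_p99_sojourn_us(10.0, rho):9.1f} us")
```

Because the p99 scales with 1 / (1 − ρ) in this model, moving from 90% to 95% utilization doubles it even though the mean service time is unchanged.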

These issues are not resolved by faster code but by selecting data layouts that behave predictably on specific hardware.

The failure that necessitates this architectural shift is rarely a crazy outage but a repeating small incident. For example, a hedge engine receives a burst and computes a cancel/replace based on a risk condition that was true when reading one field but false when reading another. The system sends the wrong intent, and the market punishes the strategy via adverse selection. While post-mortems often debate signals or slippage models, the conflict is that the internal data structure did not provide atomicity at the semantic level required by the strategy. The alpha survived, the code executed, but the structure of state rendered the decision non-deterministic.
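One way to obtain atomicity at the semantic level is to publish related fields together as a single immutable snapshot, so a decision never mixes fields from two different updates. The sketch below is a minimal illustration that assumes a single writer. `RiskSnapshot`, `RiskState`, and the field names are hypothetical, and the approach relies on the runtime rebinding one reference in a single step, which holds for CPython attribute assignment but should be re-checked elsewhere.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class RiskSnapshot:
    version: int
    inventory: int
    risk_limit: int

class RiskState:
    """Single-writer state: readers always see one complete snapshot."""

    def __init__(self) -> None:
        self._snap = RiskSnapshot(version=0, inventory=0, risk_limit=100)

    def publish(self, inventory: int, risk_limit: int) -> None:
        old = self._snap
        # Build the complete new snapshot first, then swap one reference.
        self._snap = RiskSnapshot(old.version + 1, inventory, risk_limit)

    def read(self) -> RiskSnapshot:
        return self._snap

state = RiskState()
state.publish(inventory=40, risk_limit=100)

snap = state.read()                          # take one reference
can_add = snap.inventory + 10 <= snap.risk_limit

state.publish(inventory=95, risk_limit=100)  # a later update
# `snap` is frozen, so the decision above used one consistent version.
print(snap.version, can_add, state.read().inventory)
```

The reader pays one extra indirection, and in exchange both fields it compares are guaranteed to come from the same update.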

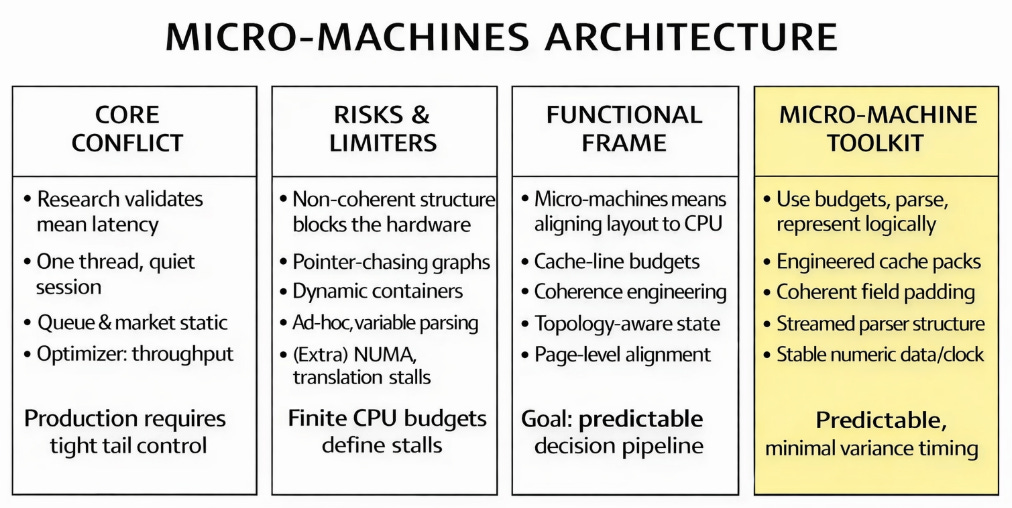

Micro-machines architecture

The next step in latency engineering is accepting that the microarchitecture often behaves adversarially because data layouts provide it no alternative. A senior quant must view the CPU as a collection of finite budgets rather than a generic processor. These include the cache line budget (managed at 64-byte granularity), the coherence budget (the frequency of forced invalidations), the translation budget (distinct pages touched per unit time), and the prediction budget (stability of branches). These budgets are concrete; when exceeded, they manifest as measurable stalls.

Addressing these budgets involves overcoming practical obstacles:

Averages deceive: a 10% improvement in mean compute time is irrelevant if the tail is driven by coherence or translation events.

Concurrency changes everything: a single-core benchmark that performs well can become unstable when the pipeline is split across cores.

State is not scalar: it is a set of fields that must be read and written with a semantic consistency boundary.

Finally, the dynamic nature of Python, while convenient, poses a significant danger in the hot path. The challenge is to build structures that enforce predictability even when the flow rate changes abruptly.

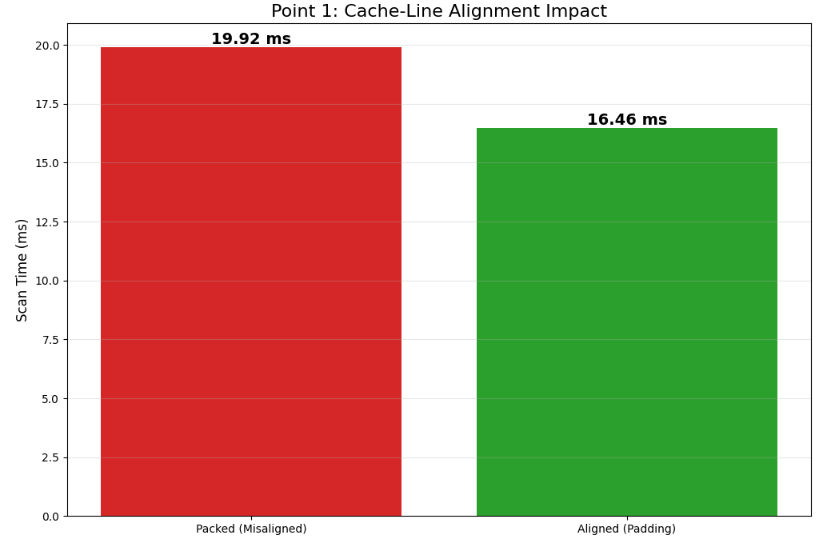

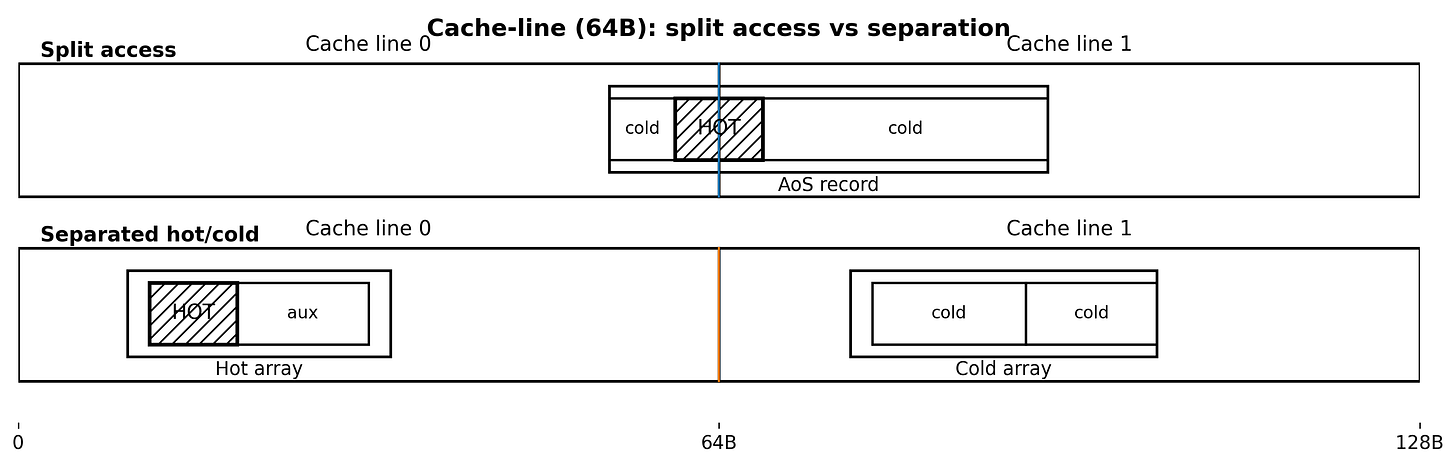

Design point 1: Cache-line

A common misconception is that contiguous memory guarantees speed. While contiguity is necessary, it is insufficient. If fields straddle cache lines or structures force split loads, the system can remain contiguous yet suffer unpredictable behavior. In trading, this risk is acute because order state is accessed under stress. If a hot field, such as best_bid_px, shares a cache line with a cold field, like client_tag, accessing the cold data drags the line into the cache, evicting necessary data and creating churn that manifests as jitter.

Cache lines are fetched as a unit, typically 64 bytes. Two mechanisms degrade performance: split accesses, where a load crosses a line boundary and requires two fills, and line pollution, where cold fields waste cache capacity. We model the cost of reading a field x as:

$$C(x) = C_0 + c_{\text{miss}}\, I(x) + c_{\text{split}}\, J(x)$$

Here, $I(x)$ indicates a cache miss and $J(x)$ indicates a split access, with $c_{\text{miss}}$ and $c_{\text{split}}$ denoting the respective penalties. The variance of $C(x)$ is driven by the Bernoulli events $I(x)$ and $J(x)$ rather than the baseline cost $C_0$. The engineering objective is to drive $P(I=1)$ and $P(J=1)$ toward zero in the hot path.

One cannot force L1 residency from Python, but one can enforce alignment and separation. The first step is an alignment audit to ensure arrays begin at stable offsets relative to cache lines.

    import numpy as np

    CACHELINE = 64

    def addr_mod_cacheline(arr: np.ndarray) -> int:
        """Offset of the array's first byte within its cache line."""
        return arr.ctypes.data % CACHELINE

    # Packed vs aligned dtypes: same fields, different padding
    packed = np.dtype([('px', 'f8'), ('qty', 'f4'), ('ts', 'u8'), ('tag', 'u8')], align=False)
    aligned = np.dtype([('px', 'f8'), ('qty', 'f4'), ('ts', 'u8'), ('tag', 'u8')], align=True)

    A = np.zeros(1024, dtype=packed)
    B = np.zeros(1024, dtype=aligned)

    # itemsize shows whether records tile the 64-byte line evenly
    print('packed addr%64:', addr_mod_cacheline(A), 'itemsize:', packed.itemsize)
    print('aligned addr%64:', addr_mod_cacheline(B), 'itemsize:', aligned.itemsize)

The structural solution involves separating hot and cold fields into distinct arrays keyed by the same index, ensuring that access patterns remain predictable and cache lines are populated only with relevant data.

    N = 1024  # arbitrary size chosen for this example

    # HOT: fields used on every message
    hot_px = np.empty(N, dtype=np.float64)
    hot_qty = np.empty(N, dtype=np.float32)
    hot_ts = np.empty(N, dtype=np.uint64)

    # COLD: metadata rarely used in hot path
    cold_tag = np.empty(N, dtype=np.uint64)
    cold_flags = np.empty(N, dtype=np.uint32)

By enforcing alignment and segregating hot/cold fields, we minimize local stalls and maximize the density of useful data per fetch.
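NumPy does not promise that a fresh array starts on a cache-line boundary, so the audit may report a non-zero offset. A common workaround, sketched here under the assumption that over-allocating by one cache line is acceptable, is to allocate raw bytes and slice from the first aligned address. `aligned_empty` is a hypothetical helper name, not a NumPy function.

```python
import numpy as np

CACHELINE = 64

def aligned_empty(n: int, dtype, align: int = CACHELINE) -> np.ndarray:
    """Return an uninitialized 1-D array whose first byte is `align`-aligned."""
    dtype = np.dtype(dtype)
    nbytes = n * dtype.itemsize
    # Over-allocate by `align` bytes so an aligned start always exists.
    raw = np.empty(nbytes + align, dtype=np.uint8)
    offset = (-raw.ctypes.data) % align
    # The view keeps `raw` alive through its base reference.
    return raw[offset:offset + nbytes].view(dtype)

hot_px = aligned_empty(1024, np.float64)
print(hot_px.ctypes.data % CACHELINE, hot_px.shape)
```

The cost is at most one wasted cache line per array, which is usually negligible next to the state itself.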

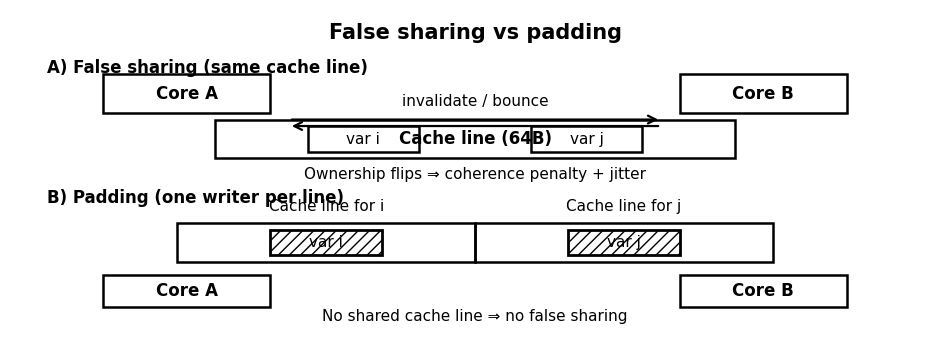

Design point 2: Coherence engineering

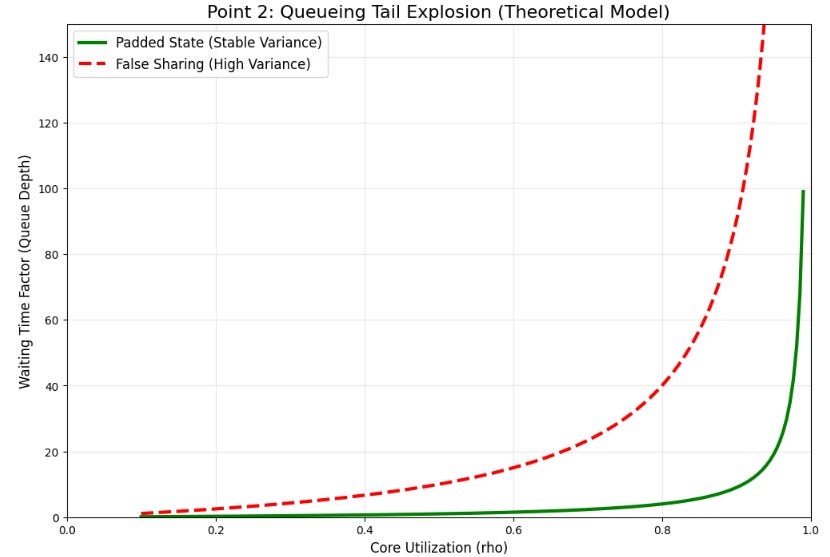

The belief that splitting work across cores inevitably lowers latency is dangerous. Multi-core performance is not additive when state forces coherence traffic. Worst-case coherence patterns appear in critical paths: per-symbol risk flags, shared order state, and global counters. If two cores write to different variables that share a cache line, false sharing occurs, causing the line to bounce between cores despite the data being logically independent.

The best-behaved designs keep a single writer per line. If Core A writes to a line and Core B writes to a different word on the same line, the line is invalidated repeatedly. This produces a latency term $T_{\text{coh}} \approx n_{\text{bounces}} \times t_{\text{invalidate}}$, where $n_{\text{bounces}}$ scales with the event rate.

We treat service time per message as $S = S_0 + B$, where $B$ is the coherence penalty. If bounces occur as a Poisson process with rate ν per message and cost τ per bounce, then:

$$\mathbb{E}[B] = \nu\tau, \qquad \mathrm{Var}(B) = \nu\tau^2$$

This variance is critical because queueing tail behavior is highly sensitive to service time variance. As utilization ρ → 1, waiting time quantiles explode non-linearly.
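The sensitivity to service time variance can be illustrated with the Pollaczek-Khinchine formula for an M/G/1 queue, which gives the mean wait (not a quantile) as λ·E[S²] / (2(1 − ρ)). This is a sketch under the assumption of Poisson arrivals; the function name and the numbers are illustrative only.

```python
def mg1_mean_wait(arrival_rate: float, mean_service: float, var_service: float) -> float:
    """Mean time spent waiting in queue for M/G/1 (Pollaczek-Khinchine)."""
    rho = arrival_rate * mean_service
    if not 0.0 <= rho < 1.0:
        raise ValueError("utilization must be in [0, 1)")
    second_moment = var_service + mean_service ** 2   # E[S^2]
    return arrival_rate * second_moment / (2.0 * (1.0 - rho))

# Same 10 us mean service time at 90% utilization, different variance.
steady = mg1_mean_wait(0.09, 10.0, 0.0)     # no service-time variance
jittery = mg1_mean_wait(0.09, 10.0, 100.0)  # variance equal to mean squared
print(f"steady={steady:.1f} us  jittery={jittery:.1f} us")
```

With the mean held fixed, the added variance alone doubles the mean wait in this example, which is why a coherence penalty that barely moves the average can still reshape the queue.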

To avoid false sharing without complex lock-free structures, we use padding. Each worker’s counter must occupy its own cache line.

    import numpy as np

    CACHELINE = 64
    PAD_WORDS = CACHELINE // 8  # uint64 slots per cache line
    n_workers = 8

    # One uint64 counter per worker, padded to one cache line each
    counters = np.zeros(n_workers * PAD_WORDS, dtype=np.uint64)

    def counter_view(i: int) -> np.ndarray:
        return counters[i * PAD_WORDS : (i + 1) * PAD_WORDS]

    # Worker i increments without touching Worker j's cache line
    counter_view(3)[0] += 1

For global metrics like inventory, we avoid updating a global scalar from multiple workers. Instead, we maintain per-shard state and reduce it only on demand or on a schedule, decoupling the write frequency from the read frequency.
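The per-shard idea can be sketched as a small class in which each worker owns one padded slot and the total is computed only when someone asks for it. `ShardedInventory` is a hypothetical name, and the layout only pays off when workers really run on separate cores, for example processes over shared memory or native threads, which this single-process sketch does not set up.

```python
import numpy as np

CACHELINE = 64
SLOTS_PER_LINE = CACHELINE // np.dtype(np.int64).itemsize  # 8 slots

class ShardedInventory:
    """Per-worker inventory deltas, one cache line per worker, reduced on demand."""

    def __init__(self, n_workers: int) -> None:
        self._slots = np.zeros(n_workers * SLOTS_PER_LINE, dtype=np.int64)

    def add(self, worker: int, delta: int) -> None:
        # Each worker writes only to its own slot (single writer per line).
        self._slots[worker * SLOTS_PER_LINE] += delta

    def total(self) -> int:
        # Readers pay for the reduction; writers never touch a shared scalar.
        return int(self._slots[::SLOTS_PER_LINE].sum())

inv = ShardedInventory(n_workers=4)
inv.add(0, 5)
inv.add(3, -2)
inv.add(0, 1)
print(inv.total())
```

The reduction reads every shard, so it suits metrics that are written far more often than they are read.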

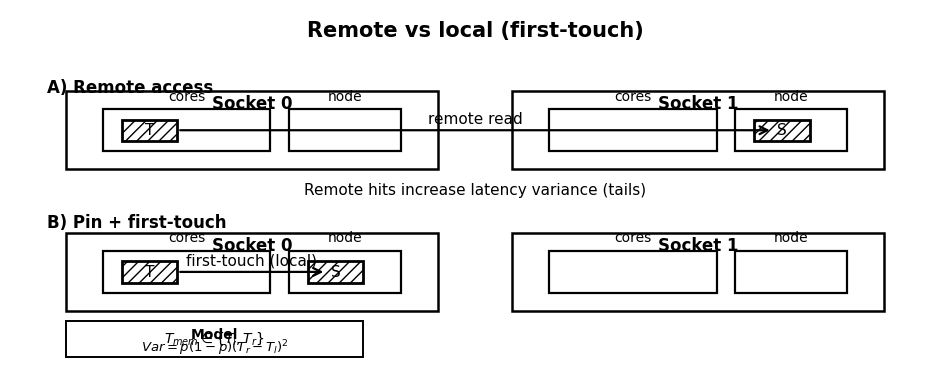

Design point 3: Topology-aware state

It is a misconception that memory is uniform; on multi-socket machines, memory access possesses locality. Allocating state on one node and reading it from another incurs a penalty that is regime-dependent. This risk appears in large symbol universes or multi-process architectures where processes are pinned to different sockets. In calm markets, cross-node penalties are masked, but in bursts, they become part of the tail.

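A NUMA miss changes effective bandwidth and increases variance. Writing $T_{\text{local}}$ and $T_{\text{remote}}$ for the local and remote access times and $p$ for the probability of a remote access, we model access time as:

$$\mathbb{E}[T_{\text{mem}}] = (1 - p)\, T_{\text{local}} + p\, T_{\text{remote}}$$

If the probability of remote access $p$ changes with thread scheduling, $T_{\text{mem}}$ becomes stochastic. The variance is given by:

$$\mathrm{Var}(T_{\text{mem}}) = p\,(1 - p)\,(T_{\text{remote}} - T_{\text{local}})^2$$

This variance again feeds into queueing tails.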
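A quick numerical sketch shows how the remote-access probability drives both moments when each access is treated as local with probability 1 − p and remote with probability p. The 80 ns and 140 ns latencies are placeholder values chosen for illustration, not measurements.

```python
def numa_access_stats(p_remote: float, t_local_ns: float, t_remote_ns: float):
    """Mean and variance of a two-point local/remote access-time mixture."""
    mean = (1.0 - p_remote) * t_local_ns + p_remote * t_remote_ns
    var = p_remote * (1.0 - p_remote) * (t_remote_ns - t_local_ns) ** 2
    return mean, var

for p in (0.0, 0.1, 0.5):
    mean, var = numa_access_stats(p, 80.0, 140.0)
    print(f"p={p:.1f}  mean={mean:6.1f} ns  std={var ** 0.5:5.1f} ns")
```

The variance vanishes when p is pinned at 0 (or at 1) and peaks at p = 0.5, so a scheduler that moves threads between nodes is injecting jitter even if the average looks acceptable.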

Since the OS kernel typically uses a first touch policy—where the node that first writes to a page owns it—we must ensure the consumer process initializes the state.

    import os
    import numpy as np

    def allocate_and_touch(shape, dtype, touch_value=0):
        x = np.empty(shape, dtype=dtype)
        x[...] = touch_value  # touch all pages by writing
        return x

    def pin_to_cores(cores):
        # os.sched_setaffinity is only available on Linux
        os.sched_setaffinity(0, set(cores))

    # Pin to cores [0,1,2,3] and then allocate state locally
    pin_to_cores([0, 1, 2, 3])
    state = allocate_and_touch((10000,), np.float64)

As you can see, pinning is more than a performance optimization; it is a stability mechanism that reduces the randomness in execution location, eliminating a hidden mixture component in the latency distribution.

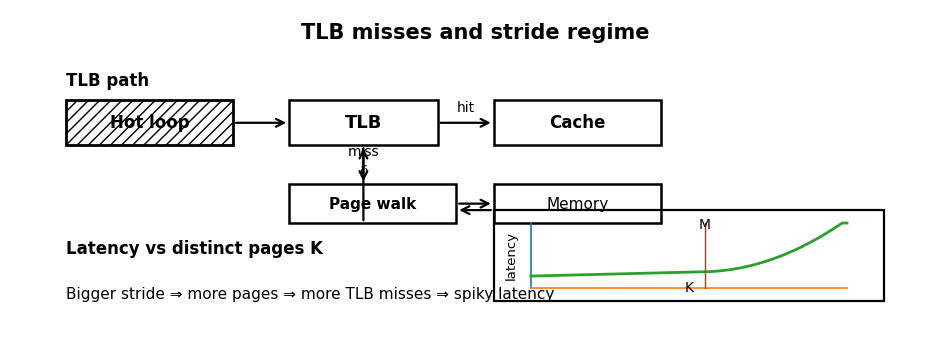

Design point 4: Page-level engineering

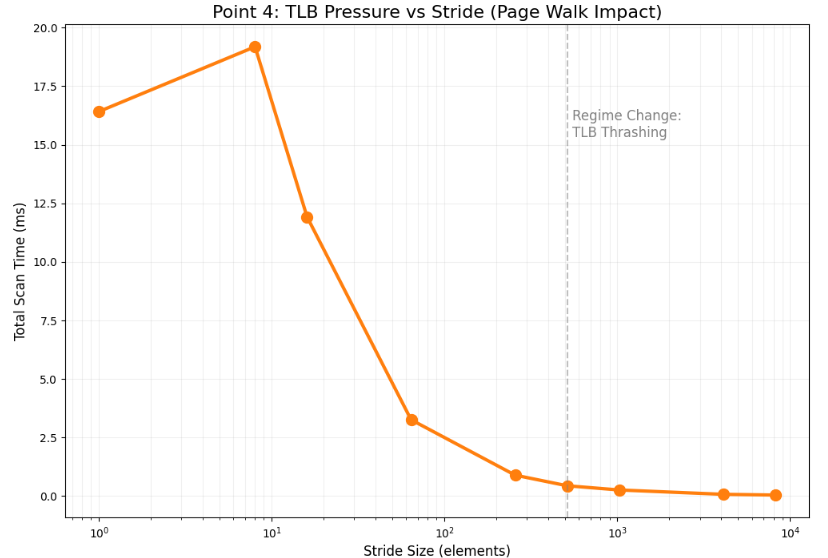

Another pervasive misconception in low-latency systems is that cache misses represent the primary memory bottleneck. In reality, caches are only half the story; before the CPU can fetch a cache line, it must translate the virtual address to a physical address via the Translation Lookaside Buffer (TLB). If a strategy touches too many pages too quickly, the TLB misses, and the hardware page walker intervenes, stalling the pipeline.

This risk is acute when scanning large state arrays with poor stride patterns or maintaining sparse per-symbol objects spread across the heap. A classic pathology involves a hot loop that reads just a few bytes from a vast number of pages; while the data cache sees a low volume of bytes, the TLB sees an overwhelming number of distinct pages.

We model the translation cost as a variance injection term governed by two quantities: δ, the penalty of a page walk, and p(K), the probability of a miss given K pages touched per message. As K exceeds the TLB’s coverage capacity M, the miss rate increases sharply, causing latency to become spiky.

The impact of access patterns is quantified by approximating the number of distinct pages touched when scanning N elements of size b with stride s (counted in elements):

$$N_{\text{pages}} \approx \min\!\left(N,\ \left\lceil \frac{N\, s\, b}{P} \right\rceil\right)$$

where P is the page size (typically 4KB). This relationship reveals that stride is a multiplier for page footprint. A stride that forces jumps across page boundaries can turn a computationally trivial loop into a translation nightmare.
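The stride effect can be checked by counting pages directly. The sketch below assumes the array starts on a page boundary, counts only the page holding the first byte of each access, and compares the exact count with the approximation min(N, ⌈N·s·b / P⌉), where the stride s is measured in elements. Both function names are hypothetical.

```python
import math
import numpy as np

def pages_touched_exact(n_touched: int, elem_bytes: int, stride: int,
                        page_bytes: int = 4096) -> int:
    """Distinct pages hit when touching n elements, every `stride` elements."""
    offsets = np.arange(n_touched, dtype=np.int64) * stride * elem_bytes
    return int(np.unique(offsets // page_bytes).size)

def pages_touched_approx(n_touched: int, elem_bytes: int, stride: int,
                         page_bytes: int = 4096) -> int:
    """Span of the scan in pages, capped at one page per access."""
    span = n_touched * stride * elem_bytes
    return min(n_touched, math.ceil(span / page_bytes))

for stride in (1, 64, 512, 4096):
    exact = pages_touched_exact(10_000, 8, stride)
    approx = pages_touched_approx(10_000, 8, stride)
    print(f"stride={stride:5d}  exact={exact:6d}  approx={approx:6d}")
```

For 8-byte elements the footprint grows from 20 pages at stride 1 to one page per access at stride 512, after which every additional read is a new translation.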

We can diagnose this regime change qualitatively by measuring scan latency as a function of stride. The following Python snippet shows the non-linear latency jump that occurs when the stride causes page thrashing:

    import time
    import numpy as np

    def scan_ns_per_element(arr: np.ndarray, step: int) -> float:
        view = arr[::step]
        t0 = time.perf_counter_ns()
        view.sum()
        t1 = time.perf_counter_ns()
        # Normalize by elements touched: larger steps read fewer elements,
        # so total time alone would hide the per-access cost.
        return (t1 - t0) / view.size

    N = 50_000_000
    arr = np.ones(N, dtype=np.float64)

    # Observe regime change as step size increases
    for step in [1, 2, 4, 8, 64, 512, 4096]:
        print(step, scan_ns_per_element(arr, step))

The engineering response is to keep hot loops page-local by operating on contiguous blocks and avoiding sparse pointer chasing. Where appropriate, utilizing huge pages can increase effective coverage, though this requires OS support and careful operational discipline.

Design point 5: Control-flow predictability

The statement "branches are free if logic is correct" ignores the microarchitectural reality that branches are a performance contract. Predictable branches are cheap, but unpredictable branches are expensive in ways that manifest as high volatility. Trading logic is inherently conditional: market state changes trigger different paths, and risk thresholds flip behavior.

When the CPU mispredicts a branch, it must flush speculative work and refetch the correct path. We model the service time S for a message requiring m branches as:

$$S = S_0 + \sum_{i=1}^{m} \kappa_i\, M_i, \qquad M_i \sim \mathrm{Bernoulli}(p_i)$$

where $S_0$ is the baseline service time, $\kappa_i$ is the penalty, and $p_i$ is the misprediction probability for branch i. A volatility regime change increases both the message rate λ and the misprediction probabilities $p_i$ simultaneously, pushing the service time distribution’s tail upward exactly when queueing pressure is highest.

The objective is not to eliminate logic but to stabilize control flow. We achieve this by replacing if/elif chains with table-driven finite state transitions.

    import numpy as np

    # States and events
    NEW, LIVE, PARTIAL, FILLED, CANCELED, REJECTED = range(6)
    ACK, FILL, CANCEL_ACK, REJECT = range(4)

    # Transition table: next_state[state, event]
    next_state = np.array([
        [LIVE, NEW, NEW, REJECTED],           # from NEW
        [LIVE, PARTIAL, CANCELED, REJECTED],  # from LIVE
        # ... (other states)
    ], dtype=np.int8)

    state = LIVE

    # Deterministic transition via array indexing rather than branching
    state = int(next_state[state, FILL])

Similarly, risk gating can be implemented by evaluating conditions into a bitmask and indexing a policy array, reducing complex branching logic to a single, stable lookup.
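A minimal sketch of the bitmask approach follows. The three conditions, the action codes, and the policy contents are all hypothetical and chosen only to show the mechanics; in pure Python the interpreter overhead dominates, so the pattern matters most once it is vectorized or moved to native code.

```python
import numpy as np

# Condition bits (hypothetical risk checks)
OVER_LIMIT, STALE_QUOTE, KILL_SWITCH = 1, 2, 4

# Actions
ALLOW, REDUCE_ONLY, BLOCK = 0, 1, 2

# policy[mask] -> action for every combination of the three bits.
policy = np.full(8, BLOCK, dtype=np.int8)  # default: block
policy[0] = ALLOW                          # no condition raised
policy[OVER_LIMIT] = REDUCE_ONLY           # only the limit is breached
policy[STALE_QUOTE] = REDUCE_ONLY          # only the quote is stale

def gate(over_limit: bool, stale_quote: bool, kill_switch: bool) -> int:
    # Fold the conditions into one integer, then do a single lookup.
    mask = int(over_limit) | (int(stale_quote) << 1) | (int(kill_switch) << 2)
    return int(policy[mask])

print(gate(False, False, False), gate(True, False, False), gate(True, True, False))
```

Every combination of conditions is enumerated up front, so an unexpected combination falls through to an explicit default instead of an untested branch.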

Design point 6: Wire-format and parsing structures

Parsing is frequently dismissed as I/O, yet it is stateful computation that can poison the latency distribution if it allocates memory, branches unpredictably, or touches scattered pages. In fast markets, the diversity of message types—auctions, trades, partial frames—increases. If parsing logic relies on dynamic dictionaries or ad-hoc string parsing, the decode time becomes the primary driver of tail latency.

Parsing hits every budget simultaneously: variable offsets disrupt prefetching, and string decoding is computationally expensive. We model decode time as a mixture:

$$D = D_k \ \text{with probability } \pi_k, \qquad \mathbb{E}[D] = \sum_k \pi_k\, \mathbb{E}[D_k]$$

where $D_k$ is the decode time for message type k and $\pi_k$ is its frequency. During bursts, the mixture weights $\pi_k$ shift toward more complex message types. The design objective is to make $D_k$ uniform across types and stable under load.

The solution is to design rigid schemas and parse using buffer views rather than creating Python objects.

    import struct
    import numpy as np

    # Fixed-width schema: ts:u64, px:i32, qty:i32, flags:u16, type:u8,
    # then 5 pad bytes so each record is 24 bytes and ts stays 8-byte aligned
    FMT = '<QiiHB5x'

    def decode_one(buf: memoryview, offset: int):
        # Unpack directly into scalars
        return struct.unpack_from(FMT, buf, offset)

    # Batch decode via numpy view (same 24-byte record layout as FMT)
    dtype = np.dtype([('ts', 'u8'), ('px', 'i4'), ('qty', 'i4'),
                      ('flags', 'u2'), ('type', 'u1'), ('pad', 'u1')], align=True)
    assert dtype.itemsize == struct.calcsize(FMT)

    def decode_batch(buf: bytes) -> np.ndarray:
        return np.frombuffer(memoryview(buf), dtype=dtype)

This approach transforms decoding from per-message parsing into view creation, ensuring that decode cost remains stable regardless of the message mixture.
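A round trip makes the layout contract explicit. The sketch below packs two records with `struct` and reads them back through a NumPy view; it spells out the field offsets and the 24-byte record size rather than relying on automatic alignment. The record values and the names `REC` and `wire` are illustrative assumptions.

```python
import struct
import numpy as np

# ts:u64, px:i32, qty:i32, flags:u16, type:u8, then 5 pad bytes = 24 bytes
FMT = "<QiiHB5x"

REC = np.dtype({
    "names":   ["ts", "px", "qty", "flags", "type"],
    "formats": ["<u8", "<i4", "<i4", "<u2", "u1"],
    "offsets": [0, 8, 12, 16, 18],
    "itemsize": 24,
})
# Both descriptions of the wire format must agree on the record size.
assert struct.calcsize(FMT) == REC.itemsize

# Two hypothetical messages; prices are already integer ticks.
wire = (struct.pack(FMT, 1_000, 512_375, 10, 0, 1)
        + struct.pack(FMT, 1_001, 512_350, 5, 1, 2))

batch = np.frombuffer(wire, dtype=REC)   # zero-copy, read-only view
print(batch["px"], batch["type"])
```

Asserting that the two size calculations match catches the most common failure here, which is a struct format and a dtype that silently drift apart.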

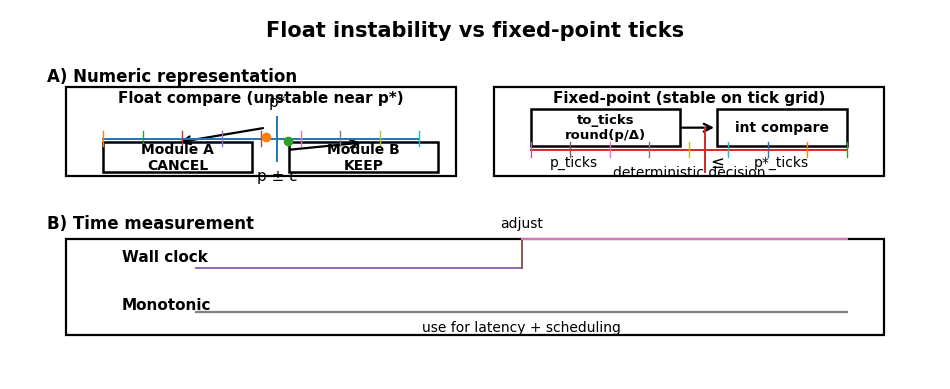

Design point 7: Time and numeric representation

Floats are acceptable for analysis but dangerous for the control surface of discrete decisions. The problem is not the speed of float arithmetic but the fact that it is not a deterministic ledger. Rounding artifacts can produce inconsistent thresholds, leading to situations where one module decides to cancel and another decides to keep, or where PnL attribution becomes unreproducible.

Trading decisions often take the form of comparing a price $p$ to a threshold $p^*$. With floating-point representation $p + \varepsilon$, decision error occurs when $|\varepsilon| > |p^* - p|$. Near thresholds, this difference is often a single tick, making the decision unstable. Fixed-point representation avoids this by enforcing that all values lie on the tick grid:

$$p = n\,\Delta, \qquad n \in \mathbb{Z}$$

where Δ is the tick size.

We strictly use monotonic time for latency measurement and scheduling, avoiding wall clocks which are subject to adjustments. For prices, we implement a fixed-point grid.

    from dataclasses import dataclass

    @dataclass(frozen=True)
    class TickGrid:
        tick_size: float

        def to_ticks(self, px: float) -> int:
            return int(round(px / self.tick_size))

    def trigger_stop():
        # Placeholder action for the example
        print("STOP TRIGGERED")

    GRID = TickGrid(tick_size=0.25)

    # 1. Define the threshold (e.g., stop price)
    stop_ticks = GRID.to_ticks(5123.75)

    # 2. Define the incoming market price
    last_px_float = 5123.50
    last_px_ticks = GRID.to_ticks(last_px_float)

    # 3. Deterministic comparison
    if last_px_ticks <= stop_ticks:
        trigger_stop()

This ensures that comparisons are integer-exact and reproducible across runs and components.
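The threshold instability is easy to reproduce. In the sketch below, a price that should sit exactly on the stop arrives as the result of a float addition; the tick size of 0.1 and the prices are chosen only because they expose the rounding artifact.

```python
def to_ticks(px: float, tick_size: float) -> int:
    """Snap a float price to the nearest integer tick index."""
    return int(round(px / tick_size))

TICK = 0.1
last_px = 0.1 + 0.2   # stored as 0.30000000000000004
stop_px = 0.3

# Float comparison: the price looks like it is above the stop.
float_triggers = last_px <= stop_px

# Tick comparison: both prices snap to the same grid point.
tick_triggers = to_ticks(last_px, TICK) <= to_ticks(stop_px, TICK)

print(float_triggers, tick_triggers)
```

Two modules that disagree on which of these comparisons to use would reach opposite decisions on the same market data, which is the inconsistency the fixed-point grid removes.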

Okay guys! If the reaction to this article is that it demands too much for a given strategy, that is a healthy response; the correct next step is to calculate the value of timing precision for that specific strategy class. However, for any domain where cancel timing, hedging, or queue positioning is material, these topics are not engineering vanity—they are part of the model. The most pragmatic approach is not to chase micro-optimizations but to choose data structures that behave predictably on the hardware.

Alright, books are closed on this session. Until the next open: keep your thresholds strict, your latency deterministic, and your discipline absolute. Let the market bring the chaos; you bring the structure. Stay liquid, respect the tails, and let the edge play out one microsecond at a time. 🏛️⚡


Appendix

Full script:

    import numpy as np
    import os
    import time
    import struct
    import sys
    from dataclasses import dataclass


    def run_design_point_1_cache_line():
        print("\n Design Point 1: Cache-line")
        CACHELINE = 64

        def addr_mod_cacheline(arr: np.ndarray) -> int:
            return arr.ctypes.data % CACHELINE

        # Packed vs Aligned Dtypes
        packed = np.dtype([('px', 'f8'), ('qty', 'f4'), ('ts', 'u8'), ('tag', 'u8')], align=False)
        aligned = np.dtype([('px', 'f8'), ('qty', 'f4'), ('ts', 'u8'), ('tag', 'u8')], align=True)

        A = np.zeros(1024, dtype=packed)
        B = np.zeros(1024, dtype=aligned)

        print('packed addr%64:', addr_mod_cacheline(A))
        print('aligned addr%64:', addr_mod_cacheline(B))

        # Structural solution: Separating hot and cold fields
        N = 1024

        # HOT: fields used on every message
        hot_px = np.empty(N, dtype=np.float64)
        hot_qty = np.empty(N, dtype=np.float32)
        hot_ts = np.empty(N, dtype=np.uint64)

        # COLD: metadata rarely used in hot path
        cold_tag = np.empty(N, dtype=np.uint64)
        cold_flags = np.empty(N, dtype=np.uint32)

        print("Hot/Cold arrays initialized.")


    def run_design_point_2_coherence():
        print("\n Design Point 2: Coherence Engineering")
        CACHELINE = 64
        PAD_WORDS = CACHELINE // 8
        n_workers = 8

        # One uint64 counter per worker, padded to one cache line each
        counters = np.zeros(n_workers * PAD_WORDS, dtype=np.uint64)

        def counter_view(i: int) -> np.ndarray:
            return counters[i * PAD_WORDS : (i+1) * PAD_WORDS]

        # Worker i increments without touching Worker j's cache line
        # Example: Worker 3
        counter_view(3)[0] += 1
        print(f"Worker 3 counter: {counter_view(3)[0]}")


    def run_design_point_3_topology():
        print("\n Design Point 3: Topology-aware state")

        def allocate_and_touch(shape, dtype, touch_value=0):
            x = np.empty(shape, dtype=dtype)
            x[...] = touch_value # Touch all pages by writing
            return x

        def pin_to_cores(cores):
            # os.sched_setaffinity is available on Linux only
            if hasattr(os, 'sched_setaffinity'):
                try:
                    os.sched_setaffinity(0, set(cores))
                    print(f"Process pinned to cores: {cores}")
                except Exception as e:
                    print(f"Could not pin cores (likely permission/OS issue): {e}")
            else:
                print("Skipping pin_to_cores: os.sched_setaffinity not available on this platform.")

        # Pin to cores [0,1,2,3] and then allocate state locally
        pin_to_cores([0,1,2,3])
        state = allocate_and_touch((10000,), np.float64)
        print(f"State allocated with shape {state.shape} and initialized.")


    def run_design_point_4_page_level():
        print("\n Design Point 4: Page-level Engineering")

        def scan_sum(arr: np.ndarray, step: int) -> float:
            t0 = time.perf_counter_ns()
            s = float(arr[::step].sum())
            t1 = time.perf_counter_ns()
            return (t1 - t0) / 1e6 # ms

        # Reduced N slightly for quick execution in example context
        N = 10_000_000
        print(f"Scanning array of size {N}...")
        arr = np.ones(N, dtype=np.float64)

        # Observe regime change as step size increases
        print("Stride | Latency (ms)")
        for step in [1, 2, 4, 8, 64, 512, 4096]:
            latency = scan_sum(arr, step)
            print(f"{step:6} | {latency:.4f}")


    def run_design_point_5_control_flow():
        print("\n Design Point 5: Control-flow Predictability")

        # States and Events
        NEW, LIVE, PARTIAL, FILLED, CANCELED, REJECTED = range(6)
        ACK, FILL, CANCEL_ACK, REJECT = range(4)

        # Transition table: next_state[state, event]
        next_state = np.zeros((6, 4), dtype=np.int8)

        # Define transitions (Example logic)
        next_state[NEW] = [LIVE, NEW, NEW, REJECTED]
        next_state[LIVE] = [LIVE, PARTIAL, CANCELED, REJECTED]
        # Filling others to avoid index errors in generic usage
        next_state[PARTIAL] = [PARTIAL, FILLED, CANCELED, REJECTED]

        state = LIVE
        event = FILL

        # Deterministic transition via array indexing rather than branching
        new_state = int(next_state[state, event])

        state_names = ["NEW", "LIVE", "PARTIAL", "FILLED", "CANCELED", "REJECTED"]
        print(f"Transition: {state_names[state]} + Event(FILL) -> {state_names[new_state]}")


    def run_design_point_6_parsing():
        print("\n Design Point 6: Wire-format and Parsing")

        # Fixed-width schema: ts:u64, px:i32, qty:i32, flags:u16, type:u8
        FMT = '<QiiHB'

        # Batch decode via numpy view
        dtype_schema = np.dtype([
            ('ts', 'u8'),
            ('px', 'i4'),
            ('qty', 'i4'),
            ('flags', 'u2'),
            ('type', 'u1'),
            ('pad', 'u1') # Explicit padding field from user schema
        ], align=True) # align=True may force total size to be multiple of 8 (e.g., 24 bytes)

        def decode_batch(buf: bytes) -> np.ndarray:
            return np.frombuffer(memoryview(buf), dtype=dtype_schema)

        # 1. Create the raw packed data (19 bytes)
        # ts=100, px=10, qty=1, flags=0, type=1
        raw_data = struct.pack(FMT, 100, 10, 1, 0, 1)

        # 2. Add the explicit 'pad' byte defined in the numpy schema fields
        raw_data += b'\x00'

        # 3. Add alignment padding
        # If align=True, numpy pads the total struct size to a multiple of 8.
        # We calculate the required size dynamically to avoid hardcoding errors.
        current_size = len(raw_data)
        required_size = dtype_schema.itemsize
        if current_size < required_size:
            raw_data += b'\x00' * (required_size - current_size)

        decoded = decode_batch(raw_data)
        print(f"Batch decoded: {decoded}")
        print(f"Struct itemsize: {dtype_schema.itemsize} bytes (Aligned)")


    def run_design_point_7_numeric():
        print("\n Design Point 7: Time and Numeric Representation")

        @dataclass(frozen=True)
        class TickGrid:
            tick_size: float

            def to_ticks(self, px: float) -> int:
                return int(round(px / self.tick_size))

        def trigger_stop():
            print("STOP TRIGGERED")

        GRID = TickGrid(tick_size=0.25)

        # 1. Define the Threshold (e.g., Stop Price)
        stop_ticks = GRID.to_ticks(5123.75)

        # 2. Define the Incoming Market Price
        last_px_float = 5123.50
        last_px_ticks = GRID.to_ticks(last_px_float)

        print(f"Stop ticks: {stop_ticks}, Last px ticks: {last_px_ticks}")

        # 3. Deterministic Comparison
        if last_px_ticks <= stop_ticks:
            trigger_stop()
        else:
            print("Conditions not met.")


    if __name__ == "__main__":
        run_design_point_1_cache_line()
        run_design_point_2_coherence()
        run_design_point_3_topology()
        run_design_point_4_page_level()
        run_design_point_5_control_flow()
        run_design_point_6_parsing()
        run_design_point_7_numeric()
