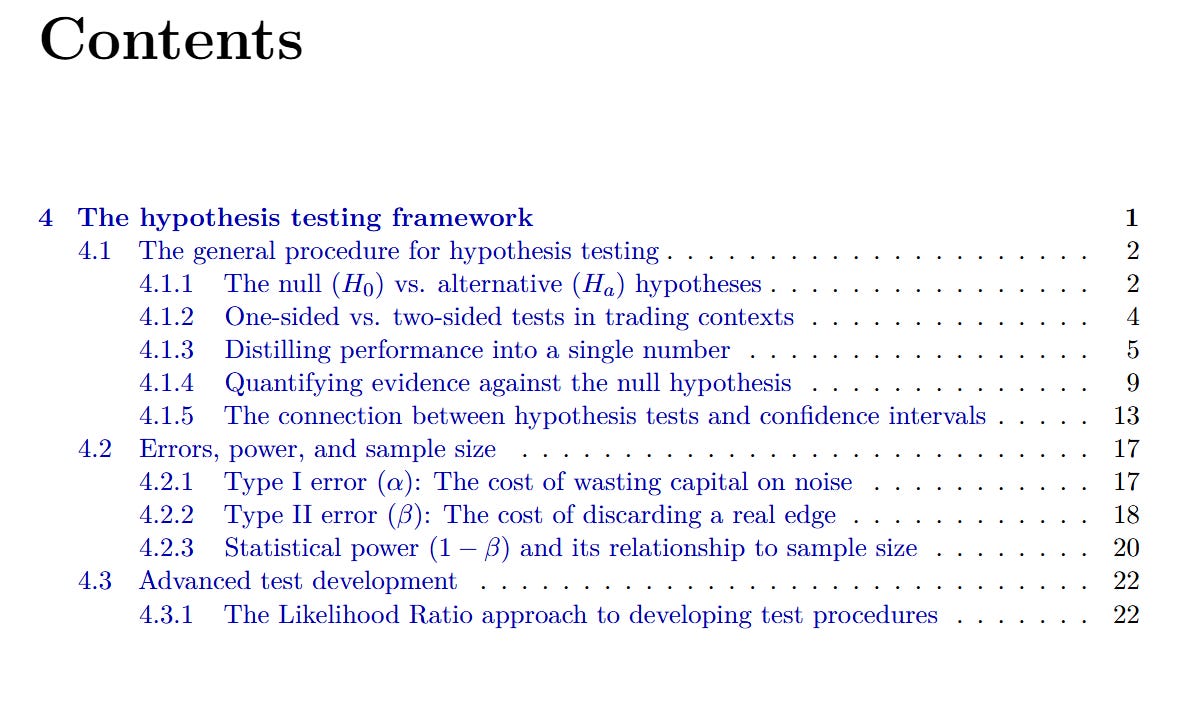

The Hypothesis-Testing Framework

This chapter turns estimation into a decision process: it moves from a single backtest path to a formal, falsifiable test that separates repeatable edge from noise and supports real capital decisions. It starts from a skeptical null (“no edge”) and builds on it with robust test statistics, defensible p-values and significance levels (α), confidence-interval thinking, and explicit power analysis.

What’s inside:

From estimation to decision. Why quantifying uncertainty with point and interval estimates is not enough on its own, and how hypothesis testing supplies a courtroom-style procedure for reaching a go/no-go verdict.

Defining the claims. How to state precise null and alternative hypotheses for trading claims about mean excess return, the Sharpe ratio, regression alpha, correlations, and model comparisons, including transaction costs through a null such as H₀: µ ≤ friction.

Test statistics done right. Distilling performance into a “signal ÷ noise” ratio, using HAC (Newey–West) standard errors, and applying joint F-tests when several factors are assessed together.

From numbers to evidence. Mapping a test statistic to a p-value and choosing α as a business threshold; what p-values are, and what they are not.

Intervals as decisions. The duality between tests and confidence intervals; bootstrap CIs for statistics such as the Sharpe ratio; and decisions based on whether the lower bound clears a cost-aware hurdle.

Errors and power. Type I (false edge) vs. Type II (missed edge), their costs, and the levers that set statistical power: α, effect size, variance, and sample size.

Backtest length trade-off. Long samples boost power but threaten stationarity; short samples fit the current regime but risk underpowered conclusions. Also covered: ways to balance this tension.

Advanced testing via Likelihood Ratio. A general framework for nested models, with a χ² reference distribution, for testing structural breaks, factor redundancy, and whether added model complexity is justified.

A disciplined path from “looks good” to deployment decisions that are statistically defensible and economically relevant.
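To make the “signal ÷ noise” idea from “Test statistics done right” and the cost-aware null H₀: µ ≤ friction a little more concrete, here is a minimal sketch of a one-sided t-test on mean excess return with a Newey–West (Bartlett-kernel) standard error. It is an illustration under stated assumptions, not the chapter's own implementation: the function name `newey_west_tstat`, the `hurdle` parameter standing in for per-period friction, the lag rule of thumb 4(n/100)^(2/9), and the large-sample normal approximation for the p-value are all choices made for this example.

```python
import math
from statistics import NormalDist


def newey_west_tstat(returns, hurdle=0.0, lags=None):
    """One-sided test of H0: mu <= hurdle vs H1: mu > hurdle (hypothetical helper).

    `hurdle` is an assumed per-period friction threshold; the p-value uses
    a large-sample normal approximation.
    """
    n = len(returns)
    if lags is None:
        # Common rule-of-thumb bandwidth (an assumption, not the only choice).
        lags = int(math.floor(4 * (n / 100) ** (2 / 9)))
    mean = sum(returns) / n
    dev = [r - mean for r in returns]

    def autocov(k):
        # Sample autocovariance at lag k, divided by n as in Newey-West.
        return sum(dev[t] * dev[t - k] for t in range(k, n)) / n

    # Long-run variance with Bartlett weights, which keep the estimate >= 0.
    lrv = autocov(0)
    for k in range(1, lags + 1):
        lrv += 2 * (1 - k / (lags + 1)) * autocov(k)
    if lrv <= 0:
        raise ValueError("long-run variance is zero; the series is constant")

    se = math.sqrt(lrv / n)        # HAC standard error of the mean
    t = (mean - hurdle) / se       # "signal / noise"
    p = 1 - NormalDist().cdf(t)    # one-sided p-value for H1: mu > hurdle
    return t, p
```

Setting `hurdle` to an estimate of per-period costs turns the same statistic into a test of whether the edge survives friction rather than merely whether it differs from zero.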
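For the “Intervals as decisions” idea, a percentile bootstrap CI for the Sharpe ratio with a lower-bound decision rule could look roughly like the sketch below. The helper names (`sharpe`, `bootstrap_sharpe_ci`, `clears_hurdle`), the 252-periods-per-year annualization, and the default hurdle of 0.5 are illustrative assumptions. The resampling here is i.i.d., which ignores serial dependence; a block bootstrap would be the natural alternative for autocorrelated returns.

```python
import math
import random
import statistics


def sharpe(returns, periods_per_year=252):
    # Annualized Sharpe ratio of excess returns (252 trading days assumed).
    return statistics.mean(returns) / statistics.stdev(returns) * math.sqrt(periods_per_year)


def bootstrap_sharpe_ci(returns, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the annualized Sharpe ratio (i.i.d. resampling)."""
    rng = random.Random(seed)
    n = len(returns)
    # Resample with replacement, recompute the Sharpe ratio, and sort the draws.
    draws = sorted(sharpe(rng.choices(returns, k=n)) for _ in range(n_boot))
    lo = draws[int(alpha / 2 * n_boot)]
    hi = draws[int((1 - alpha / 2) * n_boot) - 1]
    return lo, hi


def clears_hurdle(returns, hurdle_sharpe=0.5, **kwargs):
    # Hypothetical decision rule: deploy only if the CI lower bound beats the hurdle.
    lo, _ = bootstrap_sharpe_ci(returns, **kwargs)
    return lo > hurdle_sharpe
```

Deciding on the lower bound rather than the point estimate builds estimation uncertainty directly into the go/no-go call; the hurdle itself would be set from costs and opportunity cost, not from the data.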
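The power levers in “Errors and power” and the “Backtest length trade-off” can be illustrated with a back-of-the-envelope calculation. Assuming i.i.d. normal returns, the t-statistic for H₀: SR ≤ 0 is approximately SR·√T with SR the annual Sharpe ratio and T the sample length in years, so the length needed for a one-sided test at level α to reach power 1 − β is

$$
T \approx \left(\frac{z_{1-\alpha} + z_{1-\beta}}{\mathrm{SR}}\right)^{2}.
$$

The function names below are hypothetical, and fat tails or autocorrelation would lengthen the required sample.

```python
import math
from statistics import NormalDist


def years_needed(annual_sharpe, alpha=0.05, power=0.80):
    """Approximate backtest length (years) for a one-sided test of H0: SR <= 0.

    Assumes i.i.d. normal returns, so the t-statistic is about SR * sqrt(years).
    """
    z = NormalDist()
    return ((z.inv_cdf(1 - alpha) + z.inv_cdf(power)) / annual_sharpe) ** 2


def power_for_years(annual_sharpe, years, alpha=0.05):
    """Approximate probability of rejecting H0: SR <= 0 given the true annual Sharpe."""
    z = NormalDist()
    return 1 - z.cdf(z.inv_cdf(1 - alpha) - annual_sharpe * math.sqrt(years))


for sr in (0.5, 1.0, 2.0):
    print(f"SR={sr}: ~{years_needed(sr):.1f} years for 80% power")
```

For an annual Sharpe of 1.0 this gives roughly 6.2 years at α = 0.05 and 80% power, and halving the Sharpe ratio quadruples the requirement, which is exactly where the stationarity concern starts to bite.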
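As a small instance of the likelihood-ratio framework, the sketch below tests for a mean shift at a known break date. The restricted model has one mean and the unrestricted model has two, with a common Gaussian variance, so LR = 2(ℓ₁ − ℓ₀) = n·log(σ̂₀²/σ̂₁²) is compared with a χ² distribution with 1 degree of freedom. The function names are hypothetical, and the known break date is an assumption: searching over many candidate dates and keeping the best one invalidates the plain χ²(1) reference (sup-type statistics such as Andrews' test address that case).

```python
import math


def _mle_var(residuals):
    # Gaussian MLE of the variance (divides by n, not n - 1).
    return sum(e * e for e in residuals) / len(residuals)


def lr_break_test(returns, break_idx):
    """LR test of H0: one mean vs H1: a mean shift at a known index (common variance).

    Under H0, LR = 2 * (loglik_H1 - loglik_H0) = n * log(var_H0 / var_H1) ~ chi^2(1).
    """
    n = len(returns)
    mu = sum(returns) / n
    var0 = _mle_var([r - mu for r in returns])

    before, after = returns[:break_idx], returns[break_idx:]
    mu_b, mu_a = sum(before) / len(before), sum(after) / len(after)
    var1 = _mle_var([r - mu_b for r in before] + [r - mu_a for r in after])

    lr = max(n * math.log(var0 / var1), 0.0)  # guard against tiny negative rounding
    p = math.erfc(math.sqrt(lr / 2))          # chi^2(1) survival function P(X > lr)
    return lr, p
```

The same recipe extends to factor redundancy or added model complexity: fit the nested and the larger model, and compare twice the log-likelihood gain with a χ² distribution whose degrees of freedom equal the number of extra parameters.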

Check a sample of what you will find inside: