Table of contents:

Introduction.

From venture capital to sovereign debt.

The Chinese threat.

Europe: the Draghi report, regulation, the impact of AI, and capital flight.

The physics of scaling: 106 GW by 2035.

Copper, uranium, and critical minerals.

Portfolio strategy 2026.

Introduction

For two decades, the dominant narrative was simple: software ate the world, and in doing so, freed itself from the constraints of the physical one. Asset-light business models, zero marginal cost, infinite scalability: these were not just buzzwords; they were the justification for paying 20–30x sales for anything with a login screen and an acronym in the name. That era is ending. The arrival of frontier AI has snapped the tether between tech and asset-light economics. Training and deploying AGI is not a SaaS problem but an industrial policy problem. To see where we stand in that transition, take a look at the following chart:

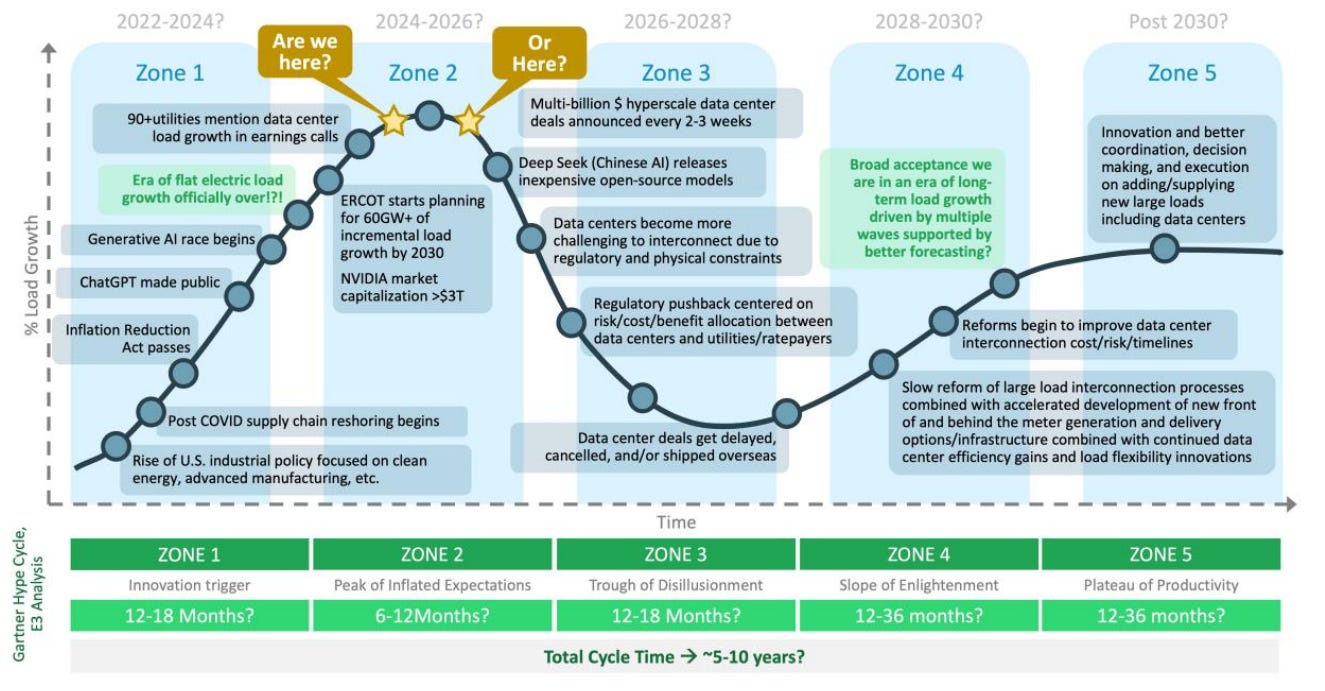

The data-center hype cycle suggests we are in the late stages of Zone 2, the peak of inflated expectations, just before AI load growth runs into grid bottlenecks, regulatory pushback, and cancelled projects. The portfolio strategy that follows assumes that the next phase is not cheaper compute but a long, capital-intensive slog through those constraints.

OpenAI’s trillion-dollar infrastructure ask is the template. AI at scale demands gigawatts of baseload power, hundreds of billions in data-center capex, and secure access to copper, uranium, and critical minerals. The unit of competition is no longer monthly active users, but megawatts and tonnes. As the cost and risk of this buildout exceed what private markets can rationally bear, states are stepping in as the only balance sheets large enough to keep the experiment running.

At the same time, the geopolitical map is being redrawn around energy and regulation. China has quietly shifted the game from chip efficiency to energy abundance, subsidizing electricity to neutralize US export controls. Europe, lacking scale champions, has defaulted to a model of regulatory extraction that actively repels growth capital. The US, caught between its AI ambitions and an overstretched grid, is being forced into a messy blend of nuclear revival, extended fossil lifelines, and emergency grid spending just to keep data centers online.

From venture capital to sovereign debt

For twenty years, the prevailing wisdom in global markets was that technology companies were asset-light. They scaled infinitely with minimal marginal cost, delivering gross margins that justified stratospheric valuations. In December 2025, that era is demonstrably over. The financial architecture of Silicon Valley has buckled under the weight of the physical requirements of AGI.

Recent disclosures indicate that OpenAI, the vanguard of the generative AI revolution, has formally petitioned the United States government for loan guarantees to support an infrastructure buildout estimated at over $1.15 trillion. This figure represents a capital expenditure requirement roughly equivalent to the GDP of Indonesia. The request for a federal backstop is a tacit admission that the unit economics of AI, in its current training phase, do not function under traditional private equity models.

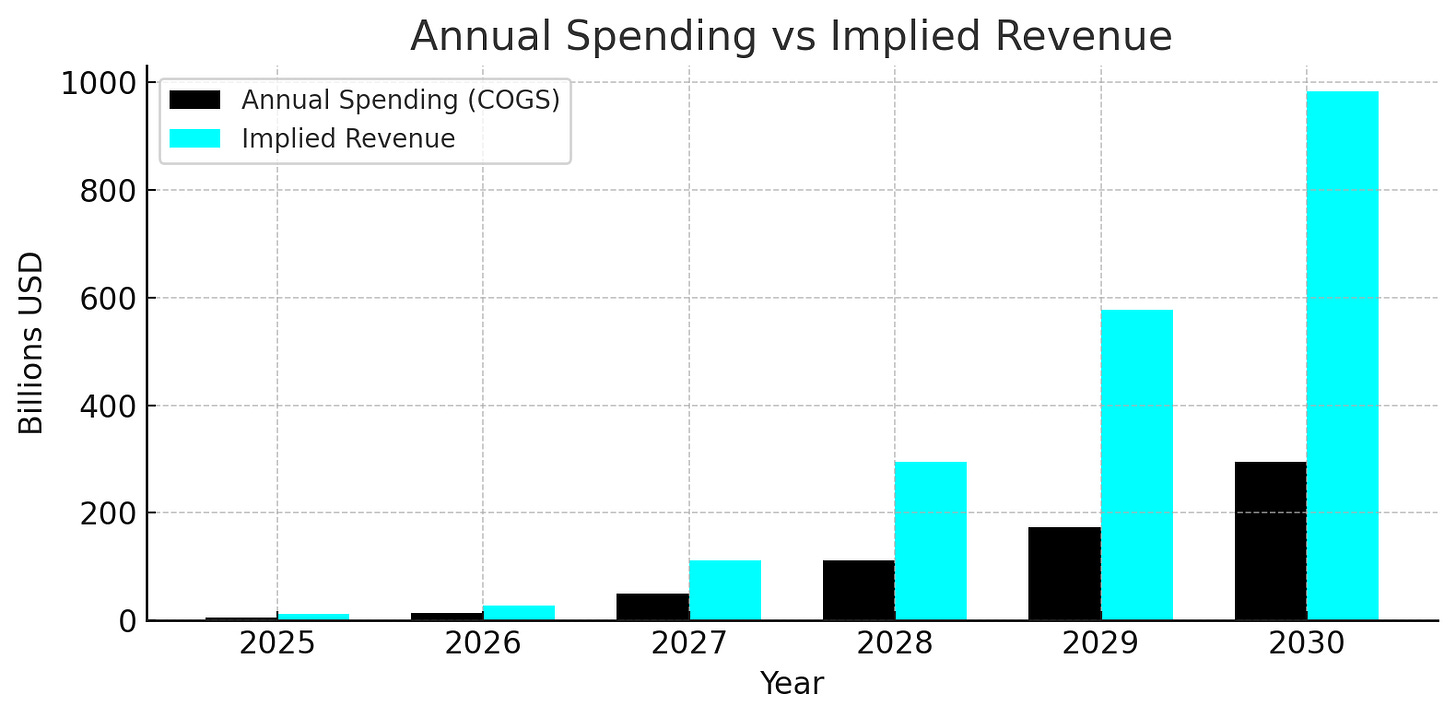

OpenAI projects a 48% gross profit margin in 2025, improving to 70% by 2029. If we assume all infrastructure spending flows through cost of goods sold, we can calculate the implied revenue needed to support these spending levels at OpenAI’s target margins.
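Under that assumption the calculation is a one-line rearrangement of the gross-margin definition: if gross margin m = (R - C) / R, where C is infrastructure spend booked as cost of goods sold, then the implied revenue is R = C / (1 - m). The sketch below applies this to the 48% and 70% margins cited above. The $100 billion spend figure and the function name `implied_revenue` are hypothetical, chosen only to illustrate the arithmetic; they are not OpenAI's actual numbers.

```python
def implied_revenue(cogs: float, gross_margin: float) -> float:
    """Revenue R such that (R - cogs) / R == gross_margin.

    Assumes every dollar of infrastructure spend is booked as COGS,
    as in the text above. Units are whatever cogs is expressed in.
    """
    if not 0.0 <= gross_margin < 1.0:
        raise ValueError("gross_margin must be in [0, 1)")
    return cogs / (1.0 - gross_margin)


# Hypothetical annual infrastructure spend in billions of USD (illustrative only).
spend_bn = 100.0
for year, margin in [(2025, 0.48), (2029, 0.70)]:
    revenue = implied_revenue(spend_bn, margin)
    print(f"{year}: margin {margin:.0%} -> implied revenue ${revenue:,.1f}bn")
```

Because C is divided by (1 - m), a higher target margin raises the revenue required for the same spend: at 48% each dollar of COGS needs roughly $1.92 of revenue, while at 70% it needs roughly $3.33.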

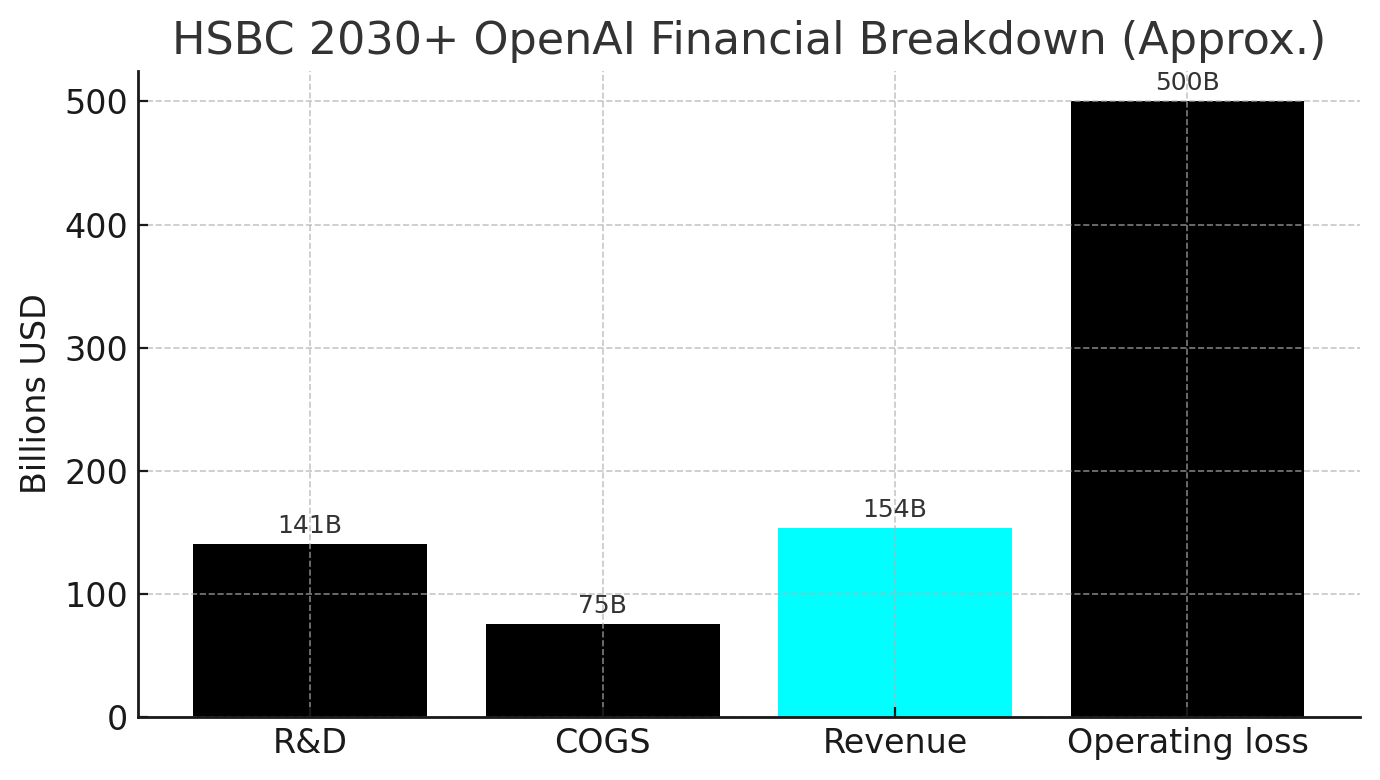

Financial modeling by HSBC suggests that without this sovereign intervention, OpenAI faces operating losses approaching $500 billion by 2030. The costs associated with training frontier models, specifically energy consumption and hardware depreciation, have scaled faster than revenue. The "children of Silicon Valley", a label market analysts now apply to tech founders who assumed capital would always be free, are finding that the next phase of growth requires the balance sheet of the US Treasury rather than the risk appetite of Sand Hill Road.

This marks the transition of AI from a product to strategic infrastructure. Just as the transcontinental railroad or the nuclear power fleet could not have been built by private enterprise alone, the gigawatt-scale data centers required for the next leap in model performance (clusters consuming 5 GW or more) are effectively public works projects. Investors must recognize that companies relying solely on private funding for foundation-model training face a high probability of insolvency. Value is shifting from the entities that design the models to the entities that build the state-backed infrastructure that hosts them.

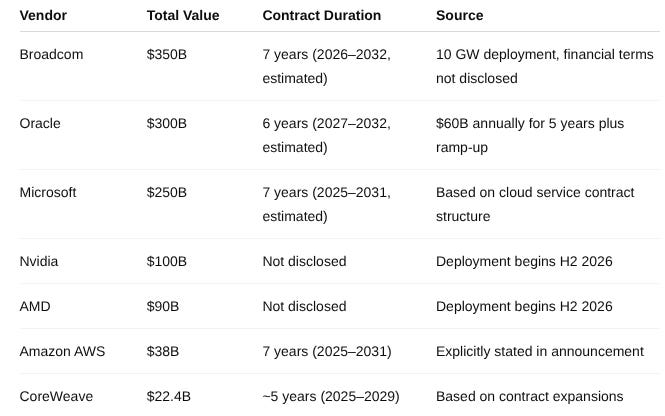

The scale of the proposed infrastructure buildout, often referred to under the codename Stargate, redefines industrial demand. Analysis of supply chain commitments reveals a spending plan allocated across seven major vendors, with Broadcom ($350 billion), Oracle ($300 billion), and Microsoft ($250 billion) absorbing the lion’s share of the capital flow between 2025 and 2035.

This infrastructure campaign is not merely about buying chips; it is about terraforming the American energy landscape. The Stargate project envisions a network of data centers that will consume vast quantities of power, necessitating a dedicated supply chain for energy generation and transmission. A document released by OpenAI in late 2025 outlines a requirement for 36 gigawatts of compute capacity by 2030. To put this in perspective, 36 GW is roughly equivalent to the total electrical generation capacity of Argentina.
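The 36 GW figure is a measure of power (capacity), while shares of national "electricity generation" are measures of energy over a year. A minimal sketch of the conversion follows, assuming a constant average draw; the load factors and the function name `annual_energy_twh` are hypothetical illustrations, not figures from OpenAI or the Department of Energy.

```python
HOURS_PER_YEAR = 8_760  # 365 days x 24 hours (ignores leap years)


def annual_energy_twh(capacity_gw: float, load_factor: float) -> float:
    """Convert power capacity (GW) into annual energy (TWh).

    load_factor is the assumed average fraction of capacity actually drawn
    over the year (a hypothetical input, not a sourced figure).
    """
    if not 0.0 <= load_factor <= 1.0:
        raise ValueError("load_factor must be in [0, 1]")
    gwh = capacity_gw * load_factor * HOURS_PER_YEAR
    return gwh / 1_000  # 1 TWh = 1,000 GWh


for lf in (1.0, 0.8):
    print(f"36 GW at {lf:.0%} load factor: {annual_energy_twh(36, lf):,.1f} TWh/year")
```

At full utilization, 36 GW corresponds to about 315 TWh per year; any realistic load factor below 100% scales that down proportionally.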

The implications for the US power grid are staggering. The Department of Energy has warned that data centers could consume up to 9% of total US electricity generation by 2030, a figure that necessitates the rapid construction of new baseload power. The Stargate initiative essentially forces the US government to become the guarantor of the energy transition, underwriting new nuclear builds and transmission lines that commercial utilities would otherwise deem too risky.

The Chinese threat

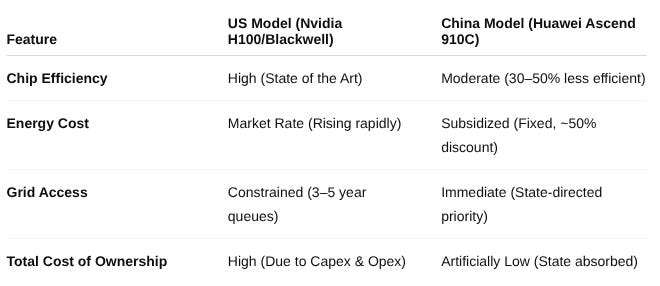

The primary external driver of this US pivot to state-backed AI is the unexpected resilience and acceleration of Chinese capability. Despite the comprehensive export controls imposed by the US Department of Commerce on advanced GPUs (specifically the Nvidia H100 and Blackwell series), China has not capitulated. Instead, Beijing has changed the rules of the game from silicon efficiency to energy abundance.

Reports surfacing in November and December 2025 indicate that the Chinese central government has initiated a massive subsidy program for its data-center sector. Local governments in energy-rich provinces, specifically Gansu, Inner Mongolia, and Guizhou, have introduced policy directives slashing electricity costs by up to 50% for data centers that use domestic Chinese chips, such as Huawei's Ascend series and Cambricon's accelerators.

By subsidizing the energy input, the Chinese state renders the technical inefficiency of its domestic chips economically irrelevant. A Financial Times report noted that while generating a token on Chinese hardware requires 30–50% more electricity than on Nvidia hardware, the 50% subsidy effectively neutralizes the US cost advantage.
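The arithmetic behind that claim can be made explicit. A minimal sketch, assuming both sides would otherwise pay the same electricity price and counting only the electricity component of cost per token (chip prices, cooling, and utilization are ignored); the function names are hypothetical.

```python
def relative_energy_cost(extra_energy: float, price_discount: float) -> float:
    """Electricity cost per token on domestic chips vs. an Nvidia baseline of 1.0.

    extra_energy:   fractional extra electricity per token (0.3 means 30% more).
    price_discount: fractional cut in the electricity price (0.5 means a 50% subsidy).
    Assumes identical unsubsidized electricity prices on both sides.
    """
    return (1.0 + extra_energy) * (1.0 - price_discount)


def break_even_discount(extra_energy: float) -> float:
    """Smallest price discount that fully offsets the extra energy use."""
    return extra_energy / (1.0 + extra_energy)


for extra in (0.30, 0.50):
    ratio = relative_energy_cost(extra, 0.50)
    needed = break_even_discount(extra)
    print(f"{extra:.0%} more energy: {ratio:.2f}x baseline cost with a 50% subsidy; "
          f"break-even subsidy {needed:.1%}")
```

Under these assumptions a 50% subsidy does more than neutralize the gap: energy cost per token lands at 0.65x to 0.75x the baseline, and a subsidy of roughly 23% to 33% would already be enough to break even.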

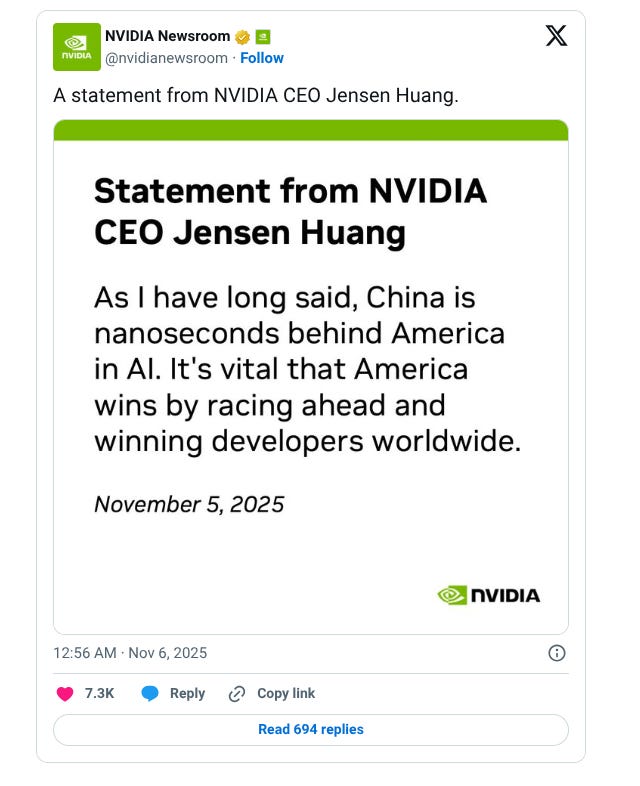

Furthermore, the velocity of infrastructure deployment in China vastly outpaces that of the US. Nvidia CEO Jensen Huang has starkly warned that while the US takes three to five years to permit, build, and power a new data center, China can assemble equivalent infrastructure in months. Huang's assessment that China is "nanoseconds behind" the US in practical AI capability undercuts the Western complacency that sanctions would permanently cripple the adversary. This realization reinforces the logic of the US AI Action Plan, which reframes the data-center buildout as a matter of national survival.

While Huang admires China's construction speed, he is even more concerned about the energy capacity needed to support the AI boom. He noted that China has "twice the energy we have as a nation, yet our economy is bigger than theirs. It doesn't make sense," underscoring the challenge the US faces in keeping up.