Table of contents:

Introduction.

Modeling framework development.

Factor selection.

Parameter estimation.

Model validation.

Asymmetric alpha architecture.

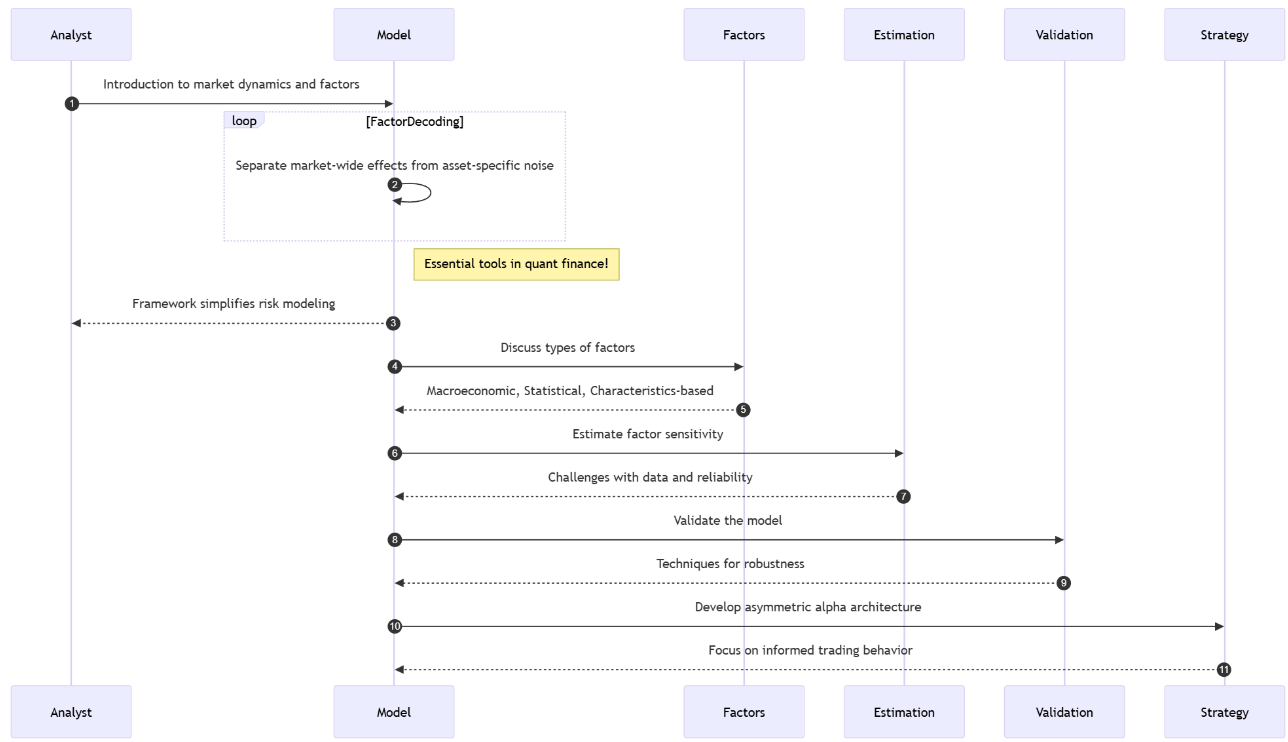

Introduction

Let me tell you about this fascinating puzzle in quantitative finance. You see individual stocks bouncing around like hyperactive electrons, right? But squint at the market long enough, and you start noticing this strange synchronicity–like they’re all humming along to some hidden melody. Which makes you wonder: how the hell do we model this group dance mathematically?

Now, modeling each asset in isolation? That’s financial fantasyland. It’s like assuming every instrument in an orchestra plays without sheet music. Reality? They’re all responding to the same conductors – interest rates, inflation shocks, that useless Trump tweet that tanked SP500 again. This is why we factor model nerds exist.

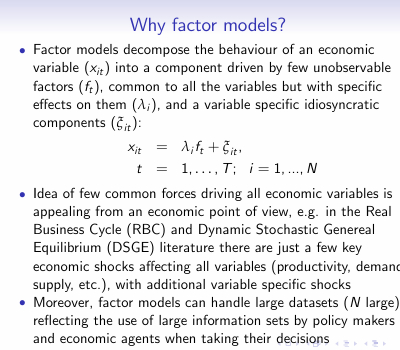

Let me break it down. Factor models are basically financial x-ray vision. I’m saying any asset’s return is really two things mashed together: stuff everyone’s exposed to (we call these factors) plus security-specific noise. Do you remember this equation? We saw it in a previous post:

$$r_t = \alpha + B f_t + \epsilon_t$$

Translation: Your return rₜ is some baseline alpha, plus your factor exposures B times factor moves fₜ, plus whatever weirdness is unique to that stock εₜ. Simple equation, right? But here’s where it gets sexy–the covariance structure:

$$\Omega_r = B\,\Omega_f\,B^\top + \Omega_\epsilon$$

This bad boy reveals market risk’s DNA. The first term? That’s your systematic risk – the market forces we all swim in. The second term? That’s the idiosyncratic stuff you might actually diversify away. Beautiful, but…

[Pauses dramatically] …here’s where PhD students start crying. Four landmines await:

Specification risk: Choose factors that miss latent drivers? It’s like building a weather model that ignores atmospheric pressure. Your tech stock factor might completely whiff on AI revolution dynamics, leaving you blind to concentrated exposures.

Estimation risk: That delicious-looking 20% factor loading? Might be statistical mirage. Financial data’s signal-to-noise ratio makes particle physics look clean–sampling errors compound, turning elegant math into overfit fiction.

Non-stationarity risk: Markets have the memory of a goldfish. Today’s ironclad factor relationship—value vs. growth, anyone?—might invert tomorrow when macro regimes shift. Your backtested beauty becomes a real-time liability.

Multicollinearity risk: When factors bleed together – say, "quality" and "low volatility" doing an interpretive dance–your beta coefficients become Schrödinger’s parameters: simultaneously significant and meaningless.

Yet despite all this? We still use these models. Because when they work–when you nail the factors, tame the noise, respect the instability – you’re not just predicting returns. You’re seeing the market’s hidden skeleton.

Modeling framework development

The journey from observing market synchronicity to constructing a robust factor model is one of translating empirical patterns into a rigorous mathematical framework. The core conceptual leap, captured by the expression rₜ = α + Bfₜ + ϵₜ, is the decision to model the multivariate return vector not as n independent variables, but as a system driven by a smaller set of m common forces fₜ, mediated by a sensitivity matrix B, with residual asset-specific movements ϵₜ. This is the narrative behind the idea: the quest to find the hidden levers that move the market.


In this framework:

rₜ is the vector of excess returns for n assets at time t.

α is the vector of asset-specific intercepts—often interpreted as alpha, though its estimation is fraught.

fₜ is the vector of factor returns at time t, where m≪n.

B is the factor loading matrix, quantifying the sensitivity of each asset to each factor. Bij represents the loading of asset i on factor j.

ϵₜ is the vector of idiosyncratic returns at time t.

The power of this linear decomposition lies in its ability to separate systematic risk—driven by fₜ and B—from idiosyncratic risk—captured by ϵₜ. This separation is not merely academic; it is fundamental to risk management and portfolio construction in algorithmic trading.

The elegance of the framework is amplified by a crucial assumption where the idiosyncratic returns are uncorrelated with the factor returns, and are also uncorrelated with each other across assets.

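Mathematically:

$$\operatorname{Cov}(f_t, \epsilon_t) = 0, \qquad \operatorname{Cov}(\epsilon_t) = \Omega_\epsilon$$

where Ωϵ is a diagonal matrix.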

This assumption, while simplifying the mathematics, represents a significant obstacle in practice. Markets often exhibit subtle, time-varying correlations even in residual terms, challenging the diagonal nature of Ωϵ.

Under these assumptions, the covariance matrix of asset returns, Ωr, takes on the canonical decomposed form we saw in the introduction:

$$\Omega_r = B\,\Omega_f\,B^\top + \Omega_\epsilon$$

where Ωf = Cov(fₜ) is the covariance matrix of the factors.

This equation is the engine of the factor model. It states that the total covariance between assets is the sum of the covariance driven by their shared factor exposures and the covariance arising from their independent idiosyncratic movements.
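To make the decomposition concrete, here is a minimal numerical sketch. It assumes simulated Gaussian data, and the sizes, seed, and variable names are illustrative choices rather than anything from a real dataset. It builds the model-implied covariance from B, Ωf, and Ωϵ, then checks it against the sample covariance of returns simulated from the same model.

```python
import numpy as np

rng = np.random.default_rng(0)
n, m, T = 5, 2, 200_000  # illustrative sizes (assumption)

B = rng.uniform(0.5, 1.5, size=(n, m))          # factor loadings (n x m)
omega_f = np.array([[1.0, 0.3], [0.3, 0.5]])     # factor covariance (m x m)
idio_var = rng.uniform(0.1, 0.4, size=n)         # diagonal of Omega_eps

# Model-implied covariance: Omega_r = B Omega_f B^T + Omega_eps
omega_r_model = B @ omega_f @ B.T + np.diag(idio_var)

# Simulate returns from the same model and compare with the sample covariance
f = rng.multivariate_normal(np.zeros(m), omega_f, size=T)  # (T x m)
eps = rng.normal(size=(T, n)) * np.sqrt(idio_var)          # (T x n), uncorrelated across assets
r = f @ B.T + eps
omega_r_sample = np.cov(r, rowvar=False)

max_abs_gap = np.abs(omega_r_sample - omega_r_model).max()
print("largest entry-wise gap:", round(float(max_abs_gap), 4))
```

With a long simulated sample the gap is small because the data satisfy the assumptions exactly. On real returns, residual correlation would show up as off-diagonal entries the model cannot reproduce.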

This decomposition offers a potent solution to the curse of dimensionality that plagues covariance estimation in large portfolios. A full n×n covariance matrix requires estimating n(n+1)/2 unique parameters. For n=1000 assets, this is just over half a million parameters (500,500)!

A factor model with m=10 factors, however, requires estimating nm elements for B, m(m+1)/2 elements for Ωf, and n elements for the diagonal Ωϵ. This significantly reduces the number of parameters, making estimation more tractable and less prone to error, especially with limited historical data.

The reduction is dramatic.
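The counting argument above is easy to check by hand. The two helper functions below are hypothetical names introduced only for this illustration.

```python
def full_cov_params(n: int) -> int:
    """Unique entries of a symmetric n x n covariance matrix."""
    return n * (n + 1) // 2


def factor_model_params(n: int, m: int) -> int:
    """Entries of B (n*m), of the symmetric Omega_f, and of the diagonal Omega_eps."""
    return n * m + m * (m + 1) // 2 + n


n, m = 1000, 10
full = full_cov_params(n)            # 500500
factor = factor_model_params(n, m)   # 10000 + 55 + 1000 = 11055
print(full, factor, round(full / factor, 1))
```

For these sizes the factor model needs roughly 45 times fewer parameters than the unrestricted covariance matrix.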

However, building this engine involves multiple obstacles:

The first is factor selection: what are the right factors? Are they macroeconomic variables, statistical constructs, or characteristics of the assets themselves? Each choice introduces its own set of assumptions and challenges.

The second is parameter estimation: accurately estimating B, Ωf, and Ωϵ from noisy, non-stationary financial data is a non-trivial task.

The third is validation: how do we rigorously test if our factor model is truly capturing the market's systematic structure or merely fitting historical noise?

These obstacles transform the elegant mathematical framework into a complex engineering challenge, demanding careful consideration at every step.

Factor selection

Choosing the right factors is a scientific discovery guided by economics, statistics, and sometimes, intuition. Do we use macroeconomic variables like interest rate changes or inflation surprises? Do we extract statistical factors using techniques like Principal Component Analysis? Or do we rely on asset characteristics like value, momentum, or size, inspired by empirical observations like the Fama-French factors?

Each choice carries its own baggage:

Macroeconomic factors: Directly linked to economic variables—e.g., changes in inflation, interest rates, GDP growth. They are intuitive but often suffer from low frequency, revision risk, and potential lag between the event and market reaction. Example: A surprise increase in interest rates impacting bond proxies and financials.

Statistical factors: Derived purely from the covariance structure of returns using techniques like Principal Component Analysis. PCA identifies orthogonal linear combinations of assets that explain the maximum variance. While statistically optimal for capturing covariance, the resulting factors may lack economic intuition and their interpretation can shift.

Characteristic factors: Based on observable asset characteristics—e.g., Price-to-Book ratio for Value, market capitalization for Size, past returns for Momentum, debt-to-equity for Quality. These are inspired by empirical anomalies or theoretical risk premia but are susceptible to data mining and the risk that the premium disappears once discovered and arbitraged away.
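The statistical-factor route described above can be sketched in a few lines. This is a toy example on simulated returns, with sizes and names chosen for illustration; it runs PCA through an eigen-decomposition of the sample covariance matrix instead of a dedicated library.

```python
import numpy as np

rng = np.random.default_rng(1)
T, n, m = 1000, 8, 2  # illustrative sizes (assumption)

# Simulated returns with m hidden common drivers (for demonstration only)
hidden_f = rng.normal(size=(T, m))
loadings = rng.normal(size=(n, m))
returns = hidden_f @ loadings.T + 0.3 * rng.normal(size=(T, n))

# PCA via eigen-decomposition of the sample covariance matrix
demeaned = returns - returns.mean(axis=0)
cov = np.cov(demeaned, rowvar=False)
eigvals, eigvecs = np.linalg.eigh(cov)        # eigh returns eigenvalues in ascending order
order = np.argsort(eigvals)[::-1]             # re-sort in descending order
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

explained = eigvals / eigvals.sum()           # variance share per component
stat_factors = demeaned @ eigvecs[:, :m]      # (T x m) statistical factor returns

print("variance share of first components:", np.round(explained[:4], 3))
```

The components are uncorrelated by construction, but nothing guarantees that the first one is "the market" or that its composition stays the same when the sample changes. That is the interpretability cost mentioned above.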

Choosing factors that miss key drivers is like navigating by stars on a cloudy night. If the rise of AI is a major market force, and your factor model lacks an adequate tech innovation factor—perhaps implicitly captured, but poorly—you might severely underestimate the correlated risk within a portfolio of AI-exposed stocks. This is the heart of specification risk–building a model that fundamentally misrepresents the underlying market structure.

For these reasons I like to use vola factors. Let’s code a method to identify them:

import numpy as np

import pandas as pd

from sklearn.linear_model import LinearRegression

# 1) SIMULATE SYNTHETIC DATA

np.random.seed(42)

t = 500 # time periods

n = 10 # number of assets

m = 3 # number of factors

# factor returns (t × m)

factor_returns = np.random.normal(scale=1.0, size=(t, m))

# true loadings B (n × m)

true_B = np.random.uniform(low=0.5, high=1.5, size=(n, m))

# idiosyncratic noise (t × n)

epsilon = np.random.normal(scale=0.5, size=(t, n))

# asset returns: R = F·Bᵀ + ε → shape (t × n)

R = factor_returns @ true_B.T + epsilon

# for clarity, optional DataFrames

dates = pd.date_range(start="2020-01-01", periods=t, freq="D")

assets = [f"Asset_{i+1}" for i in range(n)]

factors = [f"Factor_{j+1}" for j in range(m)]

df_R = pd.DataFrame(R, index=dates, columns=assets)

df_F = pd.DataFrame(factor_returns, index=dates, columns=factors)

# 2) ESTIMATE LOADINGS VIA CROSS‐SECTIONAL REGRESSION

estimated_B = np.zeros((n, m))

for i in range(n):

model = LinearRegression().fit(df_F.values, df_R.iloc[:, i].values)

estimated_B[i, :] = model.coef_

# 3) COMPUTE SYSTEMATIC RETURNS (t × n)

sys_R = factor_returns @ estimated_B.T

# 4) COMPUTE VARIANCES PER ASSET (axis=0)

var_total = R.var(axis=0) # shape (n,)

var_systematic = sys_R.var(axis=0) # shape (n,)

# 5) FRACTION OF VARIANCE EXPLAINED

explained_ratio = var_systematic / var_total

# sanity check: all values should be between 0 and 1, no NaNs

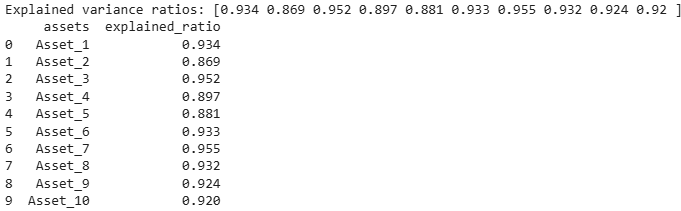

print("Explained variance ratios:", np.round(explained_ratio, 3))

output = {"assets": assets, "explained_ratio": np.round(explained_ratio, 3).tolist()}The output would be:

Here we see how much of each asset's variability the factors explain, which is one way to decide which factors to keep. But it's not the only method; ideally, you should choose a method that matches the nature of the factor: the data must be consistent with the method.

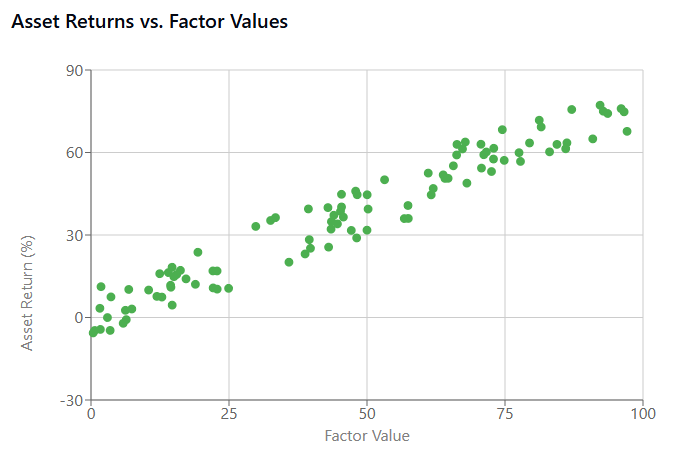

For example if we visualize a strong factor, it shows a clear linear relationship between the factor and asset returns. This suggests the factor captures a genuine market dynamic that could be exploited.

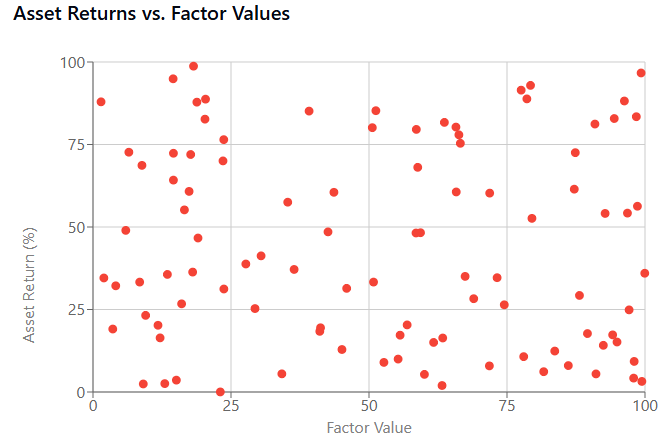

A poor factor exhibits a random cloud pattern, indicating no meaningful relationship. Using this factor would introduce specification risk as it doesn't capture any relevant market dynamics.

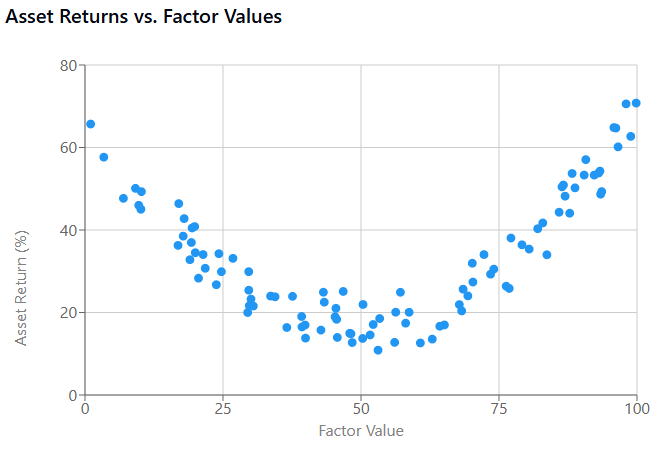

Besides, some factors have nonlinear relationships with returns. Traditional linear models might miss these patterns, creating another type of specification risk.
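A quick way to see the nonlinear case is to compare a linear fit with one that adds a squared term. The sketch below uses a hypothetical asset whose return depends on the square of the factor; the coefficients and sample size are assumptions made for the demo.

```python
import numpy as np

rng = np.random.default_rng(2)
T = 2000
factor = rng.normal(size=T)
# Hypothetical asset with a convex (squared) exposure to the factor
ret = 0.5 * factor**2 + 0.2 * rng.normal(size=T)


def r_squared(y, X):
    """In-sample R² of an OLS fit of y on X (X already includes a constant)."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    return 1.0 - resid.var() / y.var()


ones = np.ones(T)
r2_linear = r_squared(ret, np.column_stack([ones, factor]))
r2_quadratic = r_squared(ret, np.column_stack([ones, factor, factor**2]))
print(f"linear R²: {r2_linear:.3f}, with squared term: {r2_quadratic:.3f}")
```

The linear model sees almost nothing because a symmetric factor is uncorrelated with its own square, even though the dependence is strong. A scatter plot or an extra nonlinear term catches what the linear regression misses.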

Parameter estimation

Even with factors chosen, accurately estimating the factor loadings matrix B and the factor covariance matrix Ωf from noisy, finite financial data is a formidable task. The standard approach often involves running time-series regressions for each asset against the chosen factors to estimate the loadings.

Consider a simple multiple linear regression for a single asset i:

$$r_{i,t} = \alpha_i + \sum_{j=1}^{m} \beta_{ij} f_{j,t} + \epsilon_{i,t}$$

Estimating the β coefficients via Ordinary Least Squares seems straightforward:

$$\hat{B}^\top = (F^\top F)^{-1} F^\top R$$

where R is the T×n matrix of asset returns and F is the T×m matrix of factor returns (add a column of ones to F to estimate α alongside the loadings). However, financial data has a notoriously low signal-to-noise ratio. Outliers, structural breaks, and simple random fluctuations can heavily influence these estimates. The delicious-looking 20% factor loading might indeed be a statistical mirage, an artifact of estimation error rather than a true, stable exposure.

Furthermore, estimating Ωf requires estimating the covariance matrix of the factors themselves. While m≪n, factors can still be correlated, and estimating their covariance matrix from limited data introduces further estimation risk.

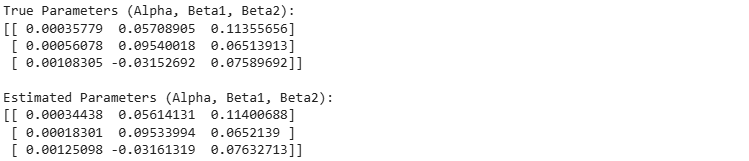

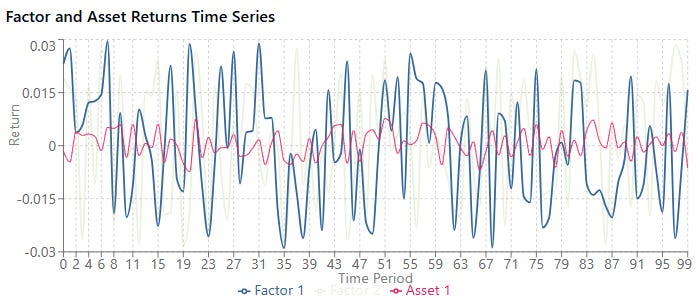

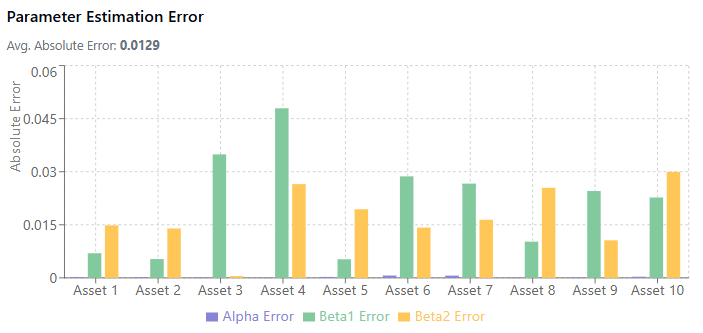

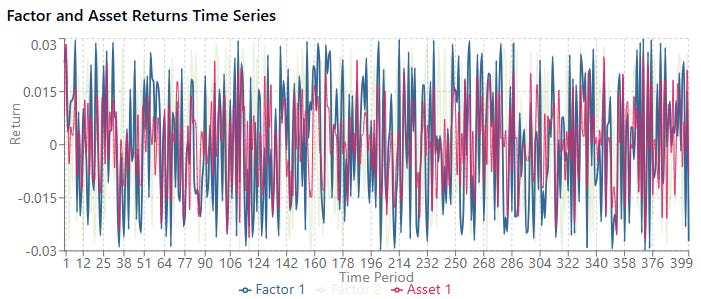

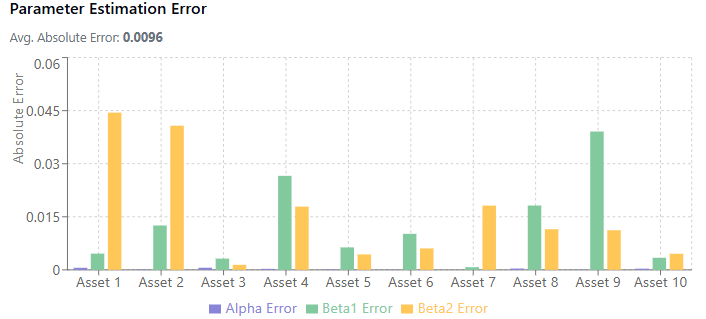

Let’s simulate some noisy data following a factor model structure and then estimate the parameters using OLS, to show how estimation error can occur with limited data.

```python
import numpy as np
import pandas as pd
import statsmodels.api as sm

# Simulate data
np.random.seed(42)
T = 100  # Time periods (limited data)
m = 2    # Number of factors
n = 10   # Number of assets

# Simulate factor returns (e.g., market and size factors)
F = np.random.randn(T, m)
F = sm.add_constant(F, prepend=True)  # Add constant for alpha

# Simulate true factor loadings and alpha
true_alpha = np.random.randn(n, 1) * 0.001  # Small alpha
true_B = np.random.randn(n, m) * 0.1        # True loadings
true_params = np.hstack((true_alpha, true_B))

# Simulate idiosyncratic noise
epsilon = np.random.randn(T, n) * 0.005  # Small idiosyncratic risk

# Simulate asset returns
R = F @ true_params.T + epsilon

# --- Estimation ---
# Estimate parameters for each asset
estimated_params = []
for i in range(n):
    model = sm.OLS(R[:, i], F)
    results = model.fit()
    estimated_params.append(results.params)
estimated_params = np.array(estimated_params)

print("True Parameters (Alpha, Beta1, Beta2):")
print(true_params[:3, :])  # Print for first 3 assets
print("\nEstimated Parameters (Alpha, Beta1, Beta2):")
print(estimated_params[:3, :])  # Print for first 3 assets

# Note: with T=100 and this low noise level the betas are recovered closely,
# but alpha (~0.001) has a standard error of roughly 0.005 / sqrt(100) = 0.0005,
# so its estimate is far less reliable.
# This snippet illustrates the concept of estimating B via regression for each asset
```

The output would be:

Something to keep in mind:

How factor loadings are estimated from financial data.

The impact of sample size on estimation accuracy.

How noise levels affect parameter estimation.

For example, a small sample, low noise levels and beta size achieved these results:

Versus a bigger sample, higher noise and beta size:

Damn! So ugly!

When the noise level is high relative to the beta size, estimates become much less reliable. This is the low signal-to-noise ratio problem in financial data.
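The effect of sample size and noise on a single loading can be quantified with a small Monte Carlo experiment. This is a sketch under simple assumptions: one factor, Gaussian data, and a helper function whose name and defaults are made up for this illustration.

```python
import numpy as np


def beta_estimation_error(T, beta, noise_std, n_trials=2000, seed=0):
    """Std. dev. of the OLS beta estimate across simulated samples (single factor)."""
    rng = np.random.default_rng(seed)
    estimates = np.empty(n_trials)
    for k in range(n_trials):
        f = rng.normal(size=T)
        r = beta * f + noise_std * rng.normal(size=T)
        # OLS slope with intercept: sample cov(f, r) / sample var(f)
        estimates[k] = np.cov(f, r)[0, 1] / f.var(ddof=1)
    return estimates.std()


for T, noise in [(100, 0.005), (100, 0.05), (1000, 0.05)]:
    err = beta_estimation_error(T=T, beta=0.1, noise_std=noise)
    print(f"T={T:5d} noise={noise:.3f} -> std of beta_hat ≈ {err:.4f} (true beta = 0.1)")
```

The spread of the estimate scales roughly like noise_std / sqrt(T) when the factor has unit variance, so ten times more noise needs about a hundred times more data to reach the same precision.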

While not directly visualized, the estimation of the factor covariance matrix Ωf introduces additional estimation risk, especially when factors are correlated.
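To put a number on that last point, the sketch below measures how far the sample factor covariance lands from a known "true" matrix at two sample sizes. The true matrix, the sample sizes, and the helper name are all assumptions made for the demo.

```python
import numpy as np

rng = np.random.default_rng(3)
# Hypothetical "true" covariance of two correlated factors
true_omega_f = np.array([[1.0, 0.6],
                         [0.6, 1.0]])


def mean_cov_error(T, n_trials=500):
    """Average Frobenius-norm error of the sample covariance for sample size T."""
    errors = []
    for _ in range(n_trials):
        f = rng.multivariate_normal(np.zeros(2), true_omega_f, size=T)
        errors.append(np.linalg.norm(np.cov(f, rowvar=False) - true_omega_f))
    return float(np.mean(errors))


err_small, err_large = mean_cov_error(T=60), mean_cov_error(T=2500)
print(f"T=60: {err_small:.3f}   T=2500: {err_large:.3f}")
```

Sixty observations is about five years of monthly data, which is a common situation for macro factors. The error at that size is several times larger than with a long daily history.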

Model validation

Validating a factor model's performance is a perpetual challenge. Standard backtesting involves testing the model on historical data not used in estimation. But given the non-stationarity of markets, a model that fit historical data well might fail to predict future relationships. How do you rigorously test if your model is capturing enduring systematic structure versus simply overfitting to past noise?

The fundamental paradox lies in trying to validate a model designed to predict dynamics in a non-stationary future using only the static record of a past that may not repeat itself. A model can fit historical data exquisitely, capturing all its nuances and patterns, yet fail catastrophically when faced with a new market regime, an unprecedented shock, or a fundamental shift in relationships. This isn't just a challenge, it's an existential threat to the model's utility, where performance relies on accurate, forward-looking inputs.

Standard backtesting, which involves estimating the model on one historical period—the in-sample data—and evaluating its performance on a subsequent, distinct period—the out-of-sample data—is a necessary first step but is profoundly insufficient on its own. It can tell you if the model had explanatory power over a specific past period it didn't see during training, but it provides limited assurance that those relationships will hold in the future.

Robust validation requires a multi-faceted approach, examining different aspects of the model's performance and assumptions:

How much of the variation in asset returns (either over time for a single asset or across assets at a single point in time) does the model actually explain? This is often measured by R². High time-series R² for an individual asset regression indicates its returns are strongly driven by the factors. High cross-sectional R² at a given time indicates that the factor exposures effectively explain the differences in returns across assets at that moment.

While high R² is desirable (it suggests the factors are capturing common variance), it is a measure of fit, not necessarily predictive power. A model can have high historical R² by overfitting to noise or temporary relationships.
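The two flavors of R² are computed along different axes of the same return matrix. Here is a minimal sketch on simulated data; the sizes are illustrative, and the loadings are treated as known for the cross-sectional regressions, which is a simplifying assumption.

```python
import numpy as np

rng = np.random.default_rng(4)
T, n, m = 250, 50, 3  # illustrative sizes (assumption)
F = rng.normal(scale=0.01, size=(T, m))           # factor returns
B = rng.normal(loc=1.0, scale=0.5, size=(n, m))   # loadings, assumed known here
R = F @ B.T + rng.normal(scale=0.01, size=(T, n))


def ols_r2(y, X):
    """R² of regressing y on X plus an intercept."""
    X1 = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(X1, y, rcond=None)
    resid = y - X1 @ coef
    return 1.0 - resid.var() / y.var()


# Time-series R²: one regression per asset, over time, on the factor returns
ts_r2 = np.array([ols_r2(R[:, i], F) for i in range(n)])
# Cross-sectional R²: one regression per date, across assets, on the loadings
cs_r2 = np.array([ols_r2(R[t, :], B) for t in range(T)])

print(f"mean time-series R²: {ts_r2.mean():.2f}, mean cross-sectional R²: {cs_r2.mean():.2f}")
```

Both numbers are in-sample measures of fit, so the caveat above applies to each of them.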

Are the estimated factor loadings Bij statistically significant? Standard t-tests on the regression coefficients are used here.

A statistically insignificant loading suggests that, based on the historical data, you cannot confidently say the asset has exposure to that factor. Beyond statistical significance, the loadings should also be economically sensible.

Does the sign and magnitude of a stock's beta to the energy factor align with what you know about the company? Inconsistent or illogical loadings can signal model misspecification, data errors, or multicollinearity.
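One common screen for the multicollinearity case is the variance inflation factor (VIF). The text above does not prescribe this check; it is a standard diagnostic swapped in here as an illustration. The factor names and the simulated overlap between "quality" and "low volatility" are hypothetical.

```python
import numpy as np


def variance_inflation_factors(F):
    """VIF_j = 1 / (1 - R²_j), where R²_j comes from regressing factor j on the others."""
    T, m = F.shape
    vifs = np.empty(m)
    for j in range(m):
        y = F[:, j]
        X = np.column_stack([np.ones(T), np.delete(F, j, axis=1)])
        coef, *_ = np.linalg.lstsq(X, y, rcond=None)
        resid = y - X @ coef
        r2 = 1.0 - resid.var() / y.var()
        vifs[j] = 1.0 / (1.0 - r2)
    return vifs


rng = np.random.default_rng(5)
T = 1000
quality = rng.normal(size=T)
low_vol = 0.9 * quality + 0.3 * rng.normal(size=T)  # hypothetical: overlaps heavily with quality
momentum = rng.normal(size=T)                        # hypothetical: unrelated factor
vifs = variance_inflation_factors(np.column_stack([quality, low_vol, momentum]))
print(dict(zip(["quality", "low_vol", "momentum"], np.round(vifs, 2))))
```

A VIF near 1 means a factor carries independent information. Values well above that mean the loading on that factor is hard to separate from its neighbors, which is exactly when signs and magnitudes start looking illogical.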

How well does the model predict future returns (aka alpha) and, crucially, future risk (aka covariance)? Predicting alpha is notoriously difficult due to its low signal-to-noise ratio. Validating risk predictions is more tractable and often the primary goal of factor models in practice.
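A risk forecast can be checked by estimating the model on one period and comparing the predicted portfolio volatility with what is realized afterwards. The sketch below does this on simulated, stationary data with an equal-weight portfolio; the sizes, the split, and the weighting are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(6)
T, n, m = 1000, 20, 3  # illustrative sizes (assumption)
F = rng.normal(scale=0.01, size=(T, m))
B_true = rng.uniform(0.5, 1.5, size=(n, m))
R = F @ B_true.T + rng.normal(scale=0.01, size=(T, n))

split = T // 2
F_in, R_in, R_out = F[:split], R[:split], R[split:]

# In-sample estimation: loadings by OLS (with intercept), then the two covariance pieces
X = np.column_stack([np.ones(split), F_in])
coef, *_ = np.linalg.lstsq(X, R_in, rcond=None)   # shape (m + 1, n)
B_hat = coef[1:].T                                 # (n x m)
resid = R_in - X @ coef
omega_f_hat = np.cov(F_in, rowvar=False)
omega_eps_hat = np.diag(resid.var(axis=0, ddof=m + 1))
omega_r_hat = B_hat @ omega_f_hat @ B_hat.T + omega_eps_hat

# Out-of-sample check on an equal-weight portfolio
w = np.full(n, 1.0 / n)
predicted_vol = np.sqrt(w @ omega_r_hat @ w)
realized_vol = (R_out @ w).std(ddof=1)
print(f"predicted vol: {predicted_vol:.4f}, realized vol: {realized_vol:.4f}")
```

Here the two numbers agree closely because the simulated world does not change between the two halves. On real data, the gap between predicted and realized volatility is the quantity to track over time.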

The inherent difficulty is that a model validated on data from the 2010s might fail to capture the dynamics of a 2020s market shaped by new technologies, pandemics, and geopolitical shifts. The market's memory is short, and its structure is fluid.

The resolution to this paradox lies in adopting dynamic validation methodologies that acknowledge and simulate this non-stationarity. A minimum set of sanity tests would be:

Explanatory power.

Significance test.

Residual analysis and diagnostics.

Rolling window validation.

Walk-forward validation.

Regime-based validation.

Monte Carlo robustness.

Of course, there are more, and you don't have to use all of the above. Choose a combination that checks a minimum of the factor properties.

Personally, although I haven't included it in the list above, I prefer combinatorial cross-validation with competing agents—we'll see what this is in future articles. For now I leave you the code for all the techniques mentioned above in the appendix.

Asymmetric alpha architecture

Alpha architecture is built on the premise that asymmetric information leaves footprints—in price action, volume patterns, options flow, and sectoral behavior. By systematically scanning for these anomalies, quants can construct directional and volatility-based positions that anticipate institutional moves.

Let’s outline the structural components of such a strategy, divided into three main branches:

Long bias:

The long bias component of the architecture is designed to capture anticipatory accumulation in equities or the broader index, e.g., via the SPY ETF. Institutional buyers, informed insiders, and smart-money operators often move in advance of catalysts such as earnings beats, mergers, or positive macro data. They cannot hide their footprints entirely. Here's how we identify them:

a. Options volume and dark pool activity:

Unusual options volume in near-term, out-of-the-money calls—particularly when not justified by news—often signals insider expectations of a bullish event. These moves are even more predictive when coupled with dark pool accumulation, where institutional trades are executed out of the public limit order book to avoid detection. Surges in dark pool activity—above 3x average volume—suggest quiet accumulation.

b. Clustered insider buying:

Legal insider trades—e.g., Form 4 filings—are public, but largely ignored. When multiple executives at the same company buy within a short window, the predictive power spikes. This clustered buying often precedes rallies, as insiders rarely risk personal capital without high conviction. The architecture prioritizes multi-executive buys rather than single, token purchases.

c. Leading earnings or macro releases:

When price and volume begin to move several days before a known event, and this movement aligns with increased options and dark pool signals, it can indicate anticipation of favorable data. The architecture interprets these moves not as random walk anomalies, but as strategic positioning by informed actors.

Execution logic:

Upon the confluence of these three conditions:

A long position is entered in the flagged equity or in SPY if the signal is broad-based.

Position sizing is constrained by the volatility of the asset and the strength of the signal.

Time stops are used: positions are closed if no follow-through occurs within 3–5 trading days.
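The long-bias rules above can be written down as a small decision function. This is only a sketch: the field names, the "at least two executives" reading of clustered buying, the 1% risk budget, and the sizing formula are assumptions made for illustration. The 3x dark pool threshold and the 3–5 day time stop come from the text.

```python
from dataclasses import dataclass


@dataclass
class LongSignals:
    unusual_otm_call_volume: bool   # condition (a), options leg
    dark_pool_volume_ratio: float   # today's dark pool volume / its average
    insider_buyers_in_window: int   # distinct executives buying (Form 4)
    pre_event_drift: bool           # condition (c): price/volume moving ahead of the event


def long_entry(sig: LongSignals) -> bool:
    """True only when all three footprints line up."""
    cond_a = sig.unusual_otm_call_volume and sig.dark_pool_volume_ratio > 3.0
    cond_b = sig.insider_buyers_in_window >= 2  # "clustered" read as at least 2 (assumption)
    cond_c = sig.pre_event_drift
    return cond_a and cond_b and cond_c


def position_size(capital, signal_strength, daily_vol, risk_budget=0.01):
    """Volatility-scaled notional: stronger signal and calmer asset -> larger position."""
    strength = min(max(signal_strength, 0.0), 1.0)
    return capital * risk_budget * strength / daily_vol


def time_stop_hit(days_held, followed_through, max_days=5):
    """Close the trade if no follow-through occurred within the time stop."""
    return days_held >= max_days and not followed_through


sig = LongSignals(True, 3.4, 2, True)
if long_entry(sig):
    size = position_size(capital=100_000, signal_strength=0.8, daily_vol=0.02)
    print(f"enter long, notional ≈ {size:,.0f}")
```

Requiring all three conditions keeps the trade count low on purpose, since each footprint on its own is noisy.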

Short bias:

The short bias logic mirrors the Long Bias, but focuses on the footprints of negative asymmetric information: knowledge of weak earnings, regulatory risk, economic downgrades, or company-specific issues. Here, we're looking for signs that the informed money is quietly exiting or hedging.

a. Put accumulation and skew changes:

Sharp spikes in put volume—particularly deep OTM puts—signal bearish expectations. When this is paired with rising skew—implied volatility of puts rising faster than calls—the implication is that participants are expecting downside volatility. These are not small retail bets but likely institutional hedges—or speculative shorts informed by privileged insight.

b. Insider selling and dark pool exits:

While insider selling is less predictive than buying—due to diversification and tax reasons—it becomes meaningful when paired with dark pool exits. A surge in dark pool sell volume, coupled with Form 4 filings of insider sales, suggests strategic de-risking.

c. Sector rotation and defensive flows:

Often, asymmetric information relates not to one firm but to macro themes. For instance, if large funds rotate from tech to staples or utilities—especially during a period of calm markets—it may imply that informed players anticipate risk-off events, such as Fed policy tightening or recessionary prints. This logic applies not just to equities, but to sector ETFs—XLF, XLK, XLU, etc.

Execution logic:

Upon identification of these bearish footprints:

A short position is entered via puts on the individual equity, sector ETF, or SPY.

Alternatively, inverse ETFs—e.g., SH or SDS—can be used in non-margin environments.

Signals are time-bound to 3–5 days unless the catalyst is imminent—e.g., earnings tomorrow.

Risk is defined sharply: no averaging down on shorts; stop-losses must be honored 🙃

Volatility:

Markets often underprice volatility, especially when no catalyst is on the horizon. Ironically, this is when informed traders move—because they know what’s coming. The volatility component of the alpha architecture exploits this inefficiency by purchasing straddles or strangles in low-volatility conditions when event-driven movement is suspected.

a. Cheap implied volatility (IV) relative to realized volatility (RV):

One of the cleanest asymmetry signals is when IV is low but RV is rising or expected to rise. This divergence suggests that market-makers are complacent, and traders can load up on long-volatility positions at favorable prices.
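The IV-versus-RV comparison can be sketched as follows. The window lengths, the 252-day annualization, and the function names are assumptions for illustration, and the return path is simulated.

```python
import numpy as np


def realized_vol(daily_returns, trading_days=252):
    """Annualized realized volatility from daily returns."""
    return np.std(daily_returns, ddof=1) * np.sqrt(trading_days)


def cheap_vol_signal(implied_vol, daily_returns, short=10, long=60):
    """Flag when IV sits below recent RV while RV is rising (short window above long window)."""
    rv_short = realized_vol(daily_returns[-short:])
    rv_long = realized_vol(daily_returns[-long:])
    return bool(implied_vol < rv_short and rv_short > rv_long)


rng = np.random.default_rng(7)
# Hypothetical path: 50 calm days followed by 10 turbulent days
returns = np.concatenate([rng.normal(scale=0.005, size=50),
                          rng.normal(scale=0.02, size=10)])
print(cheap_vol_signal(implied_vol=0.12, daily_returns=returns))
```

In practice the implied volatility would come from an options data feed, and the comparison should use IV and RV measured over matching horizons.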

b. Overlap with asymmetric footprints:

If dark pool activity, options flow, or insider moves suggest a big directional move, but the direction is uncertain, the architecture chooses not to guess. Instead, it buys volatility. Straddles—buying both a call and a put at the same strike—or strangles—same logic, different strikes—profit from large moves in either direction.

This is common before:

FOMC meetings.

Earnings from bellwethers—AAPL, JPM, etc.

Major macro releases—NFP, CPI, ISM, etc.

Geopolitical events.
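The payoff logic of the straddle and strangle mentioned above fits in two short functions. Prices and premiums below are hypothetical, quoted per share, and ignore contract multipliers, fees, and early exit.

```python
def straddle_pnl(spot_at_expiry, strike, call_premium, put_premium):
    """P&L at expiry of a long straddle (long call + long put, same strike)."""
    call_payoff = max(spot_at_expiry - strike, 0.0)
    put_payoff = max(strike - spot_at_expiry, 0.0)
    return call_payoff + put_payoff - (call_premium + put_premium)


def strangle_pnl(spot_at_expiry, put_strike, call_strike, call_premium, put_premium):
    """P&L at expiry of a long strangle (OTM put below spot, OTM call above spot)."""
    call_payoff = max(spot_at_expiry - call_strike, 0.0)
    put_payoff = max(put_strike - spot_at_expiry, 0.0)
    return call_payoff + put_payoff - (call_premium + put_premium)


# Hypothetical quotes: strike 500, call costs 6, put costs 5 -> breakevens at 489 and 511
for spot in (480, 500, 520):
    print(spot, straddle_pnl(spot, strike=500, call_premium=6.0, put_premium=5.0))
```

The position loses the full premium if the price pins the strike and profits only when the move exceeds the combined premium, in either direction. The strangle costs less up front but needs a larger move to pay off.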

c. Event calendar overlay:

The architecture includes a calendar of market-moving scheduled events, and cross-references them with current footprints of informed activity. When overlap occurs (e.g., dark pool surge in SPY + low IV + Fed meeting in 48 hours), volatility plays are prioritized over directional bets.

Execution logic:

Trades are placed via options on SPY or liquid large-cap stocks.

Greeks are monitored; theta (time decay) is minimized by keeping exposure short-dated.

Delta-neutrality is maintained where possible until the move begins.

Okay, okay, I know you'd like more, but it's time to wrap things up here. Great work, guys! I hope you enjoyed this one and can get some use out of it.

Rest up today: clear the clutter, sharpen your heuristics, and let every anomaly spark advantage. Keep your code nimble, your risk tighter, and your edge diamond‑keen. Stay fearless, stay quant. 🚀

PS: Do you see alpha decay faster in ML-based strategies vs. simpler quant strategies?


Appendix

Model validation script:

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import statsmodels.api as sm

from statsmodels.stats.stattools import durbin_watson

from scipy import stats

from sklearn.model_selection import TimeSeriesSplit

from sklearn.metrics import r2_score

import seaborn as sns

class FactorModelValidator:

"""

A comprehensive class for validating factor models while accounting for

non-stationarity in financial markets.

"""

def __init__(self, returns_df, factors_df):

"""

Initialize with returns and factors DataFrames

Parameters:

-----------

returns_df : pandas DataFrame

Asset returns with dates as index and assets as columns

factors_df : pandas DataFrame

Factor returns with matching dates as index and factors as columns

"""

self.returns = returns_df

self.factors = factors_df

self.aligned_data = self._align_data()

def _align_data(self):

"""Align returns and factors on matching dates"""

common_dates = self.returns.index.intersection(self.factors.index)

return {

'returns': self.returns.loc[common_dates],

'factors': self.factors.loc[common_dates]

}

def fit_factor_model(self, asset, factors=None):

"""

Fit a factor model for a single asset

Parameters:

-----------

asset : str

Column name of the asset in the returns DataFrame

factors : list, optional

List of factor names to include (defaults to all)

Returns:

--------

model_results : statsmodels RegressionResults

Results of the factor model regression

"""

if factors is None:

factors = self.factors.columns.tolist()

y = self.aligned_data['returns'][asset]

X = self.aligned_data['factors'][factors]

X = sm.add_constant(X)

model = sm.OLS(y, X)

results = model.fit()

return results

def calculate_explanatory_power(self, asset, factors=None):

"""

Calculate R² for a given asset's factor model

Returns:

--------

r_squared : float

The R² value representing explanatory power

"""

results = self.fit_factor_model(asset, factors)

return results.rsquared

def factor_significance_test(self, asset, factors=None):

"""

Test statistical and economic significance of factors

Returns:

--------

significance_df : pandas DataFrame

DataFrame with factor t-stats, p-values, and coefficients

"""

results = self.fit_factor_model(asset, factors)

# Extract statistical significance metrics

significance_df = pd.DataFrame({

'coefficient': results.params[1:], # Skip intercept

't_statistic': results.tvalues[1:],

'p_value': results.pvalues[1:],

'significant_5pct': results.pvalues[1:] < 0.05

})

# Add economic significance

significance_df['economic_impact'] = significance_df['coefficient'] * \

self.aligned_data['factors'][significance_df.index].std()

return significance_df

def residual_analysis(self, asset, factors=None):

"""

Analyze residuals for serial correlation and heteroskedasticity

Returns:

--------

dict : Dictionary of test results

"""

results = self.fit_factor_model(asset, factors)

residuals = results.resid

# Durbin-Watson test for serial correlation

dw_stat = durbin_watson(residuals)

# Breusch-Pagan test for heteroskedasticity

bp_test = sm.stats.diagnostic.het_breuschpagan(residuals, results.model.exog)

# Shapiro-Wilk test for normality

sw_test = stats.shapiro(residuals)

return {

'durbin_watson': dw_stat,

'serial_correlation': 'Present' if (dw_stat < 1.5 or dw_stat > 2.5) else 'Not detected',

'breusch_pagan_p_value': bp_test[1],

'heteroskedasticity': 'Present' if bp_test[1] < 0.05 else 'Not detected',

'shapiro_p_value': sw_test[1],

'residuals_normal': 'Yes' if sw_test[1] >= 0.05 else 'No'

}

def plot_residual_diagnostics(self, asset, factors=None):

"""Generate diagnostic plots for residuals"""

results = self.fit_factor_model(asset, factors)

residuals = results.resid

fitted = results.fittedvalues

fig, axes = plt.subplots(2, 2, figsize=(14, 10))

# Residuals vs Fitted plot

axes[0, 0].scatter(fitted, residuals, alpha=0.5)

axes[0, 0].axhline(y=0, color='r', linestyle='-')

axes[0, 0].set_xlabel('Fitted Values')

axes[0, 0].set_ylabel('Residuals')

axes[0, 0].set_title('Residuals vs Fitted')

# QQ plot

sm.qqplot(residuals, line='45', ax=axes[0, 1])

axes[0, 1].set_title('QQ Plot')

# Histogram of residuals

axes[1, 0].hist(residuals, bins=30, edgecolor='k', alpha=0.7)

axes[1, 0].set_xlabel('Residual Value')

axes[1, 0].set_ylabel('Frequency')

axes[1, 0].set_title('Residual Distribution')

# Residual autocorrelation plot

sm.graphics.tsa.plot_acf(residuals, lags=20, ax=axes[1, 1])

axes[1, 1].set_title('Residual Autocorrelation')

plt.tight_layout()

return fig

def rolling_window_validation(self, asset, factors=None, window_size=252):

"""

Perform rolling window validation to test model stability

Parameters:

-----------

window_size : int

Size of rolling window in trading days (default 252 = 1 year)

Returns:

--------

rolling_metrics : pandas DataFrame

Time series of validation metrics

"""

if factors is None:

factors = self.factors.columns.tolist()

returns_series = self.aligned_data['returns'][asset]

factors_df = self.aligned_data['factors'][factors]

dates = factors_df.index[window_size:]

rolling_r2 = []

for i in range(len(dates)):

end_idx = window_size + i

start_idx = i

window_X = factors_df.iloc[start_idx:end_idx]

window_y = returns_series.iloc[start_idx:end_idx]

X = sm.add_constant(window_X)

model = sm.OLS(window_y, X).fit()

rolling_r2.append({

'date': dates[i],

'r2': model.rsquared,

'adj_r2': model.rsquared_adj

})

return pd.DataFrame(rolling_r2).set_index('date')

    def walk_forward_validation(self, asset, factors=None, n_splits=5):
        """
        Perform walk-forward validation (expanding window)

        Parameters:
        -----------
        asset : str
            Column name of the asset in the returns data
        factors : list of str, optional
            Factor columns to use (default: all factors)
        n_splits : int
            Number of validation periods

        Returns:
        --------
        wf_metrics : pandas DataFrame
            Out-of-sample performance metrics, one row per split
        """
        if factors is None:
            factors = self.factors.columns.tolist()

        returns_series = self.aligned_data['returns'][asset]
        factors_df = self.aligned_data['factors'][factors]

        tscv = TimeSeriesSplit(n_splits=n_splits)
        metrics = []

        for train_idx, test_idx in tscv.split(factors_df):
            # Train set
            X_train = factors_df.iloc[train_idx]
            y_train = returns_series.iloc[train_idx]

            # Test set
            X_test = factors_df.iloc[test_idx]
            y_test = returns_series.iloc[test_idx]

            # Add constant and fit model. has_constant='add' forces the intercept
            # column even if a factor happens to be constant within a split, so
            # the train and test design matrices always have the same columns
            X_train_const = sm.add_constant(X_train, has_constant='add')
            model = sm.OLS(y_train, X_train_const).fit()

            # Predict on test set
            X_test_const = sm.add_constant(X_test, has_constant='add')
            y_pred = model.predict(X_test_const)

            # Calculate metrics
            oos_r2 = r2_score(y_test, y_pred)
            mae = np.mean(np.abs(y_test - y_pred))
            rmse = np.sqrt(np.mean((y_test - y_pred) ** 2))

            # Store results
            test_start = factors_df.index[test_idx[0]].strftime('%Y-%m')
            test_end = factors_df.index[test_idx[-1]].strftime('%Y-%m')

            metrics.append({
                'period': f"{test_start} to {test_end}",
                'oos_r2': oos_r2,
                'mae': mae,
                'rmse': rmse,
                'train_r2': model.rsquared
            })

        return pd.DataFrame(metrics)

    def regime_based_validation(self, asset, factors=None, regime_column=None):
        """
        Validate model performance across different market regimes

        Parameters:
        -----------
        asset : str
            Column name of the asset in the returns data
        factors : list of str, optional
            Factor columns to use (default: all factors)
        regime_column : str or pd.Series
            Column name in factors DataFrame or Series with regime labels

        Returns:
        --------
        regime_metrics : pandas DataFrame
            Performance metrics for each regime
        """
        if regime_column is None:
            raise ValueError("regime_column must be a column name or a Series of regime labels")

        if factors is None:
            factors = self.factors.columns.tolist()

        if isinstance(regime_column, str):
            regimes = self.factors[regime_column]
            # The label column is not a regressor, so keep it out of the factor list
            factors = [f for f in factors if f != regime_column]
        else:
            regimes = regime_column

        # Ensure regimes align with our data
        regimes = regimes.loc[self.aligned_data['factors'].index]

        returns_series = self.aligned_data['returns'][asset]
        factors_df = self.aligned_data['factors'][factors]

        unique_regimes = regimes.unique()
        regime_results = []

        # Fit overall model for comparison
        X_all = sm.add_constant(factors_df)
        overall_model = sm.OLS(returns_series, X_all).fit()

        for regime in unique_regimes:
            regime_mask = (regimes == regime)
            n_obs = int(regime_mask.sum())

            # Skip if not enough data points
            if n_obs < len(factors) + 5:
                continue

            X_regime = factors_df.loc[regime_mask]
            y_regime = returns_series.loc[regime_mask]

            X_regime_const = sm.add_constant(X_regime)
            regime_model = sm.OLS(y_regime, X_regime_const).fit()

            # Compare factor loadings against the full-sample fit
            factor_changes = {}
            for factor in factors:
                overall_coef = overall_model.params[factor]
                regime_coef = regime_model.params[factor]

                factor_changes[f'{factor}_change'] = regime_coef - overall_coef
                factor_changes[f'{factor}_pct_change'] = (
                    (regime_coef - overall_coef) / overall_coef
                    if overall_coef != 0 else np.nan
                )

            regime_results.append({
                'regime': regime,
                'obs_count': n_obs,
                'r2': regime_model.rsquared,
                'adj_r2': regime_model.rsquared_adj,
                **factor_changes
            })

        return pd.DataFrame(regime_results)

    def monte_carlo_robustness(self, asset, factors=None, n_simulations=1000, bootstrap_pct=0.8):
        """
        Test model robustness using bootstrapped samples

        Parameters:
        -----------
        asset : str
            Column name of the asset in the returns data
        factors : list of str, optional
            Factor columns to use (default: all factors)
        n_simulations : int
            Number of bootstrap simulations
        bootstrap_pct : float
            Fraction of the sample size drawn (with replacement) in each simulation

        Returns:
        --------
        bootstrap_results : dict
            Summary statistics (mean, std, 5th/95th percentiles, sign stability)
            of R² and of each coefficient across simulations
        """
        if factors is None:
            factors = self.factors.columns.tolist()

        returns_series = self.aligned_data['returns'][asset]
        factors_df = self.aligned_data['factors'][factors]

        # Full-sample fit: the reference point for the sign-stability check
        full_model = sm.OLS(returns_series, sm.add_constant(factors_df)).fit()

        # Storage for results
        coef_distributions = {factor: [] for factor in factors}
        coef_distributions['intercept'] = []
        r2_values = []

        n_samples = int(len(returns_series) * bootstrap_pct)

        for _ in range(n_simulations):
            # Generate bootstrap sample indices (rows drawn independently,
            # so any serial dependence in the data is not preserved)
            sample_indices = np.random.choice(len(returns_series), size=n_samples, replace=True)

            # Extract bootstrap sample
            X_sample = factors_df.iloc[sample_indices]
            y_sample = returns_series.iloc[sample_indices]

            # Fit model
            X_sample_const = sm.add_constant(X_sample)
            bs_model = sm.OLS(y_sample, X_sample_const).fit()

            # Store results
            r2_values.append(bs_model.rsquared)
            coef_distributions['intercept'].append(bs_model.params['const'])
            for factor in factors:
                coef_distributions[factor].append(bs_model.params[factor])

        # Calculate stability metrics
        results = {
            'r2_mean': np.mean(r2_values),
            'r2_std': np.std(r2_values),
            'r2_5th': np.percentile(r2_values, 5),
            'r2_95th': np.percentile(r2_values, 95),
        }

        # Add coefficient stability metrics
        for factor in ['intercept'] + factors:
            coef_array = np.array(coef_distributions[factor])

            results[f'{factor}_mean'] = np.mean(coef_array)
            results[f'{factor}_std'] = np.std(coef_array)
            results[f'{factor}_5th'] = np.percentile(coef_array, 5)
            results[f'{factor}_95th'] = np.percentile(coef_array, 95)

            # Sign stability (% of samples where sign matches full sample estimate).
            # Compare against the full-sample fit, not the last bootstrap draw
            param_name = 'const' if factor == 'intercept' else factor
            full_sample_coef = full_model.params[param_name]

            sign_consistency = np.mean((coef_array > 0) == (full_sample_coef > 0))
            results[f'{factor}_sign_stability'] = sign_consistency

        return results

    def plot_factor_correlation_heatmap(self, factors=None):
        """Generate heatmap of factor correlations"""
        if factors is None:
            factors = self.factors.columns.tolist()

        corr_matrix = self.aligned_data['factors'][factors].corr()

        plt.figure(figsize=(10, 8))
        sns.heatmap(corr_matrix, annot=True, cmap='coolwarm', center=0, fmt='.2f')
        plt.title('Factor Correlation Matrix')

        return plt.gcf()
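Before we run the thing, a quick sanity check on what "expanding window" means in walk_forward_validation. Here's a minimal pure-Python sketch of the split logic. Heads up: expanding_window_splits is a hypothetical helper I made up for illustration, and it mimics what I understand to be TimeSeriesSplit's default behavior (no gap, no cap on training size, equal-sized test folds). Treat it as a mental model, not as the library's actual implementation.

```python
def expanding_window_splits(n_samples, n_splits=5):
    """Yield (train_indices, test_indices) pairs for an expanding window.

    Hypothetical helper for illustration only: assumes equal-sized test
    folds taken from the end of the sample, with no gap between train
    and test and no cap on the training set size.
    """
    test_size = n_samples // (n_splits + 1)
    if test_size == 0:
        raise ValueError("Not enough samples for the requested number of splits")

    first_test_start = n_samples - n_splits * test_size
    for test_start in range(first_test_start, n_samples, test_size):
        # Training data is everything strictly before the test fold
        train_idx = list(range(0, test_start))
        test_idx = list(range(test_start, test_start + test_size))
        yield train_idx, test_idx


splits = list(expanding_window_splits(12, n_splits=3))
for train_idx, test_idx in splits:
    print(f"train 0..{train_idx[-1]} | test {test_idx[0]}..{test_idx[-1]}")
# train 0..2 | test 3..5
# train 0..5 | test 6..8
# train 0..8 | test 9..11
```

Notice the training set only ever grows and always ends right before the test fold starts. That's the whole point: the model never gets to peek at the future it's being graded on.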

# Example usage:

# Create sample data
dates = pd.date_range(start='2015-01-01', end='2022-12-31', freq='B')
np.random.seed(42)

# Generate factor returns
n_dates = len(dates)

factors_data = {
    'Market': 0.0001 + 0.01 * np.random.randn(n_dates),
    'Size': -0.0002 + 0.008 * np.random.randn(n_dates),
    'Value': 0.0001 + 0.009 * np.random.randn(n_dates),
    'Momentum': 0.0003 + 0.012 * np.random.randn(n_dates)
}

# Add regime indicator (simplified)
regimes = []
for i in range(n_dates):
    if i < n_dates // 3:
        regimes.append('Bull Market')
    elif i < 2 * n_dates // 3:
        regimes.append('Crisis')
    else:
        regimes.append('Recovery')

factors_data['Regime'] = regimes
factors_df = pd.DataFrame(factors_data, index=dates)

# Generate asset returns
true_betas = {
    'Asset1': {'Market': 1.2, 'Size': 0.5, 'Value': -0.3, 'Momentum': 0.1},
    'Asset2': {'Market': 0.8, 'Size': -0.2, 'Value': 0.7, 'Momentum': -0.4}
}

returns_data = {}
for asset, betas in true_betas.items():
    asset_returns = 0.0002  # alpha
    for factor, beta in betas.items():
        asset_returns += beta * factors_df[factor]

    # Add idiosyncratic risk
    asset_returns += 0.015 * np.random.randn(n_dates)
    returns_data[asset] = asset_returns

returns_df = pd.DataFrame(returns_data, index=dates)

# Create validator
validator = FactorModelValidator(returns_df, factors_df.drop('Regime', axis=1))

# Perform validation
asset = 'Asset1'

explanatory_power = validator.calculate_explanatory_power(asset)
print(f"Explanatory Power (R²): {explanatory_power:.4f}")

factor_significance = validator.factor_significance_test(asset)
print("\nFactor Significance:")
print(factor_significance)

residual_tests = validator.residual_analysis(asset)
print("\nResidual Analysis:")
for test, result in residual_tests.items():
    print(f"{test}: {result}")