Table of contents:

Introduction.

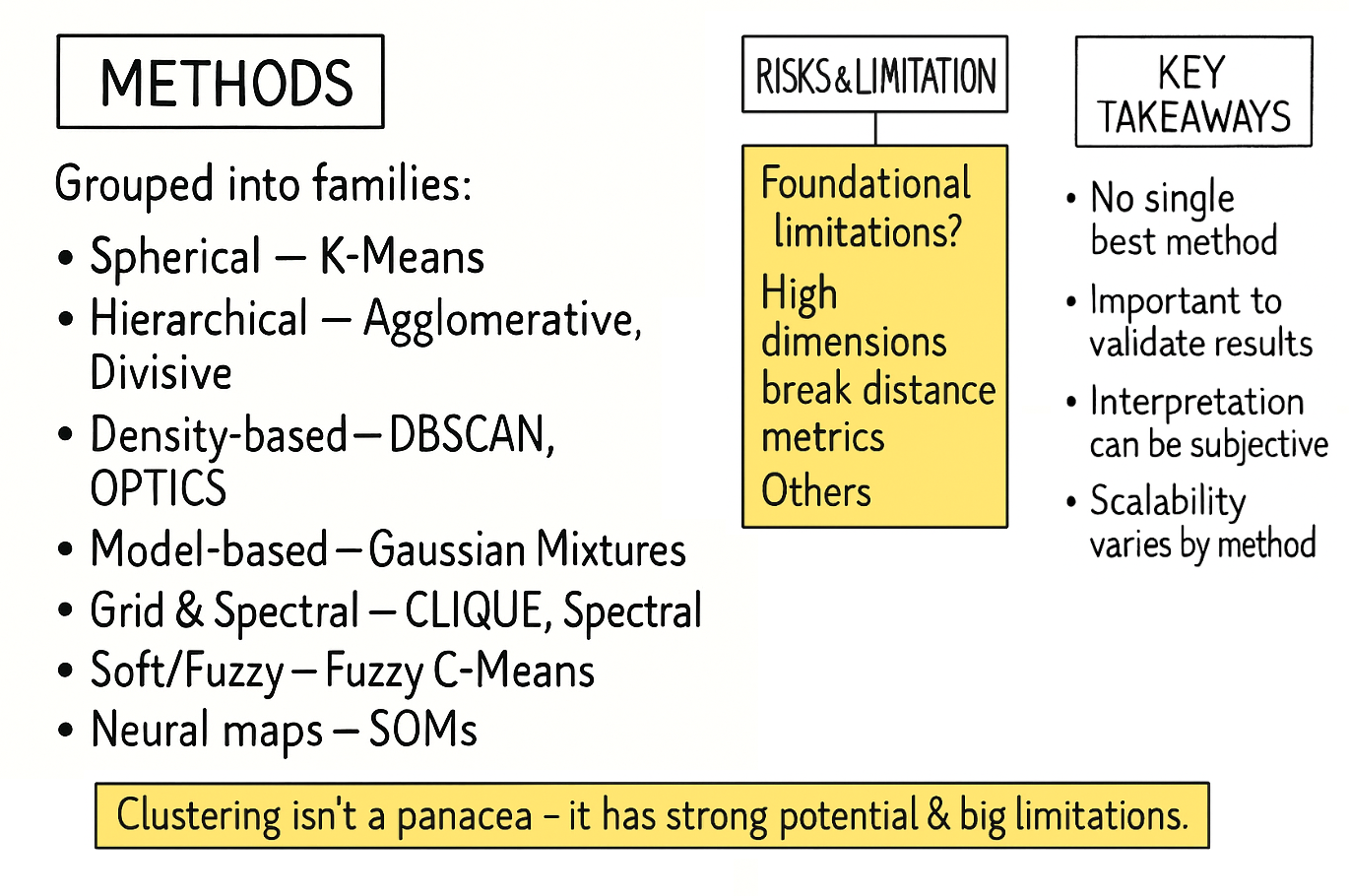

Risk and model limitations.

Spherical approaches.

K-Means.

K-Medoids.

Hierarchical approaches.

Density-based techniques.

DBSCAN.

OPTICS.

Model-based methods.

Grid-based & spectral methods.

CLIQUE.

Spectral clustering.

Fuzzy clustering.

Neural network-based methods.

Introduction

Alright, let’s establish first principles. Before deploying capital into algorithmic strategies, one must confront the paradigm shift that distinguishes durable firms from those erased by structural blind spots: financial markets are not monolithic stochastic processes but non-stationary systems governed by latent regime dynamics. This is not heuristic philosophy—it’s an empirical reality quantified through decades of regime-dependent risk premia and structural break analysis.

Every junior quant, armed with asymptotic theorems and clean data matrices, begins with the same intellectual shit: the assumption that markets exhibit global ergodicity. You construct elegant factor models—momentum, mean reversion, cointegration—optimized on historical time series under the illusion of stationarity. For a finite horizon, these models perform beautifully, their Sharpe ratios inflated by periods of regime persistence. Your backtests become exercises in overfitting, mistaking coincident stability for invariant truth.

This is the danger of deterministic thinking in a probabilistic domain. Your strategy thrives in a local equilibrium, calibrated to a specific regime’s statistical fingerprints—low realized volatility, persistent auto-correlation, stable cross-asset dependencies. But markets are adaptive systems. The moment regime boundaries shift—triggered by macroeconomic thresholds, liquidity phase transitions, or endogenous feedback loops—your model’s assumptions collapse. A momentum strategy tuned to trending markets withers under mean-reverting volatility spikes; a statistical arbitrage framework predicated on tight spreads disintegrates during liquidity droughts. The mathematics remain valid within the regime, but the system itself has mutated.

The epiphany arrives via PnL volatility: markets are better modeled as a mixture of distributions than a single stochastic process. Regime identification becomes the critical challenge. Formally, a regime is a metastable state characterized by distinct joint probability distributions over asset returns, volatility, correlation matrices, and microstructure signals. These states—think the "Complacent Bull" (low vol, positive drift), "Panicked Bear" (high vol, negative skew), and "Sideways Chop" (structural breakouts failing due to range-bound noise)—exhibit path dependency and memory effects.

Legacy models fail because they ignore regime segmentation, akin to applying linear regression to a piecewise function. The solution lies in unsupervised learning: algorithms that detect regime boundaries without labeled data. We are going to start by something simple like clustering techniques. Features might include realized volatility surfaces, cross-asset correlation eigenvalues, orderbook imbalance metrics, or entropy measures of liquidity concentration.

Clustering isn’t a panacea. It demands rigorous validation via silhouette scores, gap statistics, or out-of-sample stability tests. More critically, it requires domain-specific feature engineering to capture regime-defining characteristics. Seasoned practitioners know that regime detection is inherently recursive: today’s clusters inform tomorrow’s risk models, which refine the features used to identify the next regime shift.

If you are curious, check this:

There are no universal solutions, only frameworks for dynamic adaptation. The algorithms we’ll explore are not tools but lenses: each revealing partial truths about the market’s ever-shifting anatomy. Your survival hinges on switching these lenses faster than the system changes its shape. Let’s begin.

Risk and model limitations

Clustering is an essential technique for exploratory data analysis, allowing us to uncover latent structures in unlabeled data. However, it is crucial to recognize that a clustering algorithm is not an oracle that reveals pre-ordained truths. It is a mathematical procedure that optimizes a specific objective, and its results are highly sensitive to initial assumptions, algorithmic choices, and human interpretation. Understanding its limitations is the key to using it effectively.

The risks can be broadly categorized into three categories:

Foundational risks → Flaws in the premise:

These risks arise before any algorithm is run. They are fundamental to how the problem is framed.

The entire output of a clustering analysis hinges on the definition of "similarity" or "distance." This choice is made based on assumptions. An algorithm has no inherent understanding of context; it simply processes the mathematical representation of the features you provide. Choosing irrelevant or poorly scaled features will lead to mathematically valid but practically meaningless clusters.

As the number of features used to describe each data point increases, the concept of distance becomes less meaningful. In a high-dimensional space, the distance between any two points can appear to be almost equal. This makes it incredibly difficult for algorithms that rely on distance metrics—like K-Means or DBSCAN—to differentiate between a point's "close" and "distant" neighbors. The algorithm may find patterns, but these are often just artifacts of the vast, sparse space, not genuine structures in the data.

Price is not static, it evolves. A clustering model built on data from last year may be a poor representation of the current reality. This "concept drift" means that clusters are not permanent structures but transient states, and models must be regularly re-evaluated and retrained to remain relevant.

Algorithmic risks → Bias of the tools:

Every clustering algorithm has an intrinsic bias regarding the types of structures it is designed to find. Using the wrong tool for the data's underlying geometry is a common mistake.

Different algorithms are biased towards finding clusters of specific shapes. K-Means, for instance, will always try to find spherical, roughly equal-sized clusters because it works by minimizing variance around a central point. If the true clusters in your data are long and thin, or intertwined like crescent moons, K-Means will fail, incorrectly splitting them apart or merging them. DBSCAN can find arbitrary shapes but assumes all meaningful clusters have a similar density, which may not be the case. The algorithm will always return an answer, but that answer will be distorted if the data's true shape doesn't match the algorithm's bias.

Most clustering algorithms require the quant to set crucial parameters beforehand. The most famous is the number of clusters,

K, in K-Means. How do you know the correct number of clusters in advance? While methods exist to guide this choice—like the Elbow method—they are often ambiguous. Other algorithms are sensitive to different parameters, like the neighborhood radius—eps—in DBSCAN. A small change in these initial settings can lead to vastly different clustering outcomes, making the results seem arbitrary and unstable if the parameters cannot be robustly justified.Some algorithms are highly sensitive to outliers or noise. Since methods like K-Means attempt to assign every single point to a cluster, a few extreme outliers can significantly skew the position of a cluster's center, thereby corrupting the entire group. While some algorithms like DBSCAN have an explicit notion of "noise," those that don't can produce misleading results.

Interpretation risks → Fallacies in the conclusion:

Once the algorithm produces an output, the final and most critical set of risks emerges from human interpretation.

A clustering algorithm will always find clusters, even in perfectly random data. The human tendency is to seek patterns and assign narratives to these groupings. Just because you find a cluster and can describe its members' common characteristics does not mean the cluster represents a meaningful, underlying phenomenon. It might simply be a statistical coincidence. Attributing causality or deep significance to a mathematically derived group without external validation is a dangerous leap of faith.

It is easy to create a clustering model that perfectly partitions the data you used to build it. However, the true test of a model is how well it generalizes to new, unseen data. A model that is too complex or too finely tuned to the initial dataset has likely overfit the noise and random quirks present in that sample. When new data arrives, this overfit model will fail to assign it to the correct clusters, making it useless for any predictive or real-time application.

The boundaries between clusters are often fuzzy. A data point near a boundary can be re-assigned to a different cluster if the data is slightly perturbed or the model is re-run. This instability can make it difficult to rely on the classification for any critical downstream task. Furthermore, while we can mathematically describe a cluster by the average values of its features, this does not always translate to a simple, human-understandable concept.

The devil was in the execution. Clustering promised to be our compass, yet its limitations emerged like landmines in the path of progress. Further we will see how to avoid them.

Spherical approaches

My first instinct was to start simple. The most intuitive idea of clustering is simply to partition the data into a pre-specified number of groups, K. This leads us straight to the workhorse of clustering: K-Means.

K-Means

The concept is beautifully straightforward. Imagine you want to group all the trading days from the last five years into three regimes (K=3): "Bullish," "Bearish," and "Neutral." K-Means tries to find the best three central points—called centroids—to represent these regimes.

The algorithm is iterative:

Initialization: First, it randomly places K centroids in your data space. In our case, imagine a 2D plot where the X-axis is the daily return of the S&P 500 and the Y-axis is the daily change in the VIX—volatility index. We'd just plop three points down randomly on this chart.

Assignment step: It goes through every single trading day and assigns it to the nearest centroid. The nearness is typically measured by standard Euclidean distance.

Update step: After assigning all the days, it recalculates the position of each centroid to be the mean—the average—of all the data points assigned to it.

Repeat: It repeats the assignment and update steps until the centroids stop moving, meaning a stable solution has been found.

The objective function that K-Means tries to minimize is the sum of squared distances from each data point xi to its assigned cluster's centroid μj. This is called the within-cluster sum of squares.

Where Cj is the set of all points in cluster j.

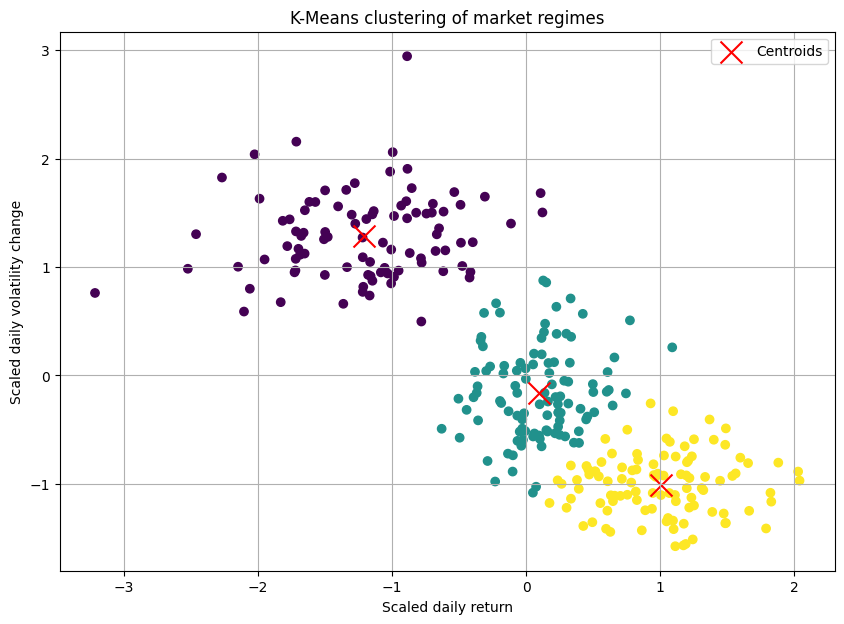

Let's simulate some market data. We'll create three distinct regimes:

Positive returns, low volatility change—bull quiet.

Negative returns, high volatility change—bear volatile.

Near-zero returns, moderate volatility change—sideways chop.

import numpy as np

from sklearn.cluster import KMeans

from sklearn.preprocessing import StandardScaler

# --- Generate synthetic market data ---

# We create data representing daily (Return, Volatility Change)

np.random.seed(42)

# Regime 1: Bull Quiet

bull_quiet = np.random.randn(100, 2) * [0.5, 0.5] + [1.0, -1.0]

# Regime 2: Bear Volatile

bear_volatile = np.random.randn(100, 2) * [0.6, 0.8] + [-1.2, 3.0]

# Regime 3: Sideways Chop

sideways_chop = np.random.randn(100, 2) * [0.3, 0.7] + [0.1, 0.5]

market_data = np.vstack([bull_quiet, bear_volatile, sideways_chop])

# --- Preprocessing ---

# It's crucial to scale data for distance-based algorithms like K-Means

scaler = StandardScaler()

scaled_data = scaler.fit_transform(market_data)

# --- Apply K-Means ---

kmeans = KMeans(n_clusters=3, random_state=42, n_init='auto')

clusters = kmeans.fit_predict(scaled_data)

centroids = kmeans.cluster_centers_The resulting plot would show our three blobs of data points, each colored differently, with a red 'X' marking the calculated center of each cluster. It looked beatufully artificial! Okay, we had successfully partitioned our market days.

Strengths:

K-Means is computationally efficient, making it suitable for large datasets.

Its logic is intuitive, making it a great starting point.

Pitfalls and our "oh, crap" moment:

The initial success quickly faded when we applied it to more complex data. The pitfalls became glaringly obvious.

We have to specify the number of clusters, K, beforehand. How do we know if there are 3, 4, or 10 market regimes? Choosing the wrong K leads to nonsensical groupings. We can use methods like the "Elbow Method," but often the "elbow" i ambiguous, leaving the choice subjective.

The initial random placement of centroids can lead to different final clusters. Running the algorithm multiple times with different random starts is a must.

K-Means implicitly assumes that clusters are spherical and evenly sized—this is a killer. Financial data is rarely so well-behaved. What if a "panic" regime is a long, thin, oddly shaped cluster? K-Means would fail spectacularly to capture it. It draws a circle where it should be drawing a snake.

A single extreme outlier event—like news—could drag a centroid way off course, corrupting an entire cluster.

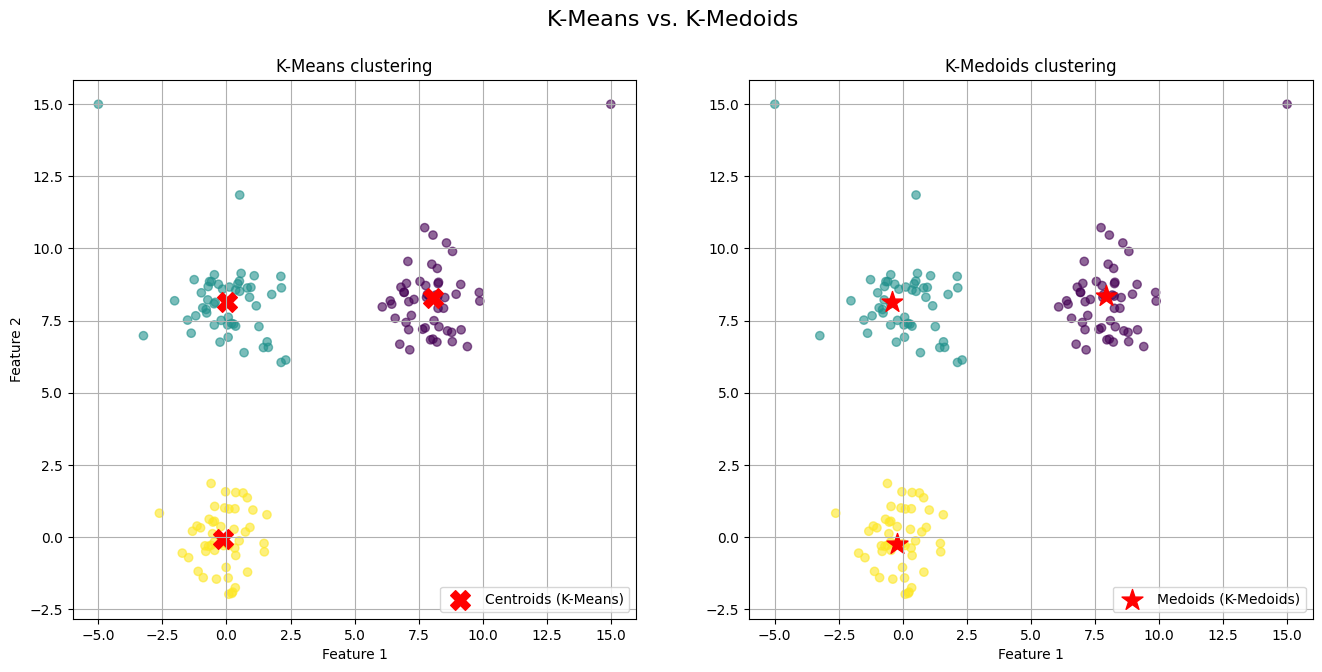

To combat the outlier problem, we briefly experimented with K-Medoids—PAM - Partitioning Around Medoids. Instead of using the mean which is sensitive to outliers as the center, K-Medoids uses a medoid—an actual data point from the cluster—as the center.

K-Medoids

From a methodological standpoint, K-Medoids is a direct and robust alternative to K-Means. The primary limitation of K-Means stems from its use of the centroid—the mean of the points in a cluster—as the cluster prototype. The mean is a statistical measure that is notoriously sensitive to outliers. A single, aberrant data point can significantly skew the centroid, pulling it away from what we would intuitively consider the cluster's "center."

K-Medoids addresses this deficiency by a simple but key change: instead of a centroid, it uses a medoid. A medoid is constrained to be an actual data point from the dataset. It is defined as the point within a cluster that has the minimum average dissimilarity to all other points in that same cluster. By constraining the center to be an existing point, the algorithm becomes substantially more robust to the influence of extreme outliers and noise.

The most common algorithm for K-Medoids is PAM—Partitioning Around Medoids. Its objective is to minimize the sum of dissimilarities between the points and their respective cluster's medoid. However, it is much more computationally expensive and still suffered from the "K" and shape assumption problems. Our simple partitioning approach was too rigid for the fluid, strangely shaped reality of the market.

The algorithmic procedure PAM can be summarized as follows:

Initialization: Select K points from the dataset X to serve as the initial medoids.

Assignment: Assign each data point to the cluster corresponding to the nearest medoid. The total cost is the sum of the distances of all points to their medoid.

Update: For each cluster, consider swapping its medoid with a non-medoid point from the same cluster. Calculate the change in the total cost for each potential swap. If a swap would reduce the total cost, perform the swap that yields the greatest cost reduction.

Iteration: Repeat steps 2 and 3 until the medoids no longer change, indicating that the algorithm has converged to a local minimum.

The objective function of the K-Medoids algorithm is to find the set of medoids M and the corresponding partition C that minimizes the total within-cluster dissimilarity. This is expressed as:

Let's dissect this formula:

The inner summation calculates the sum of distances from every point xj within a single cluster Ci to that cluster's medoid, mi. This value represents the "cost" or total dissimilarity for that specific cluster.

The outer summation sums up the costs of all k individual clusters. The result is the total cost for the entire clustering configuration defined by the set of medoids M.

The minimization indicates that we are searching for the specific set of k medoids, chosen from the original dataset X, that results in the minimum possible value for this total cost function.

In essence, the formula seeks the most centrally located data points to act as exemplars for each cluster, where "centrality" is defined by the minimization of the sum of distances to all other points in that cluster.

Unlike K-Means, which minimizes the sum of squared Euclidean distances to an artificial centroid, the K-Medoids objective function typically uses the sum of absolute distances or other dissimilarity measures. This lack of squaring is a key reason for its increased robustness to outliers, as extreme distances are not amplified quadratically.

Let’s code it:

import numpy as np

from sklearn.cluster import KMeans

from sklearn.metrics import pairwise_distances

# --- Simplified K-Medoids (PAM) implementation ---

def kmedoids_simple(X, n_clusters, max_iter=100):

"""

A simplified implementation of the K-Medoids (PAM) algorithm.

Args:

X (np.ndarray): The input data, shape (n_samples, n_features).

n_clusters (int): The number of clusters (K).

max_iter (int): The maximum number of iterations.

Returns:

tuple: A tuple containing:

- labels (np.ndarray): Cluster labels for each point.

- medoid_centers (np.ndarray): The final medoid points.

"""

# 1. Initialize: Randomly select K data points as the initial medoids.

n_samples = X.shape[0]

initial_medoid_indices = np.random.choice(n_samples, n_clusters, replace=False)

medoids = X[initial_medoid_indices, :]

# Placeholder for the previous medoids to check for convergence

old_medoids = np.zeros_like(medoids)

for i in range(max_iter):

# 2. Assignment Step: Assign each point to the nearest medoid.

distances = pairwise_distances(X, medoids, metric='euclidean')

labels = np.argmin(distances, axis=1)

# 3. Update Step: For each cluster, find the point that minimizes the

# sum of distances within that cluster (the new medoid).

new_medoids = np.zeros_like(medoids)

for k in range(n_clusters):

# Get all points belonging to the current cluster

cluster_points = X[labels == k]

# If a cluster is empty, which can happen with bad initializations,

# re-assign a random point to it.

if len(cluster_points) == 0:

new_medoids[k, :] = X[np.random.choice(n_samples, 1), :]

continue

# Calculate the sum of distances from each point in the cluster

# to all other points in the same cluster.

intra_cluster_distances = pairwise_distances(cluster_points, metric='euclidean')

sum_of_distances = np.sum(intra_cluster_distances, axis=1)

# The point with the minimum sum of distances is the new medoid.

new_medoid_index_in_cluster = np.argmin(sum_of_distances)

new_medoids[k, :] = cluster_points[new_medoid_index_in_cluster, :]

# Check for convergence: If the medoids have not changed, stop.

if np.all(new_medoids == medoids):

break

medoids = new_medoids

return labels, medoids

# --- 1. Generate synthetic data ---

# This section is identical to your original code.

np.random.seed(42)

# Create three distinct clusters

X1 = np.random.randn(50, 2) + np.array([0, 0])

X2 = np.random.randn(50, 2) + np.array([8, 8])

X3 = np.random.randn(50, 2) + np.array([0, 8])

# Add two significant outliers

outliers = np.array([

[15, 15], # Outlier affecting cluster X2

[-5, 15] # Outlier affecting cluster X3

])

# Combine into a single dataset

X = np.vstack([X1, X2, X3, outliers])

# --- 2. Apply clustering algorithms ---

# K-Means Algorithm (unchanged)

kmeans = KMeans(n_clusters=3, random_state=42, n_init=10)

kmeans_labels = kmeans.fit_predict(X)

kmeans_centers = kmeans.cluster_centers_

# K-Medoids algorithm (using our self-contained function)

kmedoids_labels, kmedoids_centers = kmedoids_simple(X, n_clusters=3)The generated visualization provides a clear and direct comparison of the two algorithms' behaviors.

On the left plot (K-Means) we observe the red 'X' markers representing the centroids. Notice the centroids for the top-right and top-left clusters. They have been noticeably "pulled" away from the dense core of their respective clusters and shifted towards the outliers we introduced. The centroid is no longer a faithful representative of its cluster's central tendency; it is a mathematical average biased by extreme values.

On the right plot (K-Medoids) we observe the red star markers representing the medoids. The medoids for all three clusters are positioned squarely within the dense, central region of their assigned points. Because a medoid must be one of the actual data points, it cannot be an artificial point in space like a centroid. The algorithm selects the most "central" existing point, effectively ignoring the pull of distant outliers.

Now that we know the main strength, but pitfalls continue. It still suffers from the "K" and shape assumption problems.

Hierarchical approaches

Our failure with K-Means leads to a shift in perspective. Maybe market regimes aren't like separate, disconnected islands. Maybe they're related, nested within each other, like a family tree. For instance, a broad high volatility environment could contain sub-regimes like high-vol trend and high-vol chop. This line of thinking leads us to hierarchical clustering.

This method doesn't produce a single flat partition of the data but rather a hierarchy of clusters. There are two main approaches:

Agglomerative (bottom-up): This is the more common approach. It starts with every single data point in its own cluster. Then, in each step, it merges the two closest clusters until only one giant cluster—containing all the data—remains.

Divisive (top-down): It starts with all data points in a single cluster and recursively splits it into smaller clusters.

The real magic of hierarchical clustering is the dendrogram. It's a tree diagram that visualizes the entire hierarchy of merges—or splits. You can then "cut" the dendrogram at a certain height to get a specific number of clusters, but its real power is in showing the relationships.

The key component is the linkage criterion, which defines how to measure the distance between two clusters (not just two points). Common linkage criteria include:

Single linkage: Distance is the minimum distance between any two points in the two clusters. It can find long, thin clusters but is sensitive to noise.

\(d_{\text{single}}(C_A, C_B) = \min_{a \in C_A, b \in C_B} d(a, b)\)Complete linkage: Distance is the maximum distance between any two points. It produces more compact clusters.

\(d_{\text{complete}}(C_A, C_B) = \max_{a \in C_A, b \in C_B} d(a, b)\)Average linkage: Distance is the average distance between all pairs of points. It's a balance between the two.

\(d_{\text{average}}(C_A, C_B) = \frac{1}{|C_A| \cdot |C_B|} \sum_{a \in C_A} \sum_{b \in C_B} d(a, b)\)Ward's linkage: This is often the default. It merges the two clusters that result in the minimum increase in the total within-cluster variance.

\(\text{WCSS}(C_k) = \sum_{\boldsymbol{x}_i \in C_k} \|\boldsymbol{x}_i - \boldsymbol{\mu}_k\|^2\)

Besides the choice of the distance function d(⋅,⋅) is a critical modeling decision:

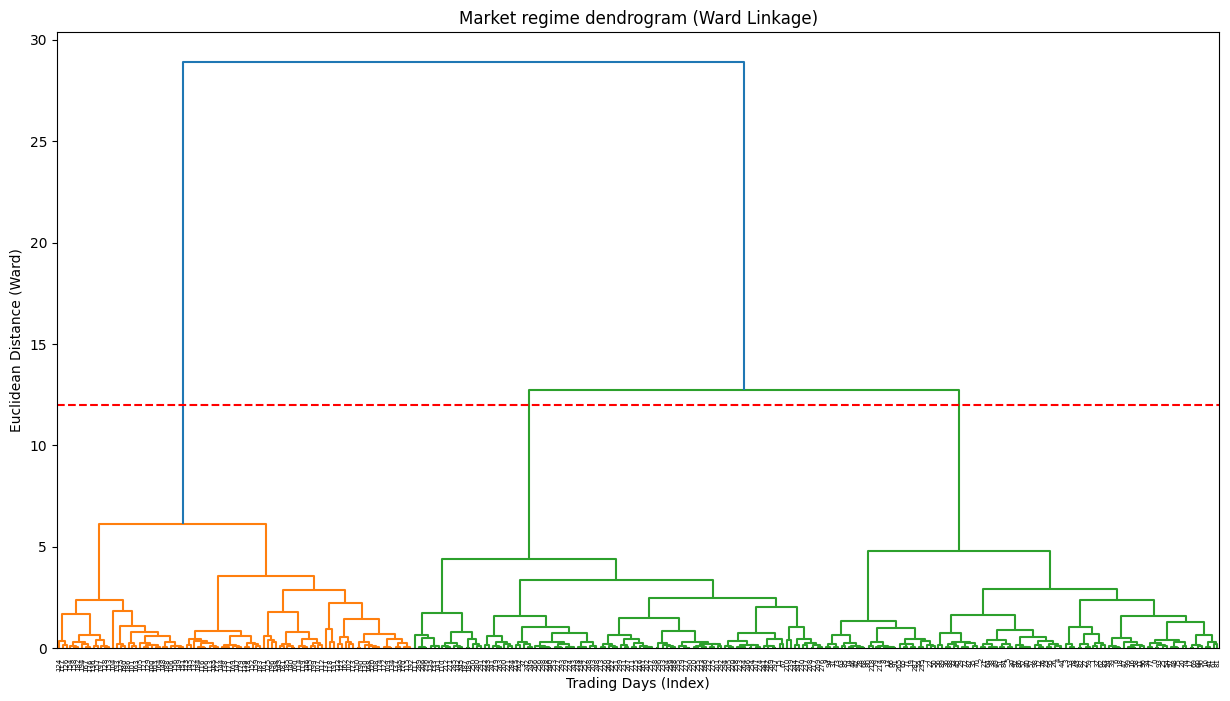

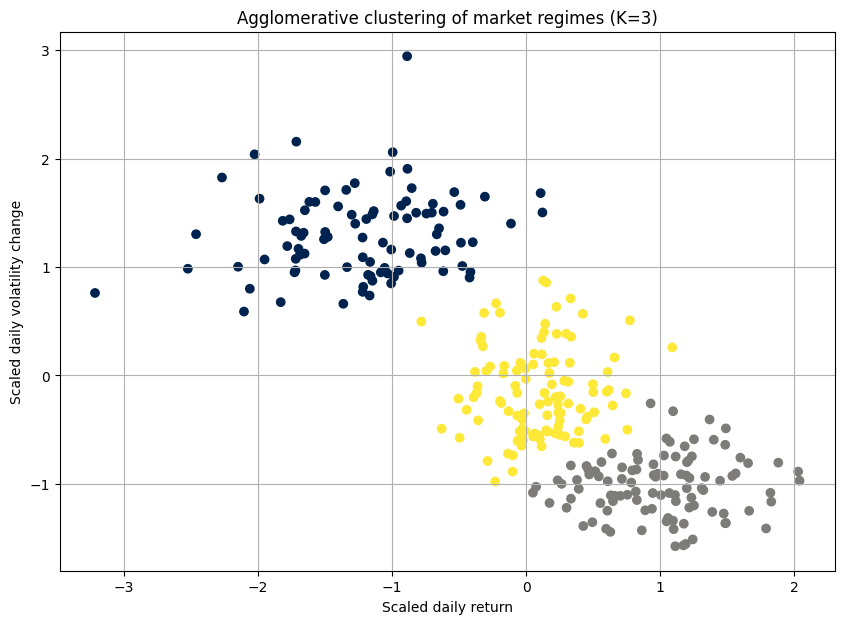

Let's apply this to the same market data:

import scipy.cluster.hierarchy as sch

from sklearn.cluster import AgglomerativeClustering

# Using Ward's linkage method

dendrogram = sch.dendrogram(sch.linkage(scaled_data, method='ward'))

# --- Perform agglomerative clustering ---

# Based on the dendrogram, we choose 3 clusters

agg_cluster = AgglomerativeClustering(n_clusters=3, metric='euclidean', linkage='ward')

clusters = agg_cluster.fit_predict(scaled_data)The dendrogram is the key output here. It's a beautiful, complex tree. The y-axis represents the distance at which clusters were merged. By drawing a horizontal line, we can select a number of clusters. For example, where our red dashed line cuts the tree, it crosses three vertical lines, suggesting that three is a reasonable number of clusters.

Strengths:

The number of clusters is not required beforehand. The dendrogram provides a rich visualization of possibilities.

It doesn't just partition; it shows how clusters relate to one another, which can be very insightful for understanding market dynamics.

It can work with any distance metric, not just Euclidean.

Pitfalls and a new frustrations:

Hierarchical clustering felt like a more honest approach. But it wasn't a silver bullet.

The basic agglomerative algorithm has a time complexity of at least O(N2logN), where N is the number of data points. For our dataset of thousands of trading days, it was slow.

While we don't have to specify K upfront, we still have to decide where to "cut" the dendrogram. This decision remains subjective and can dramatically change the interpretation.

Once a merge—in agglomerative—or a split—in divisive—is made, it's final. An early mistake can't be corrected later in the process.

For a high-dimensional dataset, the 2D representation of the dendrogram can be misleading. The distances it portrays might not capture the true, complex relationships in the data.

I have found a way to map the market's family tree, but the map was hard to read and computationally expensive to draw.

Density-based techniques

Until here we have been guided by a core assumption: everything must belong to a cluster:

K-Means forces every day into a regime. Hierarchical clustering assigns every day a place in the family tree. But what if that was wrong? What if some days aren't part of any regime? What if they are just... noise? Outliers? One-off events?

This leads us to a perspective shift, embodied by DBSCAN—Density-Based Spatial Clustering of Applications with Noise.

DBSCAN's philosophy is pretty interesting. It doesn't partition the entire dataset. Instead, it finds dense regions of data points and calls them clusters. Anything in a low-density region is classified as noise or an outlier. This was immediately appealing. We could use it to separate normal market behavior from anomalous crisis days.

DBSCAN has a simple, elegant logic based on two parameters:

eps(ϵ): A radius. This defines the neighborhood around a data point.min_samples: The minimum number of data points required to be within a point'sepsneighborhood for it to be considered a core point.

The algorithm works as follows:

Initialization: It picks an arbitrary starting point.

Assignment step: If this point is a core point—it has at least

min_samplesneighbors within radiuseps—it starts a new cluster. It then recursively finds all density-connected points —other core points or points on the edge of the cluster—and adds them to this cluster.Update step: If the starting point is not a core point, it's temporarily labeled as noise. It might be picked up later as an "edge" point of another cluster.

Repeat: It continues this process until all points have been visited.

A point p is a core point if its ϵ-neighborhood, Nϵ(p), contains at least min_samples points.

Where:

A point q is directly reachable from p if q∈Nϵϵ(p) and p is a core point. This chain of reachability defines the clusters.

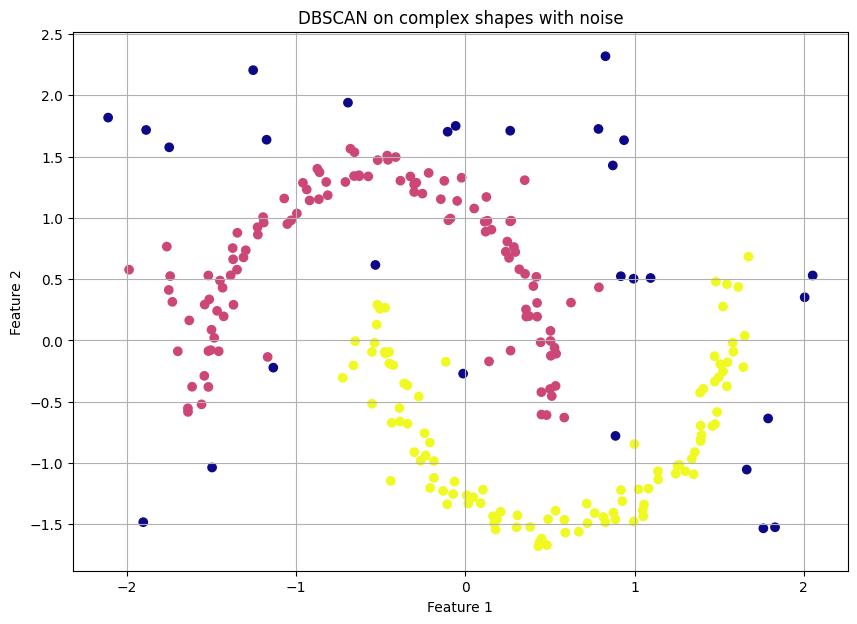

Let's create a dataset where the clusters are weirdly shaped and include some random noise points, something K-Means would fail at.

import numpy as np

from sklearn.cluster import DBSCAN

from sklearn.datasets import make_moons

# --- Generate synthetic data ---

# make_moons creates a dataset that K-Means would fail on. We would avoid market data for the sake fo this example.

moons_data, y = make_moons(n_samples=200, noise=0.05, random_state=42)

# Add some random noise points

noise = np.random.rand(50, 2) * [4, 2] + [-1.5, -0.5]

complex_data = np.vstack([moons_data, noise])

scaled_complex_data = StandardScaler().fit_transform(complex_data)

# --- Apply DBSCAN ---

# These parameters need tuning.

dbscan = DBSCAN(eps=0.3, min_samples=5)

clusters = dbscan.fit_predict(scaled_complex_data)The resulting plot is a revelation. DBSCAN perfectly identifies the two crescent moon shapes as distinct clusters—e.g., in yellow and purple—and correctly labels all the scattered random points as noise—e.g., in dark blue, label -1. This is something neither K-Means nor Hierarchical clustering could have done.

Strengths:

The algorithm finds the number of clusters on its own.

It is not limited to spherical clusters. It can find complex, non-linear shapes.

It has a built-in framework for classifying noise.

Pitfalls and the tuning nightmare:

DBSCAN felt like a massive leap forward. We could finally isolate true anomalies. But it introduced its own set of problems.

The performance of DBSCAN is critically dependent on the choice of

epsandmin_samples. A slightly wrongepscould result in one giant cluster or no clusters at all. Tuning these parameters for high-dimensional financial data was a dark art.DBSCAN assumes that all meaningful clusters have a similar density. It struggles if market regimes have different densities. For example, a panic regime might be extremely dense—many days with similar crash-like behavior—while a low-volatility drift might be very sparse. DBSCAN would find the panic but might label the drift as noise.

To address the varying density issue, we looked at its successor, OPTICS (Ordering Points To Identify the Clustering Structure).

OPTICS

If DBSCAN asks the question: Is this region dense for a specific radius eps? then OPTICS asks the far more profound question: What is the density structure of the data across all possible radii?

OPTICS's philosophy is a masterstroke of generalization. It recognizes that density is not a universal constant but a local property. Instead of producing discrete cluster labels directly, it produces an ordered list of points based on their density relationships. This ordering contains all the information needed to extract clusters for any eps you might have chosen, without having to re-run the algorithm.

To achieve this, OPTICS introduces two new, crucial concepts:

Core distance: For a given

min_samples, the core distance of a pointpis the distance to itsmin_samples-th nearest neighbor. If the point doesn't havemin_samplesneighbors, its core distance is undefined. This is the minimum radiusepsthat would make pointpa core point:\(\text{core_dist}(p) = \text{distance to the min_samples-th neighbor in } N_{\text{min_samples}}(p) \)Reachability distance: The reachability distance of a point

qwith respect to another pointpis the actual distance between them, but no smaller than the core distance ofp. Formally:\(\text{reachability_dist}(q, p) = \max(\text{core_dist}(p), \text{dist}(p, q))\)This is the key insight. It smooths out the density landscape. Points within a dense cluster will have low reachability distances, while points in sparse regions will have high ones.

The algorithm explores the data, always moving to the point with the lowest reachability distance from the set of points it has already processed, creating an ordered traversal of the dataset.

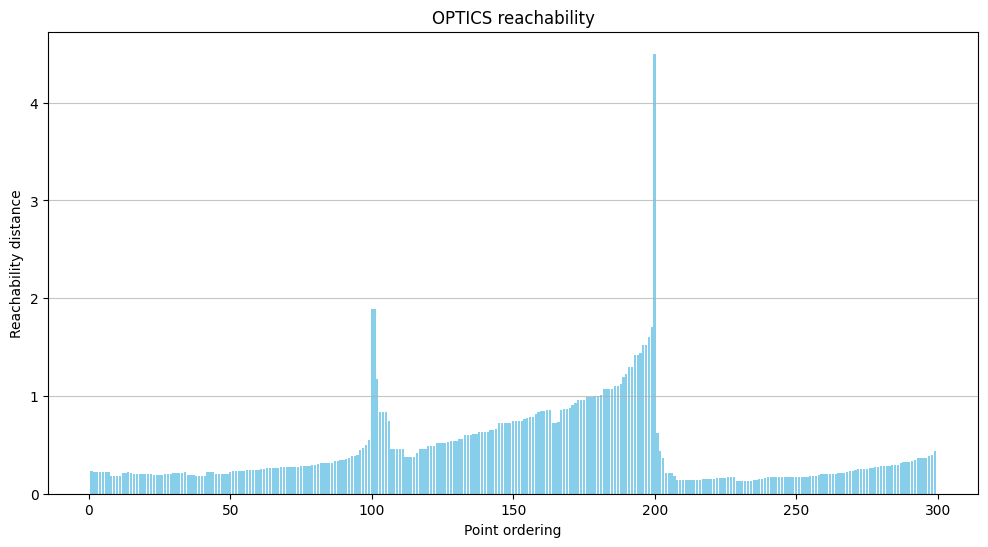

The primary output is not a scatter plot of colored clusters, but a reachability plot. This plot is the signature of OPTICS.

The x-axis is the ordered sequence of points generated by the algorithm.

The y-axis is the reachability distance for each point in that sequence.

You read this plot like a topographical map of density. Valleys represent dense clusters. The points at the bottom of a valley are the core points of a dense region, and they all have low reachability distances to one another. Peaks represent sparse areas or noise. A point with a high reachability distance is far from its neighbors. The depth and width of the valleys tell you about the density and size of the clusters.

Let's generate a dataset that would fool DBSCAN—one with clusters of varying densities. Then we'll see how OPTICS handles it.

import numpy as np

import matplotlib.pyplot as plt

from sklearn.cluster import OPTICS, cluster_optics_dbscan

from sklearn.datasets import make_blobs

# --- 1. Generate synthetic data with varying densities ---

# We create three blobs, two dense and one sparse.

np.random.seed(42)

# A dense cluster

X1, y1 = make_blobs(n_samples=100, centers=[[1, 2]], cluster_std=0.5)

# Another dense cluster

X2, y2 = make_blobs(n_samples=100, centers=[[6, 6]], cluster_std=0.4)

# A much sparser cluster

X3, y3 = make_blobs(n_samples=100, centers=[[-4, 5]], cluster_std=1.5)

X = np.vstack((X1, X2, X3))

# --- 2. Apply OPTICS ---

# We only need to set min_samples. OPTICS will figure out the rest.

# xi is a parameter to help with cluster extraction, determining steepness.

optics = OPTICS(min_samples=9, xi=0.05, min_cluster_size=0.1)

optics.fit(X)

# --- 3. Extract clusters ---

# We can now extract DBSCAN-like clusters from the OPTICS output

# for a given 'eps'. Let's try two different values to see the effect.

# A smaller eps might only find the densest clusters

labels1 = cluster_optics_dbscan(

reachability=optics.reachability_,

core_distances=optics.core_distances_,

ordering=optics.ordering_,

eps=0.7 # Smaller radius

)

# A larger eps can find the sparse clusters too

labels2 = cluster_optics_dbscan(

reachability=optics.reachability_,

core_distances=optics.core_distances_,

ordering=optics.ordering_,

eps=2.0 # Larger radius

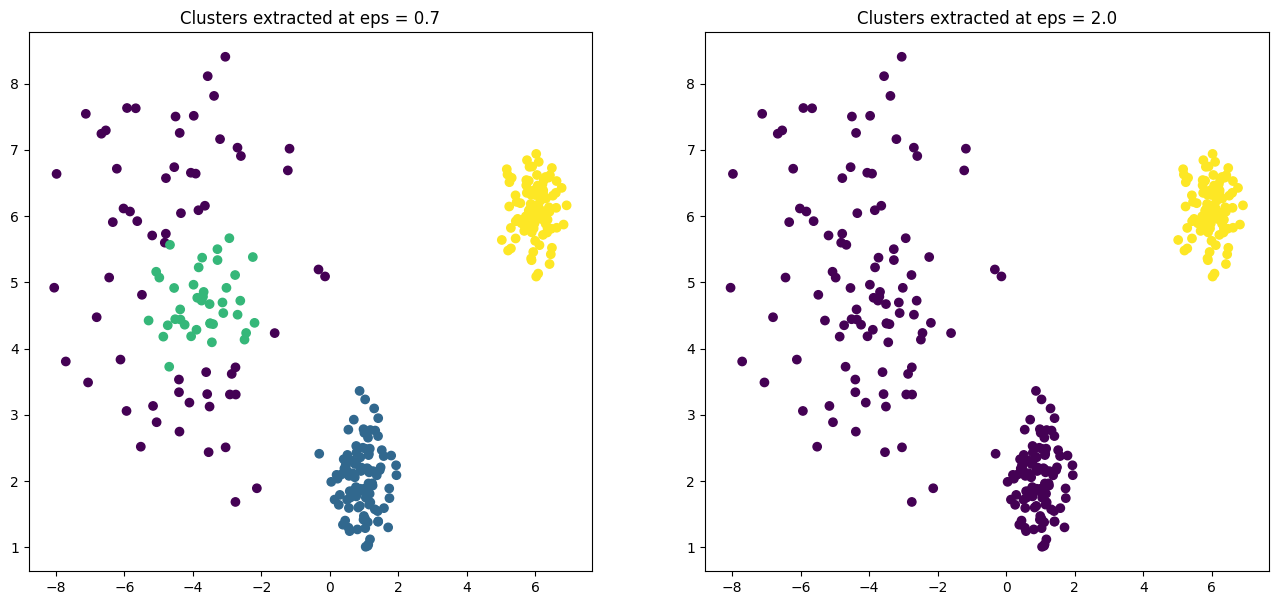

)The reachability plot is the crucial output. You can clearly see three distinct valleys, two of which are much deeper (lower reachability distance) than the third, corresponding perfectly to our two dense and one sparse cluster.

The subsequent scatter plots demonstrate the power of this approach. By setting a low eps (0.7), we extract only the two very dense clusters. By increasing eps to 2.0, we capture all three clusters. We did this without re-running the core algorithm, merely by post-processing its rich output.

Strengths:

It can identify clusters of different densities in a single run.

You no longer need to guess a global

eps. The reachability plot provides a multi-scale view of the cluster structure.It is still robust to outliers—which appear as peaks in the plot—and can find clusters of arbitrary shape.

Only one pitfall?

It doesn't eliminate all parameters. The choice of min_samples is still fundamental and has a significant effect on the output. It defines the scale of density you are looking for.

Model-based methods

Up to this point, our clustering methods provided hard assignments. A trading day was either in Cluster A or Cluster B. This black-and-white view felt unnatural. Markets are driven by probabilities, not certainties. A given day might have characteristics of both a trending and a reverting market.

This need to embrace ambiguity leads us to model-based clustering, specifically Gaussian Mixture Models.

A GMM assumes that the data points are generated from a mixture of a finite number of Gaussian distributions—bell curves—with unknown parameters. In our context, each Gaussian distribution represents a market regime. Unlike K-Means, which assigns a point to a single cluster, GMM provides a probability that a point belongs to each of the clusters.

Instead of finding centroids, GMM tries to find the parameters of the Gaussian distributions that best explain the data. For each cluster, it estimates:

Mean (μ): The center of the cluster.

Covariance (Σ): The shape of the cluster. This is a huge advantage over K-Means. A covariance matrix can describe clusters that are elliptical and rotated, not just spherical.

Mixing coefficient (π): The weight or prior probability of that cluster.

The algorithm typically used to fit these parameters is the Expectation-Maximization algorithm:

Initialization: Initialize the means, covariances, and mixing coefficients—e.g., using the output of K-Means.

Expectation step: For each data point, calculate the probability that it was generated by each of the Gaussian components. This is the soft assignment.

Maximization step: Update the parameters (μ, Σ, π) for each component using the probabilities calculated in the expectation step.

Repeat: Iterate the E and M steps until the model's likelihood of explaining the data converges.

The probability density of a data point x is given by a sum over the K components:

where N(x∣μk,Σk) is the Gaussian probability density function for cluster k.

Let's see how GMM handles elongated, non-spherical clusters that would fool K-Means:

import numpy as np

from sklearn.mixture import GaussianMixture

from matplotlib.patches import Ellipse

# --- Generate elongated, rotated data ---

# This section is identical to your original code.

np.random.seed(42)

C1 = np.array([[0.0, -0.7], [3.5, 0.7]])

X1 = np.dot(np.random.randn(100, 2), C1)

X2 = np.dot(np.random.randn(100, 2), np.random.randn(2, 2)) + [5, 5]

gmm_data = np.vstack([X1, X2])

# --- Apply Gaussian mixture model ---

# This section is identical to your original code.

gmm = GaussianMixture(n_components=2, random_state=42)

gmm.fit(gmm_data)

clusters = gmm.predict(gmm_data)

# Get the probabilistic assignments

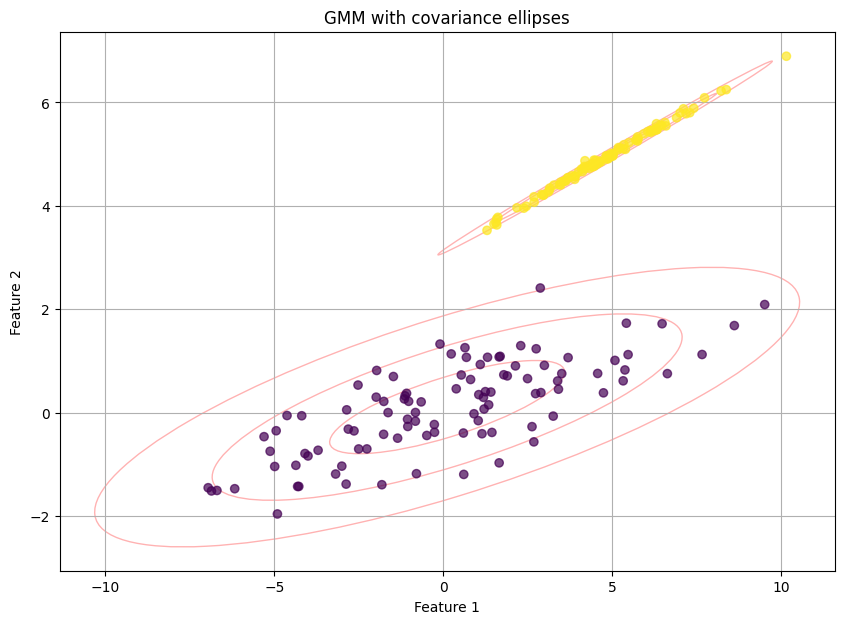

probs = gmm.predict_proba(gmm_data)The plot is pretty cool righ!? Not only are the points colored by their most likely cluster, but the red ellipses show the shape and orientation of the Gaussian distributions the model has learned. This visualizes the non-spherical nature of the clusters. The printed output would show, for example, that point 1 belongs to cluster 0 with 98% probability and cluster 1 with 2% probability—a much richer output than a simple "0".

Strengths:

The use of covariance matrices allows GMM to fit a wide variety of elliptical shapes.

Probabilistic assignments are more realistic for financial data and excellent for risk management. We could, for example, reduce trade size when the model is uncertain—e.g., a 55%/45% split.

Pitfalls and the risk of overfitting:

GMM felt like a panacea but its flexibility is a double-edged sword.

If a Gaussian component collapses onto a few data points, its covariance can become zero, leading to an infinite likelihood. This requires regularization or careful initialization.

The EM algorithm is iterative and can be slow, especially with many components or high-dimensional data.

The core assumption is that regimes are Gaussian-shaped. While more flexible than spheres, what if a regime has a fat tail or is bi-modal? GMM would struggle to model this, potentially underestimating extreme risks.

With enough components, a GMM can perfectly fit almost any dataset, essentially memorizing the data rather than finding true underlying structure. The BIC helps, but the risk of finding phantom clusters is high.

We have a model that could embrace uncertainty, but it also had the power to create a beautifully complex and utterly false picture of the market if we weren't extremely careful.

Grid-based & spectral methods

Our features are piling up. We aren't just looking at returns and volatility anymore. We are adding credit spreads, term structures, sentiment scores, and dozens of other factors. We are deep in the territory of the curse of dimensionality, where our intuitions about distance and density break down completely. Methods that relied on calculating distances in this 50-dimensional space, like K-Means or DBSCAN, are becoming unreliable and computationally intractable. We need methods designed specifically for this high-dimensional shit.

Let’s start with the first one: Grid-based clustering (CLIQUE).

The idea behind CLIQUE—Clustering In QUEst—is brilliant. Instead of trying to find clusters in the full, high-dimensional space, it tries to find dense regions in subspaces —i.e., using only a subset of the features at a time.

The workflow is defined as:

Partition: It divides each dimension—each feature—into a grid of intervals.

Find dense units: It finds dense units in 1-dimensional space—intervals with more data points than a threshold, τ.

Build up dimensions: It iteratively builds up from there. It will try to find dense units in 2-D space by joining dense 1-D units. Then 3-D from 2-D, and so on. This is based on an Apriori-like principle: if a region is dense in k-dimensions, all its projections onto (k-1) dimensions must also be dense.

Form clusters: Finally, it connects the dense k-dimensional units to form clusters.

The big win is that it automatically finds the subspaces in which clusters exist. It might discover that an effective risk-off regime is only visible when you look at the 3-D subspace of: VIX Change, High-Yield Spreads, USD/JPY Exchange Rate. Imagine, it is invisible in the other 47 dimensions.

The math behind this is:

A unit u is dense if the fraction of data points it contains is greater than the threshold τ. The entire CLIQUE algorithm is a procedure to efficiently find maximal sets of connected units that satisfy this condition, using the Apriori principle for pruning the search space.

Let’s code it:

import numpy as np

from itertools import combinations

from collections import defaultdict

def clique_clustering(X, xi, tau):

"""

Performs CLIQUE subspace clustering on a 2D dataset.

Args:

X (np.ndarray): The input data, shape (n_samples, n_features).

This implementation is simplified for 2D data.

xi (int): The number of intervals (bins) to partition each dimension into.

tau (float): The density threshold for a unit to be considered dense.

(e.g., 0.1 for 10% density).

Returns:

dict: A dictionary where keys are cluster IDs and values are the indices

of the data points belonging to that cluster.

"""

if X.shape[1] != 2:

raise ValueError("This simplified implementation only supports 2D data.")

n_samples = X.shape[0]

# --- 1. Discretize the data space into a grid ---

# Find the min/max for each dimension to define the grid boundaries

min_vals = np.min(X, axis=0)

max_vals = np.max(X, axis=0)

# Calculate the size of each interval (bin) in each dimension

unit_sizes = (max_vals - min_vals) / xi

# Assign each data point to its corresponding grid unit index (e.g., (bin_x, bin_y))

# This creates a list of tuples, where each tuple is the grid coordinate for a data point.

discretized_data = np.floor((X - min_vals) / unit_sizes).astype(int)

# Clamp the max value to the last bin

discretized_data = np.minimum(discretized_data, xi - 1)

# --- 2. Find 1-Dimensional Dense Units (Apriori Step 1) ---

dense_units_1d = []

# Check density along dimension 0 (X-axis)

for i in range(xi):

count = np.sum(discretized_data[:, 0] == i)

if (count / n_samples) > tau:

dense_units_1d.append((0, i)) # (dimension, interval_index)

# Check density along dimension 1 (Y-axis)

for j in range(xi):

count = np.sum(discretized_data[:, 1] == j)

if (count / n_samples) > tau:

dense_units_1d.append((1, j))

# --- 3. Find 2-Dimensional Dense Units (Apriori Step 2) ---

dense_units_2d = set()

unit_counts = defaultdict(int)

for point in discretized_data:

# Check if the 1D projections of this 2D unit are dense

is_x_dense = (0, point[0]) in dense_units_1d

is_y_dense = (1, point[1]) in dense_units_1d

# Apriori Pruning: Only count units whose 1D projections are dense

if is_x_dense and is_y_dense:

unit_counts[tuple(point)] += 1

# Identify the 2D units that meet the density threshold

for unit, count in unit_counts.items():

if (count / n_samples) > tau:

dense_units_2d.add(unit)

if not dense_units_2d:

print("No dense 2D units found. Try lowering tau or xi.")

return {}

# --- 4. Identify Clusters by Finding Connected Components ---

clusters = {}

cluster_id = 0

# Keep track of units we've already assigned to a cluster

visited = set()

for unit in dense_units_2d:

if unit not in visited:

# Start a new cluster search (using Breadth-First Search)

cluster_id += 1

clusters[cluster_id] = []

queue = [unit]

visited.add(unit)

while queue:

current_unit = queue.pop(0)

# Find all data points that fall into this dense unit

point_indices = np.where((discretized_data == current_unit).all(axis=1))[0]

clusters[cluster_id].extend(point_indices)

# Check for adjacent dense units (neighbors)

x, y = current_unit

for dx in [-1, 0, 1]:

for dy in [-1, 0, 1]:

if dx == 0 and dy == 0:

continue

neighbor = (x + dx, y + dy)

if neighbor in dense_units_2d and neighbor not in visited:

visited.add(neighbor)

queue.append(neighbor)

return clusters

# --- Example Usage and Visualization with Market Data ---

np.random.seed(42)

# Regime 1: Bull Quiet (Positive returns, low volatility)

bull_quiet = np.random.randn(150, 2) * [0.5, 0.5] + [1.0, -1.0]

# Regime 2: Bear Volatile (Negative returns, high volatility)

bear_volatile = np.random.randn(150, 2) * [0.6, 0.8] + [-1.2, 3.0]

# Regime 3: Sideways Chop (Near-zero returns, moderate volatility)

sideways_chop = np.random.randn(150, 2) * [0.3, 0.7] + [0.1, 0.5]

# Noise/Outlier data

noise = (np.random.rand(50, 2) - 0.5) * 15

data = np.vstack([bull_quiet, bear_volatile, sideways_chop, noise])

# Set CLIQUE parameters

num_intervals = 12 # xi: How fine the grid is

density_threshold = 0.01 # tau: Minimum percentage of points to be a dense unit

# Run the CLIQUE algorithm

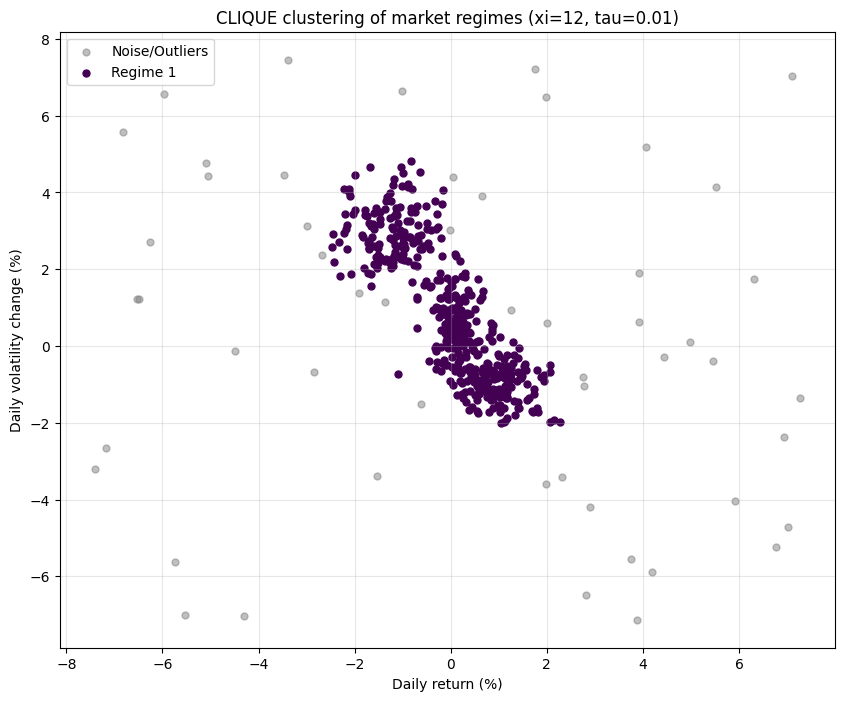

found_clusters = clique_clustering(data, xi=num_intervals, tau=density_threshold)The algorithm did not use distance between points like K-Means. Instead, it laid a 12x12 grid over the plot. It first found dense intervals along the Return axis and the Volatility axis independently. Then, using the Apriori principle, it only searched for 2D grid cells—squares—whose 1D projections were already dense. Finally, it grouped together all adjacent dense squares to form the final, arbitrarily-shaped clusters you see in the plot. The noise points are simply those that landed in grid cells with too few neighbors to meet the 1% density threshold (tau=0.01).

Spectral clustering takes a completely different, graph-based approach. It doesn't care about centroids or density. It thinks about connectivity.

The workflow can be defined as:

Build a similarity graph: It represents the dataset as a graph, where the nodes are the data points and the edges represent the similarity between them—e.g., using a Gaussian kernel. Two points are strongly connected if they are similar.

Create the laplacian matrix: It computes a special matrix from this graph called the Graph Laplacian, L=D−A, where A is the adjacency (similarity) matrix and D is the diagonal degree matrix. The Laplacian holds information about the graph's structure.

Find eigenvectors: It calculates the eigenvectors of the Laplacian. The key insight is that the first few eigenvectors—corresponding to the smallest eigenvalues—contain powerful information about the global structure of the graph. They represent the lowest frequency vibrations of the graph.

Cluster the eigenvectors: It takes these eigenvectors, which form a new, lower-dimensional representation of the data, and runs a simple clustering algorithm like K-Means on them. Because these eigenvectors have unwrapped the complex structure, K-Means can now easily find the clusters.

The magic is that this method can identify highly non-convex, intertwined shapes, like two interlocking spirals, which would fool almost every other algorithm.

Given a graph with vertices V, the objective is to find a partition of the vertices into k disjoint sets {A1,A2,…,Ak} that minimizes the following function:

Let's see Spectral Clustering solve a problem that would be impossible for K-Means or GMM.

import numpy as np

from sklearn.cluster import SpectralClustering

from sklearn.datasets import make_circles

# --- Generate concentric circles data ---

# Here again, for the sake of the example we will create weird geometric data

circles_data, y = make_circles(n_samples=500, factor=0.5, noise=0.05, random_state=42)

# --- Compare K-Means and Spectral Clustering ---

kmeans = KMeans(n_clusters=2, random_state=42, n_init='auto')

kmeans_clusters = kmeans.fit_predict(circles_data)

spectral = SpectralClustering(n_clusters=2, affinity='nearest_neighbors', random_state=42)

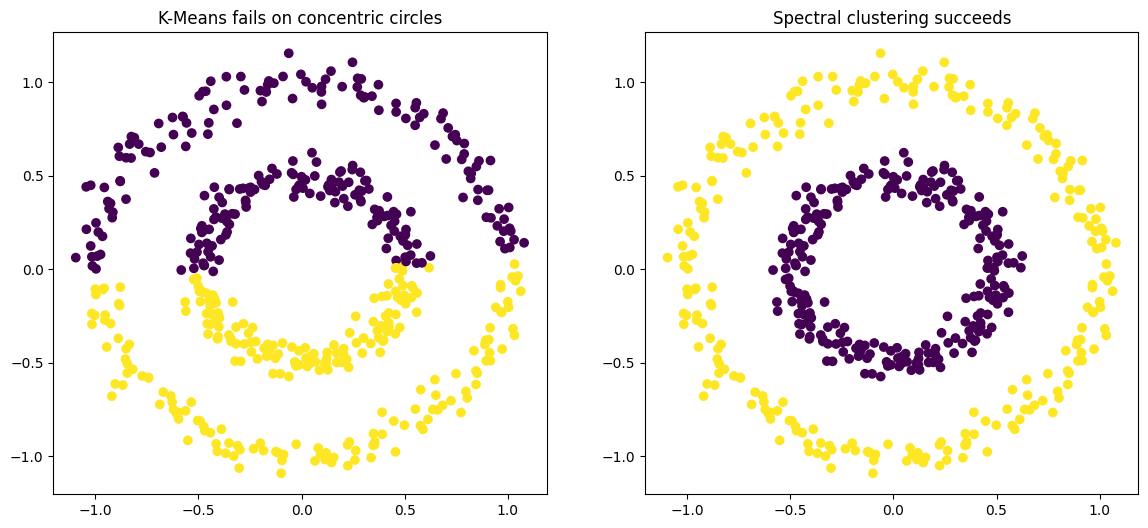

spectral_clusters = spectral.fit_predict(circles_data)The resulting side-by-side plot is definitive. The left plot shows K-Means failing miserably, cutting the two circles in half and grouping the wrong points together. The right plot shows Spectral Clustering perfectly identifying the inner circle as one cluster and the outer circle as the other. It succeeded because it understood the connectivity of the points, not just their spatial proximity.

Strengths:

CLIQUE: Excellent for high-dimensional data, finds clusters in subspaces, and provides interpretable results—it tells you which features define the cluster.

Spectral: Excellent for complex, non-convex cluster shapes. It's based on solid graph theory. Doesn't make strong assumptions about cluster form.

Pitfalls and computational hurdles:

CLIQUE: The grid-based approach can be sensitive to the number of bins chosen for each dimension. Also, its performance can degrade as the dimensionality of the subspace clusters increases.

Spectral: Its primary drawback is scalability. Building the similarity matrix is O(N2), and finding the eigenvectors is O(N3). This makes it unfeasible for very large datasets without using approximation techniques. It also requires specifying the number of clusters, K.

These methods gave us the tools to peer into more complex financial problems. CLIQUE could tell us which factors mattered for a given regime, while Spectral clustering could find regimes with bizarre, intertwined structures that other models couldn't see. But they were computationally heavy and still required careful tuning.

Fuzzy clustering

GMMs introduced us to soft assignments, but the underlying model was still rigid—a mixture of perfect Gaussians. What if the very concept of a cluster is inherently... fuzzy!? This is the core idea behind Fuzzy clustering, most famously implemented in the Fuzzy C-Means algorithm.

FCM relaxes the constraint from hard clustering that each data point must belong to exactly one cluster. Instead, it allows each point to belong to all clusters with a certain degree of membership, a value between 0 and 1. The sum of memberships for a single data point across all clusters must be 1.

FCM looks very similar to K-Means. It also iteratively updates cluster centers and point assignments. But instead of updating a simple assignment, it updates a large membership matrix, U, where uij is the degree of membership of data point xi in cluster cj.

The objective function it tries to minimize is:

The algorithm updates the memberships uij and cluster centers cj in a loop until convergence. A key difference from GMM is that FCM doesn't assume any underlying probability distribution for the clusters.

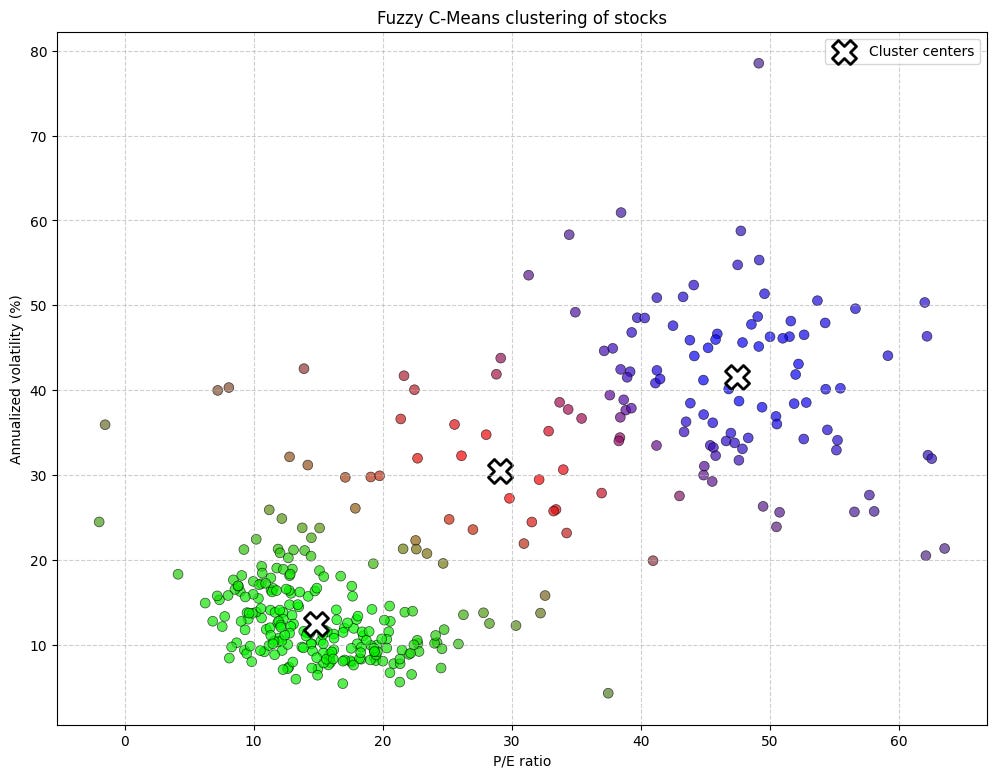

This concept was a breakthrough for our portfolio construction process. Imagine we have clustered a universe of stocks into "Value," "Growth," and "Momentum" regimes.

K-Means: Would tell us Stock A is 100% value.

GMM: Might tell us Stock A has a 70% probability of being value and 30% of being growth.

Fuzzy C-Means: Would say Stock A has a membership of 0.7 to the value cluster, 0.25 to growth and 0.05 to Momentum.

This is a different and more intuitive way to think about it. An asset like Apple might exhibit strong growth characteristics, some value characteristics—due to its cash flow—and some momentum characteristics at different times. Fuzzy C-Means provides a natural framework to represent this ambiguity. We could directly use these membership values as weights in a dynamic portfolio allocation strategy. Let’s see an example:

import numpy as np

from sklearn.preprocessing import StandardScaler

# --- Self-Contained Fuzzy C-Means implementation ---

def fuzzy_c_means_simple(X, n_clusters, m=2.0, max_iter=150, error_threshold=1e-5):

"""

A simplified implementation of the Fuzzy C-Means algorithm.

Args:

X (np.ndarray): The input scaled data, shape (n_samples, n_features).

n_clusters (int): The number of clusters (C).

m (float): The "fuzzifier" parameter.

max_iter (int): Maximum number of iterations.

error_threshold (float): Threshold for convergence.

Returns:

tuple: A tuple containing:

- u (np.ndarray): The final membership matrix.

- centers (np.ndarray): The final cluster centers.

"""

n_samples = X.shape[0]

# 1. Initialize membership matrix 'u' randomly.

# Each row must sum to 1.

u = np.random.rand(n_samples, n_clusters)

u = u / np.sum(u, axis=1)[:, np.newaxis]

for i in range(max_iter):

u_old = u.copy()

# 2. Calculate cluster centers (centroids).

# This is a weighted average, where weights are the powered memberships.

um = u ** m

centers = (um.T @ X) / np.sum(um, axis=0)[:, np.newaxis]

# 3. Update the membership matrix 'u'.

distance = np.zeros((n_samples, n_clusters))

for j in range(n_clusters):

# Calculate distance from each point to the new cluster center.

distance[:, j] = np.linalg.norm(X - centers[j], axis=1)

# To avoid division by zero if a point is on a center.

distance = np.fmax(distance, np.finfo(np.float64).eps)

# Calculate new memberships based on inverse distances.

inv_dist = distance ** (-2 / (m - 1))

u = inv_dist / np.sum(inv_dist, axis=1)[:, np.newaxis]

# 4. Check for convergence.

if np.linalg.norm(u - u_old) < error_threshold:

break

return u, centers

# --- 1. Generate synthetic financial data ---

# We will create a dataset of stocks based on two metrics:

# - Feature 1: P/E Ratio (Price-to-Earnings) - A valuation metric.

# - Feature 2: Annualized Volatility (%) - A risk metric.

np.random.seed(42)

# Cluster 1: "Value Stocks" (Low P/E, Low Volatility)

value_stocks = np.random.randn(100, 2) * [3, 4] + [12, 15]

# Cluster 2: "Growth Stocks" (High P/E, High Volatility)

growth_stocks = np.random.randn(100, 2) * [8, 10] + [45, 40]

# Cluster 3: "Utility-like Stocks" (Moderate P/E, Very Low Volatility)

utility_stocks = np.random.randn(100, 2) * [4, 2] + [18, 10]

# Create some ambiguous stocks that lie between the main clusters

ambiguous_stocks = np.random.randn(50, 2) * [10, 10] + [25, 25]

# Combine into a single dataset

X = np.vstack([value_stocks, growth_stocks, utility_stocks, ambiguous_stocks])

# It's crucial to scale the data for most clustering algorithms

scaler = StandardScaler()

X_scaled = scaler.fit_transform(X)

# --- 2. Apply Fuzzy C-Means clustering ---

# Call our self-contained function instead of the external library.

u, cluster_centers_scaled = fuzzy_c_means_simple(X_scaled, n_clusters=3, m=2)The points that appear as nearly pure red, pure green, or pure blue are the stocks that the model has confidently assigned to a single cluster. For example, the group in the top-right is likely a pure green, representing our growth stocks—high P/E, high volatility—which have a membership probability close to [0, 1, 0].

Strengths:

The concept of shared membership is a very natural fit for financial assets, which rarely fit into neat, single categories.

Like GMM, it is less susceptible to the hard boundaries of K-Means.

Pitfalls and fuzziness overload:

Just as K-Means has the

Kproblem, FCM has themproblem. The choice of the fuzzifier parametermis not trivial and significantly impacts the results. There is no perfect theory for choosing the bestm.Like K-Means, it finds cluster centers and is best suited for spherical/globular cluster shapes. It inherits the same problems when dealing with complex geometries.

It does not have a mechanism for handling noise or outliers. Every point is forced to have some degree of membership in all clusters, so an outlier can still distort the results by pulling centers off course.

It isn’t a universal solution to the regime detection problem, as it shared many of the geometric limitations of its hard cousin, K-Means.

Neural network-based methods

Our final algorithm takes us into neural networks. We aren't looking for a deep-learning prediction engine, but rather a different way to perform clustering and visualization. This leads us to a classic but incredibly powerful algorithm: the Self-Organizing Map, also known as a Kohonen map.

An SOM is a type of artificial neural network that is trained using unsupervised learning to produce a low-dimensional—typically 2D—discretized representation of the input space of the training samples. Think of it as creating a map of the market, where similar market days are located close to each other on the map.

The SOM consists of a grid of neurons or nodes. Each neuron j in this grid has a weight vector, wj∈Rd, which has the same dimension as our input data—e.g., our 50 features for a trading day. The training process is an iterative one of competition and cooperation.

Initialization: Each neuron's weight vector, wj, is initialized, often with small random values or by sampling from the input data.

Competition: For each input data point x—a specific trading day—the algorithm finds the neuron whose weight vector is most similar to it. This winning neuron is the Best Matching Unit. The similarity is measured by minimum Euclidean distance. The index of the BMU, i(x), is found by:

\(i(x) = \underset{j}{\operatorname{argmin}} \|x - w_j\|_2\)Cooperation & adaptation: The BMU's weight vector, and the weight vectors of its neighbors on the 2D grid, are pulled slightly closer to the input data point. This update rule is the mathematical core of the SOM's learning process. For any neuron j, its weight vector at the next iteration, wj(t+1), is updated as follows:

\(w_j(t + 1) = w_j(t) + \alpha(t) \cdot h_{j,i(x)}(t) \cdot (x - w_j(t))\)Let's break down the components:

α(t) is the learning rate, a factor that decreases over time to allow for fine-tuning. A common form is exponential decay:

\(\alpha(t) = \alpha_0 \exp(-t/T_1).\)hj,i(x)(t) is the neighborhood function, which determines how much a neuron j gets to learn based on its grid-distance to the BMU, i(x). A typical Gaussian function is used:

\(h_{j,i(x)}(t) = \exp\left(-\frac{\text{dist}_{\text{grid}}(j, i(x))^2}{2\sigma(t)^2}\right) \)Here, distgrid is the distance on the 2D map, and σ(t) is the neighborhood radius, which also shrinks over time.

Over many iterations, this process causes the SOM to organize itself into a map that preserves the topology of the input data. High-dimensional data is projected onto the 2D grid, with similar inputs mapping to nearby neurons.

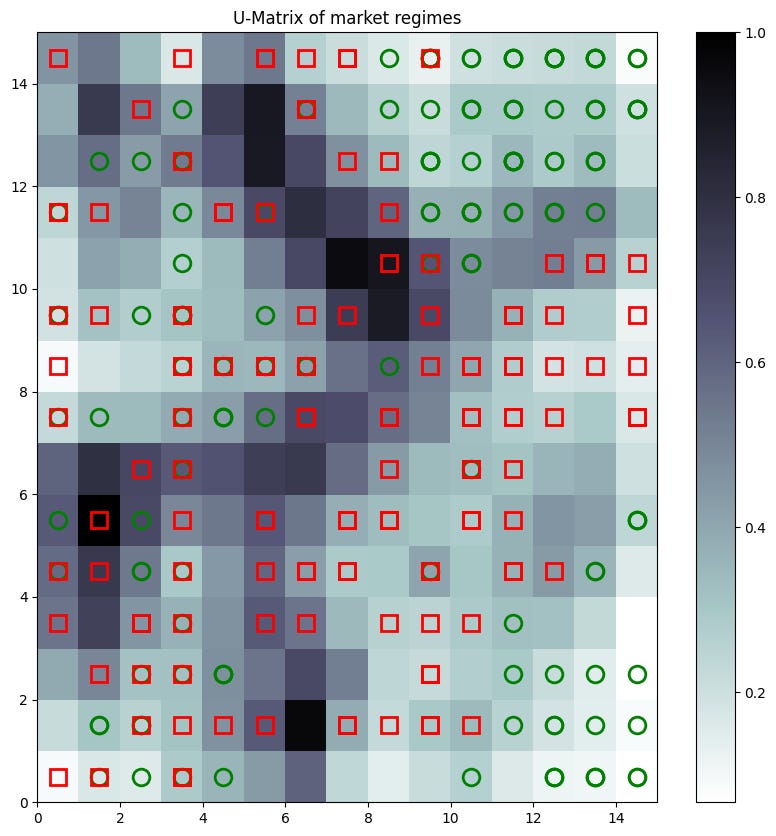

The U-Matrix gives us an intuitive, topographical view of our market regimes:

from minisom import MiniSom

import numpy as np

import matplotlib.pyplot as plt

# Using the 'scaled_data' from the K-Means example

data = scaled_data

# --- Initialize and train the SOM ---

# The grid size (e.g., 15x15) is a parameter to be chosen

map_size_x = 15

map_size_y = 15

# 3 features: return, vol change, and a dummy one for demonstration

som = MiniSom(map_size_x, map_size_y, data.shape[1], sigma=1.5, learning_rate=0.5,

neighborhood_function='gaussian', random_seed=42)

som.random_weights_init(data)

som.train_random(data, 500) # Train for 500 iterations

som.distance_map().TThe background colors—from the U-Matrix—would show dark rivers separating lighter plains. The colored markers show where our original, known regimes were mapped. We would hopefully see the green circles—bull quiet—clustered in one light area, the red squares—bear volatile—in another, separated by a dark border.

Strengths:

Its greatest strength is the ability to project high-dimensional data onto a 2D map that is intuitive for human analysts. It's a fantastic tool for exploratory data analysis.

It reveals the structure and relationships of the data in a way that flat clustering can't.

It's a non-linear form of dimensionality reduction and clustering rolled into one.

Pitfalls:

An SOM doesn't output explicit cluster assignments. It creates a map that must then be interpreted, often by running a second clustering algorithm—like K-Means or DBSCAN—on the neuron weights themselves.

The map size, learning rate schedule, and neighborhood function all need to be chosen carefully. An incorrectly sized map can distort the topology.

Training can be slow for large datasets and large maps.

The map is a projection. It is an abstraction of reality and can sometimes create apparent relationships that don't exist in the original space.

The SOM didn't give us a final answer, but it gave us a new dashboard. It was the ultimate human-in-the-loop tool, a living map of the market that we could use to build intuition and validate the findings from our other, more rigid clustering models.

Okay, guys, we've seen that clustering can help or not depending on what you do. Don't worry, because I'll keep bringing you models that are better than those from classic ML.

Okay team! Great job today! This was mor than enough. It’s time to switch off. Stay curious, stay boundless, stay quanty! 🕹️

PS: Would you like me to show you some online clustering model?

This is an invitation-only access to our QUANT COMMUNITY, so we verify numbers to avoid spammers and scammers. Feel free to join or decline at any time. Tap the WhatsApp icon below to join