[WITH CODE] Risk Engine: Position sizing

Experience next‑level performance with smarter positioning

Table of contents:

Introduction.

Limitations and hidden dangers of static position sizing.

Architectural design and operational framework.

Mathematical foundations and implementation.

Drawdown-based size reduction.

Profit-based size increase.

Recovery adjustment.

Algorithmic flow of the stochastic sizer.

Introducing abstraction via regret minimization.

Pruning of candidates.

Abstracted position sizer.

Introduction

You've got your algorithmic trading strategy, honed and backtested. It spots an opportunity, the signal fires, and you're ready to execute. But before the order goes live, there's that crucial, sometimes gut-wrenching question: How many contracts? How many shares?

This isn't just a minor detail; the position size is a direct multiplier of your strategy's outcome. It amplifies wins, yes, but it also amplifies losses. For a long time, the approach was often static: a fixed number, or a simple fixed percentage of capital. It felt straightforward, predictable even. But in the financial markets, relying on a single, predetermined bet size feels increasingly... inadequate. It's the central dilemma: using a rigid tool in a fluid environment.

The problem with a static position size quickly becomes apparent when the market stops behaving nicely—which, let's be honest, is often. If your strategy hits a rough patch – maybe the market regime shifted, or your model is temporarily out of sync – a fixed, large position size means every loss hits hard, accelerating drawdowns precisely when you need to preserve capital. It's like steering into a storm with the sail fully open because that's the size you started with.

Conversely, imagine your strategy finds its rhythm, navigating the market beautifully and racking up profits. If you're stuck with a small size decided upon when things were less certain, you're fundamentally limiting your upside. You're leaving potential alpha on the table simply because your risk allocation isn't scaling with your success. A fixed size ignores the vital feedback loop of performance, creating a dangerous disconnect between the risk being run and the system's actual ability to handle it in the current market context.

So then, why don’t you make position size adaptive?

Limitations and hidden dangers of static position sizing

Most trading systems use disappointingly simplistic approaches:

Fixed lot sizing: Always trade the same number of contracts.

Fixed fractional: Risk a consistent percentage of capital.

Kelly criterion: Optimize based on win rate and payoff ratio.

These approaches ignore a fundamental truth about markets: they're living, breathing organisms that cycle through regimes of volatility, trending behavior, and choppy consolidation. A position sizing strategy that works beautifully in a bull market might prove disastrous during a flash crash.

Consider these risks of conventional approaches:

Volatility blindness: Fixed lot sizes ignore changing market conditions.

Recovery challenges: After drawdowns, fixed fractional models struggle to rebuild capital.

Parameter sensitivity: Methods like Kelly are highly sensitive to estimation errors.

Black swan vulnerability: Most models perform poorly during extreme market events.
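
Two of these risks are easy to put numbers on. The sketch below is an illustration under stated assumptions, not part of the sizer: required_gain computes the return needed to climb back to the peak after a fractional drawdown d, which is d / (1 - d), and kelly_fraction is the standard binary-outcome Kelly formula f* = p - (1 - p) / b evaluated at slightly different win-rate estimates. Both function names and all inputs are hypothetical.

```python
def required_gain(drawdown: float) -> float:
    """Return needed to recover a fractional drawdown: d / (1 - d)."""
    if not 0.0 <= drawdown < 1.0:
        raise ValueError("drawdown must be in [0, 1)")
    return drawdown / (1.0 - drawdown)


def kelly_fraction(win_rate: float, payoff_ratio: float) -> float:
    """Binary-outcome Kelly fraction f* = p - (1 - p) / b (may be negative)."""
    return win_rate - (1.0 - win_rate) / payoff_ratio


# Recovery gets harder non-linearly as the drawdown deepens.
for dd in (0.10, 0.25, 0.50):
    print(f"drawdown {dd:.0%} -> gain needed {required_gain(dd):.1%}")

# A few points of error in the estimated win rate move the Kelly fraction a lot.
for p in (0.50, 0.55, 0.60):
    print(f"win rate {p:.2f} -> Kelly fraction {kelly_fraction(p, payoff_ratio=1.0):.2f}")
```

With an even payoff, an estimated win rate of 0.55 suggests staking about 10% of capital, while a true win rate of 0.50 means the correct stake is zero. A 50% drawdown, in turn, needs a 100% gain just to get back to the previous peak.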

Architectural design and operational framework

The pivotal insight that drives our exploration is this: position sizing should be as dynamic and adaptive as the markets themselves. What if we could develop a system that:

Expands positions during favorable periods.

Contracts during drawdowns to preserve capital.

Smoothly recovers after periods of losses.

Automatically adapts to changes in the system's performance.

This brings us to what I have dubbed the Stochastic Position Sizer: a framework that treats position sizing not as a static formula but as a dynamic process. Translating the concept of adaptive, performance-contingent sizing into a functional algorithm requires defining specific rules and mechanisms.

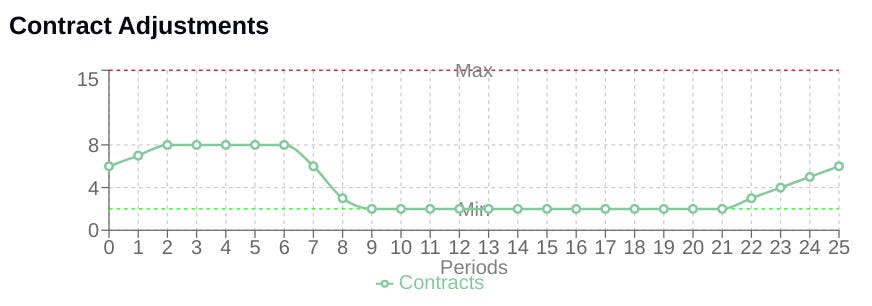

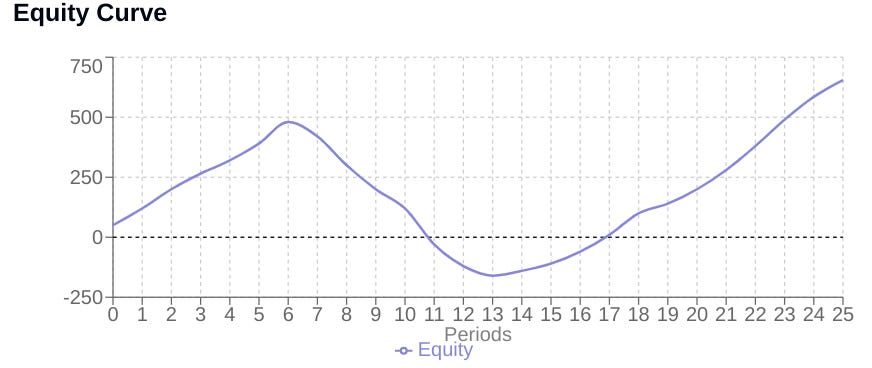

The StochasticPositionSizer is designed to dynamically adjust position size based on the system's performance history, particularly its Profit and Loss and resulting equity curve. It is initialized with boundaries min_contracts, max_contracts and a starting size initial_contracts. Its adaptive behavior is governed by several key parameters:

drawdown_sensitivity: Controls how aggressively position size is reduced as the system enters or deepens a drawdown. Higher sensitivity leads to steeper reductions.

profit_smoothing: Determines the influence of recent positive PnL on scaling up position size. A higher value allows recent profits to more readily increase size.

recovery_rate: Specifies how quickly position size is increased towards the initial_contracts level when the system is showing signs of recovering from a drawdown, i.e., the drawdown is shrinking.

lookback_period: Defines the window size for calculating recent performance metrics, such as average PnL.

These parameters calibrate the sizer's response profile, allowing it to be more risk-averse during losses and more aggressive during profitable periods, according to the system designer's preference.

But before implementing it, let's take a look at the mathematical background.

Mathematical foundations of adaptive sizing

The core of the StochasticPositionSizer's logic resides in how it mathematically adjusts the position size based on calculated performance metrics within its update method.

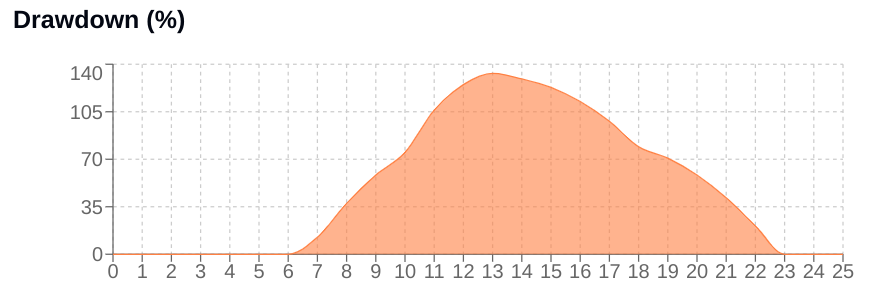

Drawdown-based size reduction

Drawdown (DD) is a critical metric representing the decline from the peak equity reached so far, expressed as a fraction of that peak. It is calculated as:

$$DD_t = \frac{\text{Peak}_t - \text{Equity}_t}{\left|\text{Peak}_t\right|}, \qquad \text{Peak}_t = \max_{i \le t} \text{Equity}_i$$

The absolute value in the denominator provides robustness, though for established positive equity curves, it simplifies to just the peak. In the code, the drawdown is set to 0 when equity is at its peak or when the peak itself is 0.

The size adjustment based on drawdown uses an exponential decay function:

$$\text{Size}_{\text{adj}} = \text{Size} \cdot e^{-\text{drawdown\_sensitivity} \cdot DD_t}$$

Here, e is the base of the natural logarithm. This formula ensures a non-linear response: small drawdowns result in minor size reductions (factor close to 1), while larger drawdowns lead to increasingly aggressive reductions (factor decaying towards 0). A higher drawdown_sensitivity makes this reduction curve steeper. This mechanism is fundamental for dynamic capital preservation during unfavorable periods.
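
To get a feel for the numbers, here is a small standalone sketch of the two steps. It mirrors the logic of _calculate_drawdown and _adjust_for_drawdown from the full script, but the free functions and the sample equity curve are made up for illustration.

```python
import math
from typing import Sequence


def drawdown(equity_curve: Sequence[float]) -> float:
    """Fractional decline of the latest equity value from the running peak."""
    peak = max(equity_curve)
    current = equity_curve[-1]
    if current >= peak or peak == 0:
        return 0.0
    return (peak - current) / abs(peak)


def drawdown_factor(dd: float, sensitivity: float) -> float:
    """Multiplier applied to the position size: exp(-sensitivity * dd)."""
    return math.exp(-sensitivity * dd)


equity = [0.0, 50.0, 120.0, 200.0, 160.0]  # hypothetical cumulative PnL
dd = drawdown(equity)
print(f"drawdown = {dd:.2f}")
for k in (1.0, 2.0, 4.0):
    print(f"sensitivity {k}: size multiplier {drawdown_factor(dd, k):.3f}")
```

A 20% drawdown with a sensitivity of 2.0 cuts the size to roughly two thirds, since e^(-0.4) is about 0.67. In the full script the multiplier is applied to the current size on every update, so the reduction compounds for as long as the drawdown persists.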

Profit-based size increase

Positive recent performance encourages scaling up. The sizer looks at the average PnL over the lookback_period.

    avg_pnl = sum(recent_pnl) / len(recent_pnl)

When it is positive, this average PnL, relative to the equity scale (represented in the code by the sum of the equity curve for normalization), contributes to a growth_factor:

$$\text{GrowthFactor} = 1 + \frac{\text{avg\_pnl}}{\left|\sum_i \text{Equity}_i\right|} \cdot \text{profit\_smoothing}$$

This factor influences how much of the remaining distance to the max_contracts is added to the current size:

    distance_to_max = self.max_contracts - contracts
    if distance_to_max > 0:
        contracts += distance_to_max * (1 - math.exp(-growth_factor)) * 0.1

The term $1 - e^{-\text{GrowthFactor}}$ increases towards 1 as GrowthFactor increases. The additional 0.1 multiplier in the code dampens this effect, ensuring that scaling up towards the maximum is gradual and smoothed, preventing over-leveraging based on short-term volatility spikes.
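
A worked step makes the damping concrete. The sketch below re-implements the profit adjustment as a free function; it follows _adjust_for_profit in the full script, while the function name, the total_equity argument and the sample inputs are assumptions for illustration.

```python
import math


def profit_step(contracts: float, max_contracts: float, avg_pnl: float,
                total_equity: float, profit_smoothing: float) -> float:
    """Move a damped fraction of the way towards max_contracts when avg_pnl is positive."""
    if avg_pnl <= 0:
        return contracts
    growth_factor = 1 + (avg_pnl / abs(total_equity)) * profit_smoothing
    distance_to_max = max_contracts - contracts
    if distance_to_max > 0:
        contracts += distance_to_max * (1 - math.exp(-growth_factor)) * 0.1
    return contracts


# Hypothetical inputs: 5 contracts, cap of 15, average PnL of 50 on an equity scale of 1000.
new_size = profit_step(contracts=5.0, max_contracts=15.0, avg_pnl=50.0,
                       total_equity=1000.0, profit_smoothing=0.5)
print(f"{new_size:.3f}")
```

Because growth_factor starts at 1, any positive average PnL closes at least (1 - e^(-1)) x 0.1, or about 6.3%, of the gap to max_contracts per update in the code as written. The size of the profit mostly changes how far above that floor the step lands.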

Recovery adjustment

When the system's drawdown percentage begins to decrease—prev_dd > self.current_drawdown—indicating a recovery phase, a specific adjustment is applied.

    def _apply_recovery(self, contracts: float, improving: bool) -> float:
        if not improving:
            return contracts
        distance_to_initial = self.initial_contracts - contracts
        if distance_to_initial > 0:  # Only if current size is below initial
            contracts += distance_to_initial * self.recovery_rate
        return contracts

If the system is improving and the current size is below the initial_contracts, a fraction, recovery_rate, of the distance back towards the initial size is added. This provides a focused mechanism to help the position size rebound towards a baseline operating level once the system shows signs of emerging from a slump.
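
Applied in isolation, the recovery rule closes a fixed fraction of the gap on every improving step, so the gap shrinks geometrically. The sketch below shows this with a standalone copy of the rule; the starting size and the number of steps are made up, and in the full update the drawdown and profit adjustments (and the final rounding) act on the size as well.

```python
def apply_recovery(contracts: float, initial_contracts: float,
                   recovery_rate: float, improving: bool) -> float:
    """Add recovery_rate of the distance back to initial_contracts while improving."""
    if not improving:
        return contracts
    distance_to_initial = initial_contracts - contracts
    if distance_to_initial > 0:
        contracts += distance_to_initial * recovery_rate
    return contracts


size = 2.0
path = []
for _ in range(4):  # four consecutive improving steps
    size = apply_recovery(size, initial_contracts=5.0, recovery_rate=0.3, improving=True)
    path.append(round(size, 3))
print(path)
```

After n improving steps the remaining gap is the original gap times (1 - recovery_rate)^n, so a rate of 0.3 leaves about a quarter of the gap after four steps.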

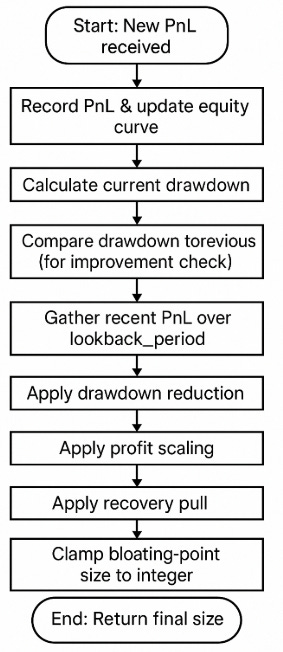

Algorithmic flow of the stochastic sizer

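The update method integrates these calculations sequentially. Upon receiving a new PnL:

It appends the PnL to the history and extends the equity curve.

It recomputes the drawdown and flags the system as improving if the drawdown shrank since the previous step.

It collects up to lookback_period of the most recent PnL values.

Starting from the current size, it applies the drawdown reduction, then the profit-based increase, then the recovery adjustment.

It clamps the result to the range between min_contracts and max_contracts and rounds it to an integer.

This flow ensures that risk-off (drawdown reduction) is the primary response, followed by risk-on (profit scaling) and recovery logic.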

Besides, there are some granularity considerations. While the StochasticPositionSizer provides adaptive sizing, it calculates a floating-point target size and then rounds it to an integer. In many trading environments, position sizes are discrete integer units. This raises a question: is calculating a precise float necessary or potentially noisy? Constantly adjusting towards a theoretical continuous value before rounding might introduce small, frequent changes that aren't strictly necessary or could be sensitive to minor PnL variations. This highlights a potential area for refinement – managing the decision space to a discrete set of options.
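
The concern can be illustrated with a toy comparison. In the sketch below, a target that hovers around 4.5 flips the rounded size back and forth, while snapping to a coarse grid of candidate sizes keeps it steady. The target values, the grid and both function names are invented for illustration.

```python
from typing import List, Sequence


def round_sizes(targets: Sequence[float]) -> List[int]:
    """Round each continuous target to the nearest integer."""
    return [int(round(t)) for t in targets]


def snap_sizes(targets: Sequence[float], candidates: Sequence[int]) -> List[int]:
    """Pick, for each target, the candidate size closest to it."""
    return [min(candidates, key=lambda s: abs(s - t)) for t in targets]


targets = [4.4, 4.6, 4.45, 4.7, 4.3]   # hypothetical float targets
print(round_sizes(targets))            # the rounded size alternates between two values
print(snap_sizes(targets, [2, 5, 8]))  # the snapped size stays on one candidate
```

The trade-off is resolution: a coarse grid suppresses small, frequent changes, but it also cannot express sizes between its candidates.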

Introducing abstraction via regret minimization

To address the granularity consideration and add a layer of stability, the AbstractedStochasticPositionSizer is introduced. This class maintains a limited set of candidate position sizes and, instead of simply rounding the calculated target size, selects the closest size from this curated list.

The selection and maintenance of this candidate list are managed using a form of regret minimization. After each PnL update, the system calculates the hypothetical PnL that would have been achieved if each candidate size s had been used instead of the actual size at step t:

$$\text{HypoPnL}_t(s) = \text{PnL}_t \cdot \frac{s}{\text{SizeUsed}_t}$$

Where $\text{SizeUsed}_t$ is self.current_contracts from the previous step in the code.

The system identifies the candidate size that yielded the highest hypothetical PnL for that step. The "regret" for every other candidate is the difference between the best hypothetical PnL and their own:

$$\text{Regret}_t(s) = \max_{s'} \text{HypoPnL}_t(s') - \text{HypoPnL}_t(s)$$

This regret accumulates for each candidate over a defined prune_interval.
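
As a worked example, the sketch below accumulates regret for three candidate sizes over two steps, one losing and one winning. It follows the same arithmetic as _update_regrets in the full script, but the standalone function, the candidate list and the PnL values are assumptions chosen to keep the numbers round.

```python
from typing import Dict, Sequence


def step_regrets(pnl: float, size_used: int, candidates: Sequence[int]) -> Dict[int, float]:
    """Regret of each candidate for one step, relative to the best hypothetical PnL."""
    hypo = {s: pnl * s / (size_used or 1) for s in candidates}
    best = max(hypo.values())
    return {s: best - val for s, val in hypo.items()}


candidates = [2, 5, 10]
total = {s: 0.0 for s in candidates}
for pnl in (-100.0, 50.0):  # realized PnL while trading 5 contracts
    for s, r in step_regrets(pnl, size_used=5, candidates=candidates).items():
        total[s] += r
print(total)
```

Because the hypothetical PnL is linear in size, the best candidate on any single step is the largest one after a gain and the smallest one after a loss. Here the loss of 100 outweighs the gain of 50, so the smallest candidate ends the two steps with the least accumulated regret.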

Pruning of candidates

Periodically, after the prune_interval, the system prunes the candidate list based on the accumulated regret. Candidates are sorted by their total regret—lowest regret is best. Only the top target_range_size candidates with the least accumulated regret are retained. Their regret counters are reset to zero for the next interval.

    # Snippet: Regret Update and Candidate Pruning
    def _update_regrets(self, pnl: float):
        # Calculate hypothetical PnL for all candidates for this step
        hypo = {s: pnl * (s / (self.current_contracts or 1)) for s in self.candidate_sizes}
        best = max(hypo.values())  # Find the best outcome among candidates
        # Accumulate regret for those who weren't the best
        for s, val in hypo.items():
            self.regrets[s] += max(0, best - val)

    def prune_candidates(self):
        # Sort candidates by total regret (lowest first) and keep the best
        best = sorted(self.regrets.items(), key=lambda x: x[1])[:self.target_range_size]
        self.candidate_sizes = [int(s) for s, _ in best]
        # Reset regret for the survivors
        self.regrets = {s: 0.0 for s in self.candidate_sizes}

This mechanism ensures that the candidate set remains focused on the sizes that have empirically performed well (relative to the other candidates) over recent history, filtering out those that consistently led to suboptimal hypothetical results.
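
With some made-up regret totals, pruning reduces to a sort and a slice. The sketch below is a standalone version of the rule; the function name, the regret values and the target_range_size of 3 are hypothetical.

```python
from typing import Dict, List


def prune(regrets: Dict[int, float], target_range_size: int) -> List[int]:
    """Keep the target_range_size candidates with the lowest accumulated regret."""
    ranked = sorted(regrets.items(), key=lambda item: item[1])
    return [size for size, _ in ranked[:target_range_size]]


regrets = {2: 80.0, 5: 110.0, 8: 140.0, 10: 160.0, 15: 300.0}  # hypothetical totals
survivors = prune(regrets, target_range_size=3)
fresh_regrets = {s: 0.0 for s in survivors}  # counters restart for the next interval
print(survivors)
```

Python's sort is stable, so candidates with equal regret keep their existing order. Note also that, as written, the candidate list can only shrink: nothing in this scheme adds a size back once it has been pruned.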

Abstracted position sizer

This is the final step where updates happen:

Upon receiving PnL, it immediately updates the regret for the current candidates.

It runs the base StochasticPositionSizer's update method to get the target size based on all performance metrics. In the full script this value comes back already clamped and rounded to an integer.

It finds the candidate size from its approved list that is closest to this target size.

This closest candidate size is chosen as the actual current_contracts.

It checks if it's time to prune the candidate list based on the accumulated regret, and performs pruning if necessary.

The chosen integer size is stored and returned.

    # Snippet: Abstracted Sizer Update Flow
    def update(self, pnl: float) -> int:
        # 1. Update regret for candidates based on this PnL
        self._update_regrets(pnl)
        # 2. Calculate the target size using the core logic
        #    (the base update returns it clamped and rounded)
        new_size = super().update(pnl)
        # 3. Select the closest size from the current set of candidates
        chosen = int(min(self.candidate_sizes, key=lambda s: abs(s - new_size)))
        # 4. Use the chosen candidate size; the base update already appended
        #    its own size to the history, so overwrite that entry
        self.current_contracts = chosen
        self.historical_contracts[-1] = chosen
        # 5. Prune candidates periodically
        if len(self.historical_pnl) % self.prune_interval == 0:
            self.prune_candidates()
        return chosen

At this point, we have to ask ourselves: what is the outcome of all this? Imagine we traded without an algorithm that generates signals, simply going along with whatever the price does. What results would we have obtained?

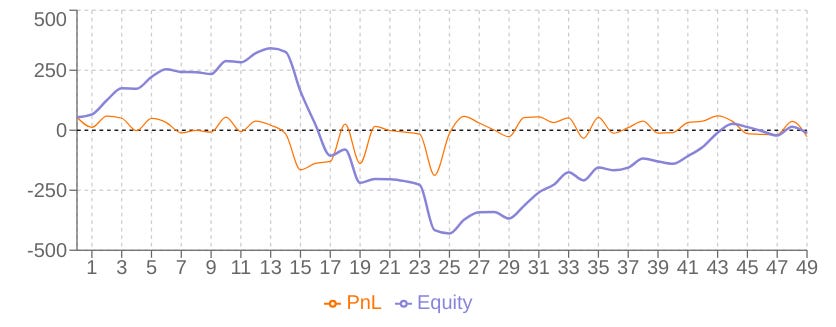

In scenarios where there is high volatility:

In scenarios of crash and recovery:

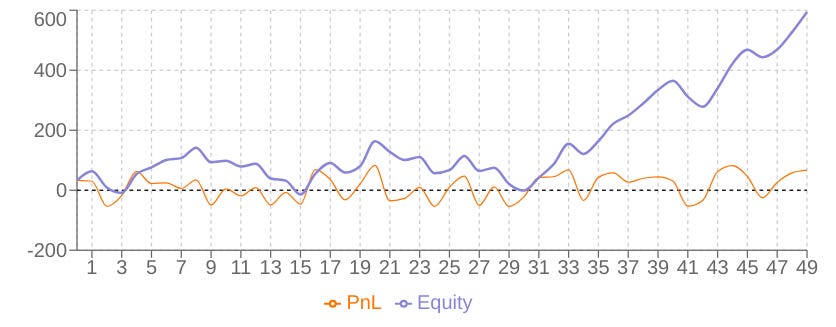

In bullish trending scenarios:

Awesome work today, team! Tomorrow we unleash more about quant modeling—until then, refine your signals, question your assumptions, and may your edge stay sharp and your drawdowns shallow! Stay sharp, stay quanty!🧠

PS: I don't know if you've noticed, but I'm trying to be more sober with math. Rate the newsletter based on this new format. Is this still a very high level?


Appendix

Full script:

    import numpy as np
    import matplotlib.pyplot as plt
    import math
    from typing import List, Dict, Optional


    class StochasticPositionSizer:
        """
        STOCHASTIC-POSITION-SIZER: Dynamic position sizing driven by stochastic performance metrics.
        Adjusts contract sizes based on realized PnL, drawdown sensitivity,
        profit smoothing, and recovery behavior.
        """

        def __init__(
            self,
            min_contracts: int,
            max_contracts: int,
            initial_contracts: Optional[int] = None,
            drawdown_sensitivity: float = 2.0,
            profit_smoothing: float = 0.5,
            recovery_rate: float = 0.3,
            lookback_period: int = 20
        ):
            self.min_contracts = min_contracts
            self.max_contracts = max_contracts
            self.initial_contracts = (
                initial_contracts
                if initial_contracts is not None
                else (min_contracts + max_contracts) // 2
            )
            self.drawdown_sensitivity = drawdown_sensitivity
            self.profit_smoothing = profit_smoothing
            self.recovery_rate = recovery_rate
            self.lookback_period = lookback_period
            # State tracking
            self.current_contracts: int = self.initial_contracts
            self.historical_pnl: List[float] = []
            self.historical_contracts: List[int] = [self.initial_contracts]
            self.equity_curve: List[float] = [0.0]
            self.current_drawdown: float = 0.0
            self.drawdown_history: List[float] = [0.0]

        def _calculate_drawdown(self) -> float:
            peak = max(self.equity_curve)
            current = self.equity_curve[-1]
            if current >= peak or peak == 0:
                return 0.0
            return (peak - current) / abs(peak)

        def _adjust_for_drawdown(self, contracts: float, drawdown: float) -> float:
            return contracts * math.exp(-self.drawdown_sensitivity * drawdown)

        def _adjust_for_profit(self, contracts: float, recent_pnl: List[float]) -> float:
            if not recent_pnl:
                return contracts
            avg_pnl = sum(recent_pnl) / len(recent_pnl)
            total_equity = sum(self.equity_curve) or 1e-6
            if avg_pnl > 0:
                growth_factor = 1 + (avg_pnl / abs(total_equity)) * self.profit_smoothing
                distance_to_max = self.max_contracts - contracts
                if distance_to_max > 0:
                    contracts += distance_to_max * (1 - math.exp(-growth_factor)) * 0.1
            return contracts

        def _apply_recovery(self, contracts: float, improving: bool) -> float:
            if not improving:
                return contracts
            distance_to_initial = self.initial_contracts - contracts
            if distance_to_initial > 0:
                contracts += distance_to_initial * self.recovery_rate
            return contracts

        def update(self, pnl: float) -> int:
            # Update equity and PnL history
            current_equity = self.equity_curve[-1] + pnl
            self.equity_curve.append(current_equity)
            self.historical_pnl.append(pnl)
            # Update drawdown
            prev_dd = self.current_drawdown
            self.current_drawdown = self._calculate_drawdown()
            self.drawdown_history.append(self.current_drawdown)
            improving = prev_dd > self.current_drawdown
            # Profit smoothing
            lookback = min(self.lookback_period, len(self.historical_pnl))
            recent_pnl = self.historical_pnl[-lookback:] if lookback else []
            # Base sizing adjustments
            size = float(self.current_contracts)
            size = self._adjust_for_drawdown(size, self.current_drawdown)
            size = self._adjust_for_profit(size, recent_pnl)
            size = self._apply_recovery(size, improving)
            size = max(self.min_contracts, min(self.max_contracts, size))
            # Finalize contract count
            self.current_contracts = int(round(size))
            self.historical_contracts.append(self.current_contracts)
            return self.current_contracts

        def run_sequence(self, pnl_sequence: List[float]) -> List[int]:
            """
            Process a sequence of PnL values and return the resulting contract sizes.
            """
            contracts_list: List[int] = []
            for pnl in pnl_sequence:
                contracts_list.append(int(self.update(pnl)))
            return contracts_list

        def visualize(self, pnl_sequence: List[float]) -> None:
            """
            Visualize the strategy performance for a given PnL sequence.
            Does not return values; use `run_sequence` to get contract array.
            """
            # Optional: reset state here if needed
            # Plot preparation
            for pnl in pnl_sequence:
                self.update(pnl)
            fig, axs = plt.subplots(3, 1, figsize=(12, 16), gridspec_kw={'height_ratios': [2, 1, 1]})
            fig.suptitle("STOCHASTIC-POSITION-SIZER Performance Analysis", fontsize=16)
            axs[0].plot(self.equity_curve, label='Equity', linewidth=2)
            axs[0].set_title('Equity Curve')
            axs[0].axhline(0, linestyle=':')
            axs[0].legend()
            axs[0].grid(True)
            axs[1].fill_between(range(len(self.drawdown_history)),
                                [100 * d for d in self.drawdown_history],
                                alpha=0.3)
            axs[1].set_title('Drawdown (%)')
            axs[1].grid(True)
            axs[2].plot(self.historical_contracts, label='Contracts', linewidth=2)
            axs[2].axhline(self.max_contracts, linestyle='--', label='Max')
            axs[2].axhline(self.min_contracts, linestyle='--', label='Min')
            axs[2].set_title('Contract Adjustments')
            axs[2].set_xlabel('Periods')
            axs[2].legend()
            axs[2].grid(True)
            plt.tight_layout()
            plt.show()

    class AbstractedStochasticPositionSizer(StochasticPositionSizer):
        """
        Extends StochasticPositionSizer with range abstraction via regret minimization.
        Maintains a reduced candidate set of contract sizes,
        periodically pruned based on accumulated regret.
        """

        def __init__(
            self,
            min_contracts: int,
            max_contracts: int,
            initial_contracts: Optional[int] = None,
            drawdown_sensitivity: float = 2.0,
            profit_smoothing: float = 0.5,
            recovery_rate: float = 0.3,
            lookback_period: int = 20,
            prune_interval: int = 50,
            target_range_size: int = 5,
            num_initial_candidates: int = 20
        ):
            super().__init__(
                min_contracts,
                max_contracts,
                initial_contracts,
                drawdown_sensitivity,
                profit_smoothing,
                recovery_rate,
                lookback_period
            )
            self.prune_interval = prune_interval
            self.target_range_size = target_range_size
            # Generate Python int candidate sizes
            self.candidate_sizes = sorted({int(s) for s in
                                           np.linspace(min_contracts,
                                                       max_contracts,
                                                       num_initial_candidates)
                                           .round()})
            self.regrets = {s: 0.0 for s in self.candidate_sizes}

        def _update_regrets(self, pnl: float):
            hypo = {s: pnl * (s / (self.current_contracts or 1)) for s in self.candidate_sizes}
            best = max(hypo.values())
            for s, val in hypo.items():
                self.regrets[s] += max(0, best - val)

        def prune_candidates(self):
            best = sorted(self.regrets.items(), key=lambda x: x[1])[:self.target_range_size]
            self.candidate_sizes = [int(s) for s, _ in best]
            self.regrets = {s: 0.0 for s in self.candidate_sizes}

        def update(self, pnl: float) -> int:
            self._update_regrets(pnl)
            new_size = super().update(pnl)
            chosen = int(min(self.candidate_sizes, key=lambda s: abs(s - new_size)))
            self.current_contracts = chosen
            # The base update already appended its own size for this step;
            # overwrite it so the history holds one entry per period
            self.historical_contracts[-1] = chosen
            if len(self.historical_pnl) % self.prune_interval == 0:
                self.prune_candidates()
            return chosen

    if __name__ == "__main__":
        example_sequence = [50, 70, 80, 65, 55, 70, 90,
                            -60, -120, -100, -80, -150, -90, -40,
                            20, 30, 50, 70, 90, 40, 60, 80,
                            100, 110, 95, 70]
        params = dict(
            min_contracts=2,
            max_contracts=15,
            initial_contracts=5,
            drawdown_sensitivity=2.5,
            profit_smoothing=0.4,
            recovery_rate=0.25,
            lookback_period=20,
            prune_interval=50,
            target_range_size=5,
            num_initial_candidates=20
        )
        # Visualization (this runs the sequence and mutates the sizer's state)
        system = AbstractedStochasticPositionSizer(**params)
        system.visualize(example_sequence)
        # Retrieve pure int contracts array from a fresh sizer, so the result
        # reflects a single pass over the sequence rather than a second one
        fresh_system = AbstractedStochasticPositionSizer(**params)
        contracts_array = fresh_system.run_sequence(example_sequence)
        print("Contracts array:", contracts_array)