AI: Fake news that adds volatility

How manufactured news will make your PnL more volatile than ever

Table of contents:

Introduction.

From headlines to quantitative signals.

Transformer-based models in financial news analysis.

Deepfakes in the old days.

Mechanics and dynamics of impact of deepfakes.

Step-by-step guide to create a deepfake engine.

Get your token.

Load libraries and functions.

Take a news story and fake it.

Introduction

Imagine an automated system—let’s call it Sentinel—designed to analyze stock market news and predict whether prices will rise or fall based on headline sentiment. Under normal conditions, genuine headlines provide clear signals that guide investors toward profitable decisions. However, the situation becomes complicated when sophisticated deepfake technology introduces fabricated headlines into the mix. These deepfakes, created by advanced artificial intelligence, replace real news with deceptive content. The result is a distortion of true market signals, misleading both human investors and algorithmic trading systems.

Deepfake interference creates abrupt reversals in sentiment signals. When manipulated headlines suggest overly positive performance or unwarranted pessimism, they distort market perception. This misalignment between the actual financial condition and the perceived sentiment introduces uncertainty and amplifies price volatility.

From headlines to quantitative signals

The old-timers call it sentiment analysis: the process of converting textual headlines into numerical signals. In the dark ages of data science, this was done with text mining, and I have to admit that it was complete shit. In our framework, each headline is assigned a score based on its content.

For instance, a headline such as “Tech Giant’s Profits Surge!” might receive a positive score, while a headline like “CEO Resigns Amid Financial Scandal” would receive a negative score.

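Then we can mathematically model the sentiment S of a headline made of n words $w_1, \dots, w_n$ as the sum of its word scores:

$$S = \sum_{i=1}^{n} s(w_i), \qquad s(w_i) \in \{-1, 0, +1\}$$

where $s(w_i)$ is +1 for a positive word, -1 for a negative word, and 0 for a neutral one.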

Consider a headline that contains both positive and negative cues, such as “Stocks rally but rumors hint at a crash.” If we assign +1 for rally and –1 for crash, the overall sentiment might cancel out to zero, indicating a neutral or ambiguous signal.
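To make the scoring rule concrete, here is a minimal lexicon-based sketch that sums word scores. The word list `LEXICON` and the function `sentiment_score` are hypothetical illustrations, not part of Sentinel. A real system would use a curated financial lexicon or a trained model, but this toy version reproduces the rally/crash example above.

```python
import re

# Hypothetical toy lexicon: +1 for positive cues, -1 for negative cues.
# Any word not listed here scores 0 (neutral).
LEXICON = {
    "surge": 1, "surges": 1, "profits": 1, "rally": 1, "beat": 1,
    "crash": -1, "resigns": -1, "scandal": -1, "plunge": -1, "fraud": -1,
}

def sentiment_score(headline: str) -> int:
    """Return S, the sum of lexicon scores of the words in the headline."""
    words = re.findall(r"[a-z]+", headline.lower())
    return sum(LEXICON.get(w, 0) for w in words)

for h in [
    "Tech Giant's Profits Surge!",
    "CEO Resigns Amid Financial Scandal",
    "Stocks rally but rumors hint at a crash",
]:
    print(f"{sentiment_score(h):+d}  {h}")
```

The mixed headline scores 0 because "rally" and "crash" cancel. This is exactly the kind of ambiguity that context-aware models try to resolve.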

Now, if deepfakes are introduced into the data stream, they can invert or obscure these signals. A headline that should be clearly positive may be flipped to negative, and vice versa. This misclassification creates uncertainty and can lead to erratic trading behavior.

Notice how simulated deepfake events cause sudden reversals in the sentiment signal.

As you can see, the plot displays abrupt changes in sentiment. These disruptions are indicative of increased uncertainty that can drive erratic market behavior.
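Here is a minimal simulation of this effect. It rests on hypothetical assumptions, since the original simulation parameters are not given. The genuine daily sentiment is modeled as a slow cycle plus noise. On each day, with probability `p_fake`, a deepfake replaces the real headline and inverts its sentiment. The function names and parameter values are illustrative choices, not Sentinel's.

```python
import numpy as np

def simulate(n_days=250, p_fake=0.05, seed=42):
    """Return (genuine, observed, is_fake) daily sentiment series."""
    rng = np.random.default_rng(seed)
    t = np.arange(n_days)
    # Hypothetical genuine sentiment: a slow 100-day cycle plus small noise.
    genuine = np.sin(2 * np.pi * t / 100) + rng.normal(0.0, 0.1, n_days)
    # Hypothetical attack: each day, with probability p_fake, a deepfake
    # replaces the real headline and inverts the sign of its sentiment.
    is_fake = rng.random(n_days) < p_fake
    observed = np.where(is_fake, -genuine, genuine)
    return genuine, observed, is_fake

def reversals(signal):
    """Count day-over-day sign changes in a sentiment series."""
    signs = np.sign(signal)
    return int(np.sum(signs[1:] != signs[:-1]))

def signal_volatility(signal):
    """Standard deviation of day-over-day changes in a sentiment series."""
    return float(np.std(np.diff(signal)))

genuine, observed, is_fake = simulate()
print("deepfake days:", int(is_fake.sum()))
print("sign reversals, genuine vs observed:", reversals(genuine), reversals(observed))
print("volatility, genuine vs observed:",
      round(signal_volatility(genuine), 3), round(signal_volatility(observed), 3))
```

Each inverted day creates a jump roughly twice the size of the genuine signal. This is why the volatility of the observed series rises well above that of the genuine one. To draw the plot, pass `genuine` and `observed` to `matplotlib.pyplot.plot`.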

Transformer-based models in financial news analysis

Earlier language models, such as those based on the transformer architecture, revolutionized text processing. These models rely on an attention mechanism that, for each word in an input sequence, assigns a score to every other word, helping the model focus on the most relevant parts of a headline.

The attention mechanism can be mathematically described by the equation:

$$\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^\top}{\sqrt{d}}\right)V$$

where:

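Q (queries) and K (keys) are matrices obtained by linearly projecting the token embeddings of the input text. The dot product of a query row with a key row measures how relevant one token is to another.

V (values) holds a third linear projection of the token embeddings. Each output row is a weighted average of the rows of V.

d is the dimensionality of the key vectors. Dividing the scores by √d keeps them from growing with d, which would otherwise push the softmax toward a nearly one-hot output.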

Take a look at this classic paper to learn more about the attention layer: "Attention Is All You Need" (Vaswani et al., 2017).

This process allows the model to assign a weight to each word based on its importance in the context of the entire sentence.
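Here is a minimal NumPy sketch of scaled dot-product attention, softmax(QKᵀ/√d)V. The token embeddings and projection matrices are random placeholders, not weights from a trained model, so the resulting attention pattern is meaningless. The point is the computation itself: every row of `weights` is a probability distribution over the tokens.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max before exponentiating for numerical stability.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Return (output, weights) for softmax(Q K^T / sqrt(d)) V."""
    d = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d)       # (n_queries, n_keys) relevance scores
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
n_tokens, d_model, d = 5, 16, 8
X = rng.normal(size=(n_tokens, d_model))  # placeholder token embeddings
# Random, untrained projection matrices (placeholders for learned weights).
W_q, W_k, W_v = (rng.normal(size=(d_model, d)) for _ in range(3))
output, weights = scaled_dot_product_attention(X @ W_q, X @ W_k, X @ W_v)
print(weights.round(2))
print(output.shape)
```

Row i of `weights` is exactly one row of the attention heatmap: how much token i attends to each token in the headline.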

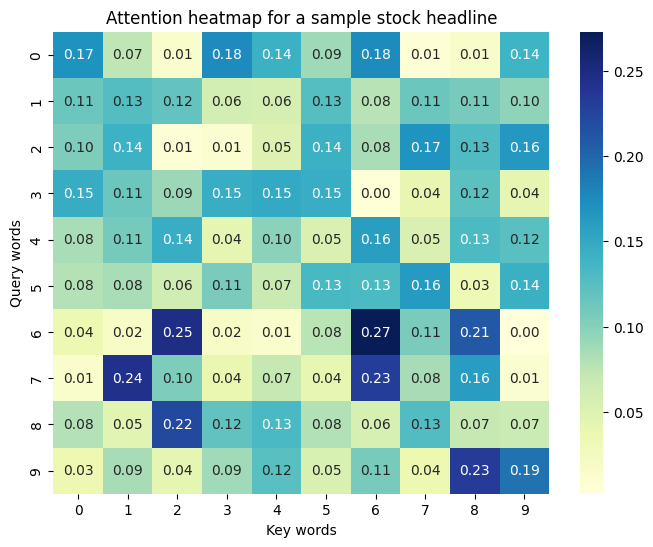

Below is an example of an attention matrix for a sample stock headline. The heatmap shows how much attention each word or token in the headline pays to every other token. When deepfakes alter the input text, these focus areas can shift unexpectedly, degrading the model's interpretation of the headline and any signal derived from it.
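To see how a single swapped word reshapes the attention pattern, the sketch below compares the attention matrices of a genuine and a tampered headline. It relies on two hypothetical stand-ins. The first is `embed`, which maps each word to a deterministic random vector in place of trained embeddings. The second is a pair of fixed random matrices `W_Q` and `W_K` in place of learned projections. The numbers therefore illustrate the mechanics only, not a real model's behavior.

```python
import zlib
import numpy as np

DIM, D_K = 16, 8
_rng = np.random.default_rng(0)
# Hypothetical fixed projections standing in for learned weights.
W_Q = _rng.normal(size=(DIM, D_K)) / np.sqrt(DIM)
W_K = _rng.normal(size=(DIM, D_K)) / np.sqrt(DIM)

def embed(word):
    # Hypothetical embedding: a random vector seeded by the word's CRC32,
    # so the same word always maps to the same vector.
    return np.random.default_rng(zlib.crc32(word.encode("utf-8"))).normal(size=DIM)

def attention_matrix(tokens):
    X = np.stack([embed(t) for t in tokens])
    scores = (X @ W_Q) @ (X @ W_K).T / np.sqrt(D_K)
    scores -= scores.max(axis=1, keepdims=True)  # numerical stability
    w = np.exp(scores)
    return w / w.sum(axis=1, keepdims=True)      # row-wise softmax

genuine = "tech giant profits surge".split()
fake = "tech giant profits plunge".split()
A_real, A_fake = attention_matrix(genuine), attention_matrix(fake)

# Total variation distance between each token's two attention distributions.
shift = 0.5 * np.abs(A_real - A_fake).sum(axis=1)
print(A_real.round(2))
for tok, s in zip(genuine, shift):
    print(f"{tok:>8}: {s:.3f}")
```

Changing only the last word alters every row of the matrix, not just the last one. Every token's attention has to be redistributed over the new set of keys.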

Deepfakes in the old days

Years ago, deepfakes in financial news were created with machine-learning techniques, most notably Generative Adversarial Networks (GANs). These models produced synthetic content that tried to mimic real headlines, making it harder to distinguish genuine signals from deceptive ones.

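GANs are like that annoying twin brother competing against you for your parents' love. Basically, two neural networks, the generator G and the discriminator D, are pitted against each other. The GAN's objective is defined as:

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{real}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]$$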

where:

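$p_{\text{real}}$ represents the distribution of genuine stock headlines $x$.

$p_z$ is the noise distribution from which the inputs $z$ are drawn; the generator turns each $z$ into a synthetic headline $G(z)$.

The discriminator D outputs the probability that its input is a genuine headline and learns to distinguish real headlines from fake ones generated by G, while G learns to produce headlines that D accepts as real.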
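The tug-of-war is easier to see numerically. The sketch below gives a Monte Carlo estimate of the GAN objective V(D, G) = E[log D(x)] + E[log(1 − D(G(z)))], computed from discriminator outputs on a batch of real and a batch of generated headlines. The discriminator scores passed in are made-up numbers; no actual networks are trained here. D tries to push V up, toward 0, while G tries to push it down.

```python
import numpy as np

def gan_value(d_real, d_fake, eps=1e-12):
    """Monte Carlo estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator outputs D(x) on genuine headlines, x ~ p_real
    d_fake: discriminator outputs D(G(z)) on generated headlines, z ~ p_z
    """
    # Clip away from 0 and 1 so the logarithms stay finite.
    d_real = np.clip(np.asarray(d_real, dtype=float), eps, 1 - eps)
    d_fake = np.clip(np.asarray(d_fake, dtype=float), eps, 1 - eps)
    return float(np.mean(np.log(d_real)) + np.mean(np.log(1 - d_fake)))

# Made-up scores from a confident, correct discriminator: V is close to 0.
print(gan_value([0.99, 0.98, 0.97], [0.01, 0.02, 0.03]))
# A discriminator that cannot tell real from fake (D = 0.5 everywhere):
# V = -log 4 ≈ -1.386, the value at the theoretical equilibrium.
print(gan_value([0.5] * 3, [0.5] * 3))
```

When V sits at -log 4, the discriminator is no better than a coin flip. This is the situation the article warns about: fakes that cannot be told apart from genuine headlines.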

However, modern language models have improved to the point where it is almost impossible to tell whether a headline is fake. And be honest: what are you going to do when you read a news story? Check whether it's fake? Ha!

Politicians and influencers will share it, creating hype, and some people will believe whatever the headline says. So how is a modern fake actually produced? Let's look at the modern, LLM-based version in detail!