[WITH CODE] Data: Data cleaning and preparation

Can we validate trading strategies with poor data?

Introduction

Envision yourself as a bold fortune hunter, standing at the threshold of a vast mine. In your hands, you clutch a weathered treasure map—its edges worn and creased, vital markings smudged by water, and entire portions devoured by nibbling rodents. You know riches lie within, but your only guidance comes from faded scrawls and tear-soaked footprints.

Now substitute the following:

You → A trader or quantitative analyst.

Treasure map → Your set of historical market data.

Gold → Profits or that magical edge in the markets.

Welcome to the challenge of validating trading strategies with incomplete, noisy, or otherwise suspicious data—poor data. The question we tackle in these many, many words is simple: Can we confirm a trading strategy’s worth if the data is incomplete, messy, or downright suspicious?

Let's take the grand tour of low-quality data!

Before you can even think of validating a strategy, you need to know your adversary. Poor data isn’t a single, monolithic beast. It’s more like a band of rowdy ruffians—each with its own quirks and ability to trip you up at inopportune times. In this section, let’s examine the typical culprits:

Missing values

High noise

Short time spans

Misaligned or dirty data

We start with the first one. Ever seen a cheese with holes so large you can peer through it like a telescope? That's often how broker data looks: entire days with no recorded prices, incomplete volume information, or whole columns that vanish for a week. Check out this random chart and pay attention to the red circles. What the hell is this!? 🤨 I feel like the meme of the elephant and the penguin. And check out those hairy candles... WTF!? I encourage you to drop a comment if you find anything else!

Common examples include:

Suspended trading: When an exchange halts a stock for volatility.

Outage or glitch: An API fails to deliver data for a certain window of time.

Historic data gaps: The asset didn’t exist or wasn’t traded.

Missing values can wreak havoc. If you feed them directly into a strategy’s calculation, you could end up with nonsensical signals or random error messages. Therefore, any validation must handle these holes wisely. If you look closely, you can see day 1... then day 2 disappears, day 3 reappears, day 4 is gone again—and so on, until your chart looks like an avant-garde painting.
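A quick way to catch those holes before they reach a strategy is to compare the dates you have against the dates you expected. The sketch below is a minimal illustration. It assumes a pandas Series of daily closes and a plain Monday-to-Friday calendar, so real exchange holidays are not modelled and would also be reported as missing. `find_missing_days` is a hypothetical helper, and the prices are made up.

```python
import pandas as pd


def find_missing_days(prices: pd.Series) -> pd.DatetimeIndex:
    """Return weekdays between the first and last date that have no price.

    Assumption: the asset trades every Monday-Friday. Exchange holidays are
    not modelled, so they would be reported as missing too.
    """
    expected = pd.bdate_range(prices.index.min(), prices.index.max())
    return expected.difference(prices.index)


# Hypothetical daily closes: 3 Jan and 5 Jan 2024 never arrived.
closes = pd.Series(
    [100.0, 101.0, 103.0, 102.0],
    index=pd.to_datetime(["2024-01-02", "2024-01-04", "2024-01-08", "2024-01-09"]),
)

missing = find_missing_days(closes)
print(missing.strftime("%Y-%m-%d").tolist())  # ['2024-01-03', '2024-01-05']

# Reindexing onto the full calendar turns each hole into NaN. A 3-day moving
# average returns NaN for any window that contains one of those holes.
full = closes.reindex(pd.bdate_range(closes.index.min(), closes.index.max()))
sma3 = full.rolling(window=3).mean()
print(sma3.isna().all())  # True: every window here touches a hole
```

Two missing days are enough to blank out the entire moving average here. With real data, a handful of holes can silently disable an indicator for long stretches.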

Causes: System outages, trading halts, or your data vendor just dozed off.

What is the effect of this? Your algorithm sees random gaps and might mistake them for stable trends, akin to a person believing their cat teleported just because it left the room.
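The most common quick fix, forward-filling the last known price, is also how gaps turn into fake calm. This minimal sketch uses made-up prices with three consecutive missing days. The filled stretch produces zero returns and a zero short-window volatility, followed by one large catch-up jump.

```python
import numpy as np
import pandas as pd

# Hypothetical closes: the vendor dropped three consecutive days.
prices = pd.Series(
    [100.0, np.nan, np.nan, np.nan, 108.0],
    index=pd.bdate_range("2024-01-01", periods=5),
)

filled = prices.ffill()                   # naive fix: carry the last price forward
returns = filled / filled.shift(1) - 1    # simple daily returns
vol_3d = returns.rolling(window=3).std()  # short rolling volatility

print(returns.round(4).tolist())  # [nan, 0.0, 0.0, 0.0, 0.08]
print(vol_3d.iloc[3])             # 0.0: the gap looks like a perfectly calm market

# Keep a record of which prices were invented so later steps can exclude
# them (and the catch-up return right after them) from any statistics.
was_imputed = prices.isna()
print(int(was_imputed.sum()))     # 3
```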

The next one is what I like to call Thunder in the dataset. Imagine a heavy metal concert with thunderous guitars, booming drums, and someone screaming at the top of their lungs. Trying to hear a whisper in that environment is tough. In the same way, noisy data can bury the faint signals you’re after.

Data noise is essentially the presence of random fluctuations and distortions that blur the “true” price or value. Maybe a single post from an influencer triggers a price spike that reverts quickly, or a day trader with deep pockets pushes the price around just for fun. Noise cuts both ways: you may see patterns that are pure coincidence, or miss real ones because they get swallowed. Good luck. You suspect there’s a hidden pattern, but all you hear is chaotic shrieking and thunder-like drum solos.

For example: Meme-stock mania, day-trader tweets, or random microstructure noise.

What is the consequence? Even if a legit pattern is there, it’s overshadowed by the raucous noise. You might as well be searching for a needle in a head-banging haystack.
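One way to tame spikes that revert quickly is a Hampel filter. It flags any point that sits more than a few robust standard deviations away from a rolling median. This is a generic technique swapped in for illustration, not a method prescribed here. The window of 5 and threshold of 3 are arbitrary assumptions, and the prices are invented.

```python
import numpy as np
import pandas as pd


def _mad(window: np.ndarray) -> float:
    """Median absolute deviation of one window from that window's median."""
    return float(np.median(np.abs(window - np.median(window))))


def hampel_flags(series: pd.Series, window: int = 5, n_sigmas: float = 3.0) -> pd.Series:
    """Flag points that sit far from a centered rolling median (Hampel filter)."""
    rolling = series.rolling(window, center=True, min_periods=1)
    median = rolling.median()
    # 1.4826 rescales the MAD so it estimates a standard deviation for Gaussian data.
    robust_sigma = 1.4826 * rolling.apply(_mad, raw=True)
    return (series - median).abs() > n_sigmas * robust_sigma


# Invented closes with one spike (130.0) that reverts the next day.
prices = pd.Series([100.0, 100.5, 101.0, 100.8, 130.0, 101.2, 101.5, 101.3, 101.8, 102.0])

flags = hampel_flags(prices)
print(flags[flags].index.tolist())  # [4]

# One option: replace flagged points with the local median instead of deleting them.
cleaned = prices.mask(flags, prices.rolling(5, center=True, min_periods=1).median())
print(cleaned.iloc[4])  # 101.2
```

Because the window is centered, each point is judged partly by prices that come after it. That is fine for cleaning a historical dataset. Inside a backtested or live signal, however, it would leak future information, and a trailing window avoids that.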

OK, and what about the third one? I like to call this one the mayfly problem. An adult mayfly famously lives for only a few hours or days. Similarly, you might have only a few weeks or months of data for a new cryptocurrency or a newly listed stock. Validating a strategy on such a short timescale is akin to judging an entire year’s climate from a single afternoon’s weather.

For example: A newly launched altcoin might only have 3 months of trading history.

What's the risk? You might see a massive rally and assume it’s the norm, when it’s just an initial hype wave.
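To put a number on the mayfly problem, consider how uncertain a Sharpe ratio is when it is estimated from a short history. The sketch below uses a common large-sample approximation for the standard error of the per-period Sharpe ratio SR over T periods, SE ≈ sqrt((1 + SR²/2) / T). That approximation assumes i.i.d. normal daily returns. The sketch also assumes 252 trading days per year, and `sharpe_ci` is a hypothetical helper. Real returns are rarely i.i.d., so treat these intervals as optimistic.

```python
import math


def sharpe_ci(daily_sr: float, n_days: int, z: float = 1.96, periods_per_year: int = 252):
    """Approximate confidence interval for an annualized Sharpe ratio.

    Assumes i.i.d. normal daily returns and uses the large-sample standard
    error sqrt((1 + SR**2 / 2) / T) for the daily Sharpe ratio SR.
    """
    se = math.sqrt((1 + 0.5 * daily_sr**2) / n_days)
    scale = math.sqrt(periods_per_year)  # daily -> annual
    return (daily_sr - z * se) * scale, (daily_sr + z * se) * scale


daily_sr = 2.0 / math.sqrt(252)  # looks like an annualized Sharpe of 2.0

short_lo, short_hi = sharpe_ci(daily_sr, n_days=63)    # about 3 months of data
long_lo, long_hi = sharpe_ci(daily_sr, n_days=1260)    # about 5 years of data

print(f"3 months: {short_lo:.2f} to {short_hi:.2f}")  # 3 months: -1.94 to 5.94
print(f"5 years:  {long_lo:.2f} to {long_hi:.2f}")    # 5 years:  1.12 to 2.88
```

With three months of data, the interval comfortably includes a losing strategy, even though the point estimate looks excellent. With five years, the same point estimate is at least distinguishable from zero under these assumptions.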

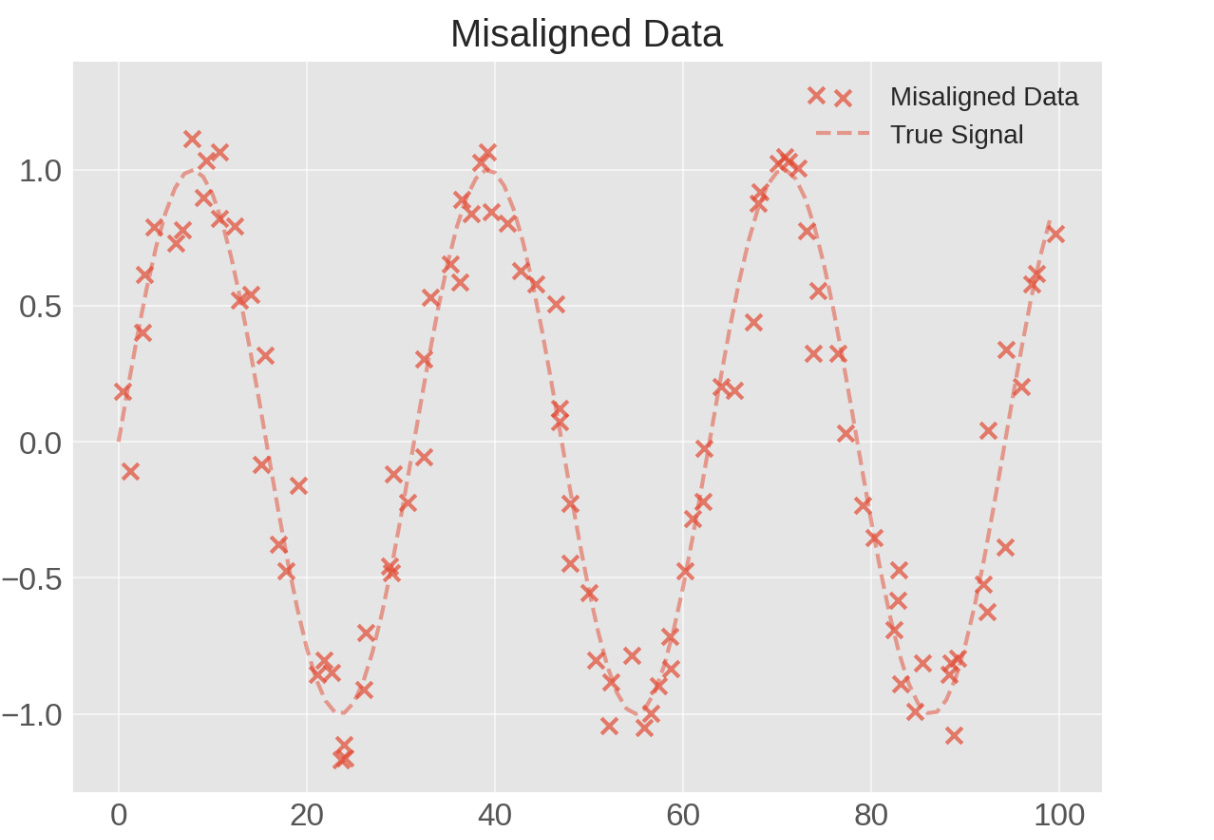

And the last one—no clever labels, just raw, unfiltered dirty data. Or wait, wait—better yet, muggle data. So, sometimes data arrives in jumbled order, or with incorrect timestamps. Attempting to stitch it into a coherent time series is like trying to solve a jigsaw puzzle with pieces from different sets—and someone might have scribbled on them with crayons.

For example: Timestamps in different time zones, volume data that arrives late, or ambiguous date fields where “MM/DD/YYYY” and “DD/MM/YYYY” get mixed up.

What’s the result? Your strategy tries to compare apples to pineapples, generating pineapple-applesauce at best.
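A minimal cleanup for mixed clocks and out-of-order rows is to convert every timestamp to UTC, drop duplicates, and sort. The sketch assumes timestamps carry explicit UTC offsets. It also assumes two rows with the same UTC timestamp describe the same observation, which would be wrong for genuinely distinct trades in the same instant. The column names `ts` and `price` and all values are hypothetical. The last lines show passing an explicit `format` so the parser never has to guess day versus month.

```python
import pandas as pd

# Hypothetical ticks from two feeds: one stamps New York time, one stamps UTC,
# and a late-arriving duplicate sneaks in out of order.
raw = pd.DataFrame(
    {
        "ts": [
            "2024-03-01 09:30:00-05:00",
            "2024-03-01 14:31:00+00:00",
            "2024-03-01 14:30:00+00:00",
            "2024-03-01 09:32:00-05:00",
        ],
        "price": [50.10, 50.20, 50.10, 50.25],
    }
)

# Parse every stamp and convert it to UTC so all rows share one clock.
raw["ts"] = pd.to_datetime(raw["ts"], utc=True)

clean = (
    raw.drop_duplicates(subset="ts", keep="first")  # 09:30 at -05:00 is 14:30 UTC
    .sort_values("ts")
    .set_index("ts")
)
print(clean)

# Ambiguous day/month strings: state the format explicitly instead of letting
# the parser guess. With this format, "03/04/2024" means 3 April, not 4 March.
d = pd.to_datetime("03/04/2024", format="%d/%m/%Y")
print(d.date())  # 2024-04-03
```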

Now that we’ve classified our culprits, let’s talk about why validating a trading strategy is so critical in the first place. After all, if we’re dealing with incomplete or unreliable data, how can we possibly claim success or identify failure?

Imagine you have a brilliant new idea for a strategy: buy a stock whenever Jeff Bezos posts about space travel or Blue Origin, and sell an hour later. Sure, it sounds whimsical, but how do you prove it has any real merit?

Validation is the process of checking whether a strategy that seems promising in your imagination might hold up in more realistic conditions.

With poor data, you’re attempting to glean stable and consistent results from an unstable foundation. This is somewhat akin to trying to guess what shape a puzzle piece should be even when half the edges are missing.

In an ideal setting, we gather large, clean datasets and meticulously slice them into a training portion (to fit or design the strategy’s parameters) and a separate hold-out portion for testing. With poor data, you’re short on many of these resources: you must figure out how to patch the data, gather alternative data, or apply specialized techniques to minimize the risk of concluding nonsense.
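For time series, that split must respect time order. The test slice should come strictly after the training slice; otherwise, future information leaks into the fit. Here is a minimal sketch, assuming a DataFrame with a DatetimeIndex. `chronological_split` and the 70/30 ratio are illustrative choices, not a fixed rule.

```python
import pandas as pd


def chronological_split(df: pd.DataFrame, train_frac: float = 0.7):
    """Split a time-indexed DataFrame into train/test slices without shuffling."""
    if not 0.0 < train_frac < 1.0:
        raise ValueError("train_frac must be strictly between 0 and 1")
    ordered = df.sort_index()
    cut = int(len(ordered) * train_frac)
    # Everything before the cut is for fitting; everything after stays untouched for testing.
    return ordered.iloc[:cut], ordered.iloc[cut:]


# Hypothetical 10-day price history.
history = pd.DataFrame({"close": range(100, 110)}, index=pd.bdate_range("2024-01-01", periods=10))
train, test = chronological_split(history, train_frac=0.7)
print(train.index.max() < test.index.min())  # True: no future data in the training slice
```

With a short or gappy history, the hold-out slice can end up with only a handful of rows. That is exactly the resource shortage poor data creates.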