[WITH CODE] Risk Engine: Circuit breaker

Adaptive p-values, a new frontier in algorithmic trading

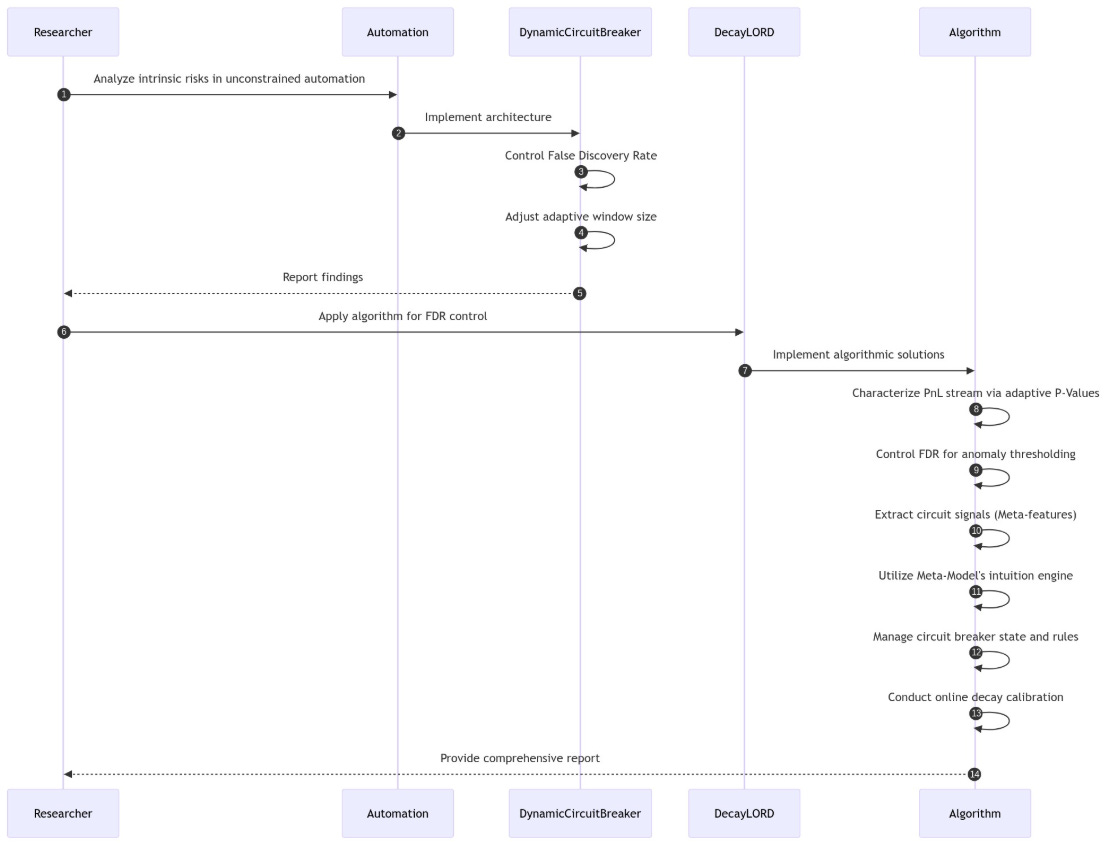

Table of contents:

Introduction.

Intrinsic risks within unconstrained automation.

Dynamic Circuit Breaker architecture

False Discovery Rate control.

Adaptive window size mechanism.

The Decay-LORD algorithm for FDR control.

Meta-learning framework

Algorithmic implementation.

PnL stream characterization through adaptive P-Values.

FDR control for anomaly thresholding.

Extracting circuit signals: Meta-features.

The Meta-Model's intuition engine.

Circuit breaker state management and rules.

Online decay calibration.

Introduction

Once the buy or sell order is opened, success and catastrophe often balance on a knife's edge. Imagine your meticulously crafted trading algorithm humming along, generating steady profits across diverse market conditions. Then suddenly—perhaps triggered by an unexpected geopolitical event or market regime shift—your algorithm begins hemorrhaging capital at an alarming rate. By the time human intervention occurs, significant damage has already been done. This scenario isn't hypothetical; it's a recurring nightmare for quantitative traders worldwide.

The fundamental vulnerability lies in the static nature of most trading safeguards. Traditional circuit breakers typically implement rigid thresholds (for example, stop trading if daily losses exceed x%), but markets are dynamic, complex adaptive systems where yesterday's normal volatility might be today's warning sign. These conventional approaches fail to adapt to changing market conditions and frequently lead to one of two suboptimal outcomes: excessive trading interruptions during benign volatility, or insufficient protection during genuine market dislocations.

The implementation of dynamic circuit breakers introduces its own set of risks. False positives can unnecessarily halt profitable strategies during normal market fluctuations, while false negatives might allow catastrophic losses to accumulate. Statistical methods that are too sensitive create operational inefficiencies; those not sensitive enough fail to provide meaningful protection. Furthermore, circuit breakers that rely solely on historical patterns may fail spectacularly during unprecedented market conditions—precisely when protection is most needed.

Do you remember the March 2020 COVID market crash? During that crash, numerous algorithmic trading systems kept operating in wildly irregular market conditions, resulting in devastating losses across the quantitative trading industry. This pivotal event highlighted the urgent need for more sophisticated, adaptive circuit breaker mechanisms capable of distinguishing between normal volatility and genuine strategy failure.

Intrinsic risks within unconstrained automation

The risks associated with an unconstrained algorithmic model are multi-faceted and insidious. Beyond simple performance degradation, we face systemic vulnerabilities:

An algorithm's actions can exacerbate market movements, leading to positive feedback loops where selling triggers more selling, accelerating losses beyond recoverable points.

Models trained on historical data can be too sensitive to conditions outside their training distribution, rendering them fragile when faced with truly novel market behavior.

A minor flaw in logic, amplified by the speed and scale of algorithmic execution across multiple markets or assets, can lead to significant, correlated losses.

Rare, high-impact events are, almost by definition, extremely difficult to predict and model. An algorithm without a general safety mechanism is particularly exposed to them.

Dynamic Circuit Breaker architecture

Recently, someone I met asked me which circuit breaker I use. Until now I've relied on a fairly effective one, but curiosity got the better of me, so I've been developing new approaches.

This article describes one of them. It's only a prototype and far from production-ready; it still needs a fair amount of refinement, but it will give you an idea of how it works.

The adaptive trading circuit breaker framework falls within the paradigm of algorithmic risk management, or RiskOps. Rather than implementing static loss thresholds, this methodology continuously monitors trading performance through a statistical lens, dynamically adjusting to changing market conditions.

False Discovery Rate control

The cornerstone of our approach lies in statistical rigor—specifically, controlling the False Discovery Rate (FDR) when identifying anomalous trading losses. Let's formalize this mathematically:

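For a profit and loss (PnL) time series \(X_1, X_2, \dots\), we compute at each time \(t\) a p-value \(p_t\) representing the probability of observing a loss at least as extreme as \(X_t\) under normal operating conditions, i.e. \(p_t = \Pr(X \le X_t)\) with \(X\) drawn from the PnL distribution of a correctly functioning strategy.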

The null hypothesis \(H_0\) represents the scenario where the trading algorithm is functioning correctly, while the alternative hypothesis \(H_1\) indicates a trading anomaly requiring intervention.
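The section does not specify how \(p_t\) is estimated. Below is a minimal sketch that assumes a rank-based (empirical) left-tail p-value, computed against a rolling reference window of recent PnL treated as normal operation. `empirical_p_value` and the sample window are hypothetical.

```python
from collections import deque


def empirical_p_value(history, x_t):
    """Left-tail empirical p-value of the latest PnL x_t.

    Estimates P(X <= x_t) under H0 from a reference window of PnL values
    assumed to come from normal operation. The +1 terms keep the p-value
    strictly positive.
    """
    n = len(history)
    at_least_as_extreme = sum(1 for x in history if x <= x_t)
    return (1 + at_least_as_extreme) / (n + 1)


# Hypothetical rolling reference window (keeps at most the last 250 PnL values)
window = deque([0.4, -0.2, 0.1, 0.3, -0.5, 0.2, 0.0, 0.6, -0.1, 0.25], maxlen=250)

# A loss of -0.9 is worse than every value in the window: p = 1 / 11
p = empirical_p_value(window, -0.9)
```

The `+1` in the numerator and denominator makes \(p_t\) a valid p-value when the window and the new observation are exchangeable under \(H_0\). Trending or volatility-clustered PnL can violate that assumption.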

The FDR is defined as:

\[ \mathrm{FDR} = \mathbb{E}\left[\frac{V}{R \vee 1}\right] \]

Where:

\(V\) is the number of false discoveries (incorrectly identified anomalies).

\(R\) is the total number of rejections (total identified anomalies).

\(R \vee 1 = \max(R, 1)\), which keeps the ratio defined when nothing is rejected.
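Computing \(V\) requires knowing which alarms were false, so the FDR can only be measured in simulations or backtests with known ground truth. Below is a hypothetical helper that computes \(V/(R \vee 1)\) for one run and averages it over runs as a Monte Carlo estimate of the expectation.

```python
def false_discovery_proportion(rejected, is_null):
    """V / (R v 1) for a single run.

    rejected[t] is True when the detector flagged time t as anomalous;
    is_null[t] is True when nothing was actually wrong at time t.
    """
    if len(rejected) != len(is_null):
        raise ValueError("rejected and is_null must have the same length")
    R = sum(rejected)
    V = sum(1 for r, null in zip(rejected, is_null) if r and null)
    return V / max(R, 1)  # R v 1 avoids 0/0 when there are no alarms


def estimate_fdr(runs):
    """Monte Carlo estimate of FDR = E[V / (R v 1)] over simulated runs."""
    return sum(false_discovery_proportion(r, n) for r, n in runs) / len(runs)


# Toy ground truth: 3 alarms, 1 of them false -> proportion 1/3
run_a = ([True, False, True, True], [False, False, True, False])
# No alarms at all -> proportion 0 rather than 0/0
run_b = ([False, False], [True, True])
fdr_hat = estimate_fdr([run_a, run_b])  # (1/3 + 0) / 2 = 1/6
```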

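This approach generates time-varying rejection thresholds \(\alpha_t\), flagging an anomaly at time \(t\) whenever \(p_t \le \alpha_t\). The thresholds are chosen so that the FDR stays below the target level \(\alpha\) at every horizon \(T\):

\[ \mathrm{FDR}(T) \le \alpha \quad \text{for all } T \]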

Adaptive window size mechanism

A crucial innovation in this methodology is the dynamic adjustment of the statistical estimation window. Traditional approaches use fixed-size windows, but our framework adapts window size based on observed market volatility:

Where:

\(w_t\) is the adaptive window size at time \(t\).

\(w_{\min}\) and \(w_{\max}\) are the minimum and maximum allowed window sizes.

\(w_{\text{base}}\) is the baseline window size.

\(\sigma_t\) is the current volatility estimate.

\(\sigma_{\text{target}}\) is the target volatility level.

This mechanism addresses a fundamental challenge in statistical estimation. The standard error of a sample mean scales as \(\sigma/\sqrt{n}\), so during high-volatility periods larger samples are required to maintain the same estimation accuracy.
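The exact window formula is not reproduced here. Below is a minimal sketch of one rule consistent with the variables above. It assumes the baseline window is scaled by the volatility ratio \(\sigma_t / \sigma_{\text{target}}\) and clipped to \([w_{\min}, w_{\max}]\). The function name, the defaults, and the `power` parameter are hypothetical.

```python
def adaptive_window(sigma_t, sigma_target, w_base=100, w_min=20, w_max=500, power=1.0):
    """Hypothetical rule: w_t = clip(w_base * (sigma_t / sigma_target)**power, w_min, w_max).

    Higher current volatility -> larger window, as the text requires.
    """
    if sigma_target <= 0:
        raise ValueError("sigma_target must be positive")
    raw = w_base * (sigma_t / sigma_target) ** power
    # Round to a whole number of observations, then clip to the allowed range
    return int(min(w_max, max(w_min, round(raw))))


# Volatility twice the target doubles the window (linear scaling)...
w_linear = adaptive_window(0.02, 0.01)
# ...or quadruples it with power=2
w_square = adaptive_window(0.02, 0.01, power=2)
```

With `power=2`, the rule keeps the standard error of a sample mean roughly constant: \(\sigma_t/\sqrt{w_t} = \sigma_{\text{target}}/\sqrt{w_{\text{base}}}\) when \(w_t = w_{\text{base}}(\sigma_t/\sigma_{\text{target}})^2\). With `power=1`, the window grows more gently.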

The Decay-LORD algorithm for FDR control

The temporal dimension of trading data introduces unique challenges for statistical testing—recent observations carry more information about current market conditions than distant ones. We implement a modified version of the Decay-LORD algorithm, which incorporates memory decay into FDR control:

Where:

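\(\alpha\) is the target FDR level.

\(\delta\) is the memory decay parameter.

\(\gamma_t = \frac{1}{t(t+1)}\) is a decreasing sequence of weights. It sums to one because \(\frac{1}{t(t+1)} = \frac{1}{t} - \frac{1}{t+1}\), so the series telescopes.

\(\mathcal{R}_{t-L-1}\) is the set of previous rejections up to time \(t-L-1\).

\(L\) is the lag parameter that accounts for local dependence between nearby observations.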

This formulation elegantly balances the need for statistical rigor with the reality that financial time series exhibit temporal dependence and non-stationarity.
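The modified Decay-LORD rule itself is not spelled out here, so the following is only a sketch under stated assumptions. It uses a LORD++-style test level in which:

- an initial alpha-wealth \(W_0\) (hypothetical, default \(\alpha/2\)) is spent along \(\gamma_t\);
- each past rejection \(\tau \in \mathcal{R}_{t-L-1}\) earns new wealth;
- that contribution is discounted by \(\delta^{t-\tau}\).

This is not a verified implementation of the published Decay-LORD algorithm, and the function names are hypothetical.

```python
def gamma(t):
    """gamma_t = 1 / (t (t + 1)) for t >= 1; the series telescopes to 1."""
    return 1.0 / (t * (t + 1))


def decay_lord_threshold(t, rejections, alpha=0.05, delta=0.99, lag=0, w0=None):
    """Hypothetical LORD++-style test level alpha_t at time t (1-indexed)."""
    if w0 is None:
        w0 = alpha / 2  # assumed initial alpha-wealth
    if not 0 < w0 <= alpha:
        raise ValueError("w0 must lie in (0, alpha]")
    # Only rejections at or before t - L - 1 count (local-dependence lag)
    usable = sorted(tau for tau in rejections if tau <= t - lag - 1)
    level = w0 * gamma(t)
    for j, tau in enumerate(usable):
        # LORD++ wealth earned per rejection: alpha - w0 for the first, alpha after
        reward = (alpha - w0) if j == 0 else alpha
        # Memory decay: older rejections contribute less
        level += reward * delta ** (t - tau) * gamma(t - tau)
    return level


def run_decay_lord(p_values, **kwargs):
    """Test p_1, p_2, ... in order; return the 1-indexed times rejected."""
    rejections = []
    for t, p in enumerate(p_values, start=1):
        if p <= decay_lord_threshold(t, rejections, **kwargs):
            rejections.append(t)
    return rejections


flagged = run_decay_lord([0.001, 0.5, 0.0005, 0.3], alpha=0.05, delta=0.99)
```

With \(\delta = 1\) and \(L = 0\), this should reduce to plain LORD++. With \(\delta < 1\), old rejections buy progressively less testing budget, so the breaker does not stay permissive long after a burst of alarms.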


Meta-learning framework

While statistical detection provides a robust foundation, market complexity demands an additional layer of intelligence. Our framework incorporates a lightweight online meta-learning model that analyzes higher-order patterns in the trading data:

\[ prob_t = \Pr(y_t = 1 \mid x_t) = \sigma(w^\top x_t) \]

Where:

\(y_t \in \{0, 1\}\) indicates anomaly status at time \(t\).

\(x_t\) represents a feature vector including volatility, skewness, and sign imbalance.

\(w\) is the vector of model weights, updated via online gradient descent.

σ(⋅) is the sigmoid function:

\(\sigma(z) = \frac{1}{1+e^{-z}}\), evaluated at \(z = w^\top x_t\) to give the anomaly probability \(prob_t\).
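The section names the features but not their formulas. Below is a sketch with the following assumptions, all hypothetical, as is `meta_features` itself:

- skewness is the population (moment-based) estimate;
- sign imbalance is defined as (winners minus losers) / n;
- a constant bias term is prepended to \(x_t\).

```python
import math
import statistics


def meta_features(pnl_window):
    """Hypothetical x_t = [bias, volatility, skewness, sign imbalance]."""
    n = len(pnl_window)
    if n < 3:
        raise ValueError("need at least 3 observations")
    mean = statistics.fmean(pnl_window)
    vol = statistics.pstdev(pnl_window)  # population standard deviation
    # Population (moment-based) skewness; defined as 0 for a flat window
    skew = 0.0 if vol == 0 else sum((x - mean) ** 3 for x in pnl_window) / (n * vol ** 3)
    # Sign imbalance in [-1, 1]: +1 if every bar won, -1 if every bar lost
    pos = sum(1 for x in pnl_window if x > 0)
    neg = sum(1 for x in pnl_window if x < 0)
    imbalance = (pos - neg) / n
    return [1.0, vol, skew, imbalance]


# One large loss among small gains: negative skew, more winners than losers
features = meta_features([-3.0, 1.0, 1.0, 1.0])
```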

The weight update rule follows:

\[ w \leftarrow w - \eta \,(prob_t - y_t)\, x_t \]

Where η is the learning rate. This meta-model complements the statistical approach by incorporating domain-specific features that might indicate trading anomalies even when raw PnL values don't trigger statistical thresholds.
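Below is a minimal sketch of the meta-model. It assumes the update is one stochastic gradient step on the logistic log-loss per labelled observation; the gradient of that loss is \((prob_t - y_t)\,x_t\). The class and method names are hypothetical, and how the labels \(y_t\) are obtained online is left open here.

```python
import math


class OnlineLogisticMetaModel:
    """Hypothetical online logistic regression: prob_t = sigmoid(w . x_t)."""

    def __init__(self, n_features, learning_rate=0.1):
        self.w = [0.0] * n_features
        self.eta = learning_rate

    @staticmethod
    def _sigmoid(z):
        # Numerically stable logistic function (avoids overflow in exp)
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        e = math.exp(z)
        return e / (1.0 + e)

    def predict_proba(self, x):
        """Anomaly probability prob_t for feature vector x."""
        return self._sigmoid(sum(wi * xi for wi, xi in zip(self.w, x)))

    def update(self, x, y):
        """One log-loss gradient step: w <- w - eta * (prob_t - y_t) * x_t."""
        error = self.predict_proba(x) - y
        self.w = [wi - self.eta * error * xi for wi, xi in zip(self.w, x)]
        return error


model = OnlineLogisticMetaModel(n_features=2, learning_rate=0.1)
before = model.predict_proba([1.0, 2.0])  # zero weights -> 0.5
model.update([1.0, 2.0], 1)               # labelled anomaly pushes weights up
after = model.predict_proba([1.0, 2.0])
```

Each update costs time linear in the number of features, which keeps the meta-model cheap enough to run on every bar alongside the statistical detector.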